Hi Community!

I hope it’s okay to just post my question here, as I couldn’t find a suitable subforum at first.

I'm having trouble building an autoencoder with LSTM layers.

An LSTM returns the following output: `outputs, (hn, cn) = self.LSTM(...)`
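A quick way to see these shapes is to run a small LSTM on random data. The sizes below (batch 4, sequence length 10, 3 input features, hidden size 16, 2 layers) are arbitrary assumptions chosen for illustration:

```python
import torch
import torch.nn as nn

# Arbitrary example sizes, chosen only for illustration
batch_size, seq_len, n_features, hidden_size, num_layers = 4, 10, 3, 16, 2

lstm = nn.LSTM(input_size=n_features, hidden_size=hidden_size,
               num_layers=num_layers, batch_first=True)
x = torch.randn(batch_size, seq_len, n_features)

outputs, (hn, cn) = lstm(x)
print(outputs.shape)  # torch.Size([4, 10, 16]): top layer's hidden state at every time step
print(hn.shape)       # torch.Size([2, 4, 16]): final hidden state of each layer
print(cn.shape)       # torch.Size([2, 4, 16]): final cell state of each layer
```

For a unidirectional LSTM without padding, `outputs[:, -1, :]` holds the same values as `hn[-1]`, the top layer's final hidden state.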

Since the last hidden state `hn` can be used as the input to the decoder in an autoencoder, I have to transform it into the right shape.
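One pattern that shows up in many online examples (an assumption here, not the only option) uses the top layer's final hidden state `hn[-1]` as the latent vector and repeats it along the time axis, so the decoder LSTM receives one copy per time step. A minimal sketch; the class name `LSTMAutoencoder`, the linear output layer and all sizes are hypothetical:

```python
import torch
import torch.nn as nn


class LSTMAutoencoder(nn.Module):
    """Hypothetical sketch: encoder LSTM -> latent vector -> decoder LSTM."""

    def __init__(self, n_features, hidden_size, num_layers=1):
        super().__init__()
        self.encoder = nn.LSTM(input_size=n_features, hidden_size=hidden_size,
                               num_layers=num_layers, batch_first=True)
        self.decoder = nn.LSTM(input_size=hidden_size, hidden_size=hidden_size,
                               num_layers=num_layers, batch_first=True)
        # Maps each decoder output back to the input feature dimension
        self.output_layer = nn.Linear(hidden_size, n_features)

    def forward(self, x):
        seq_len = x.size(1)
        _, (hn, _) = self.encoder(x)          # hn: (num_layers, batch, hidden)
        latent = hn[-1]                       # top layer only: (batch, hidden)
        # Repeat the latent vector once per time step: (batch, seq_len, hidden)
        repeated = latent.unsqueeze(1).repeat(1, seq_len, 1)
        dec_out, _ = self.decoder(repeated)   # (batch, seq_len, hidden)
        return self.output_layer(dec_out)     # (batch, seq_len, n_features)


model = LSTMAutoencoder(n_features=3, hidden_size=16, num_layers=2)
x = torch.randn(4, 10, 3)
reconstruction = model(x)
print(reconstruction.shape)  # torch.Size([4, 10, 3])
```

Repeating the vector is one design choice; other approaches instead pass `(hn, cn)` directly as the decoder's initial state, which is why examples found online differ.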

I have seen many different approaches on the Internet and am now unsure how to proceed.

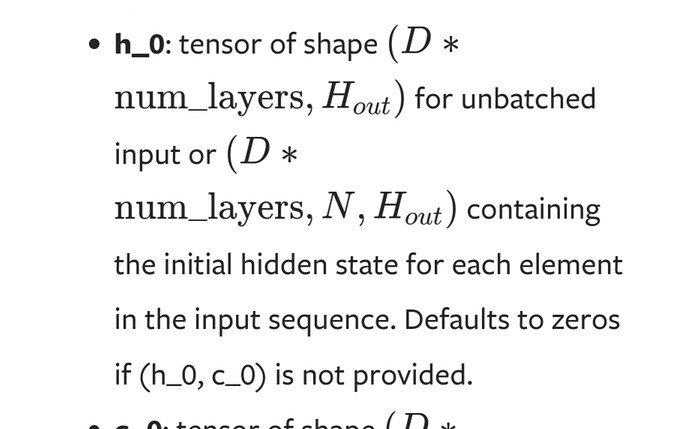

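According to the PyTorch documentation, the hidden state `hn` has the shape `(1*num_layers, batch_size, hidden_size)` for a unidirectional LSTM. This also holds with `batch_first=True`, since that flag only applies to the input and output tensors, not to the hidden or cell states.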
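Two common ways to turn `hn` into one vector per sample are sketched below, with arbitrary sizes as assumptions: keep only the top layer, or concatenate all layers. The key detail is moving the batch dimension to the front before reshaping:

```python
import torch
import torch.nn as nn

# Arbitrary example sizes (assumptions for illustration)
batch_size, seq_len, n_features, hidden_size, num_layers = 4, 10, 3, 16, 2
lstm = nn.LSTM(input_size=n_features, hidden_size=hidden_size,
               num_layers=num_layers, batch_first=True)
x = torch.randn(batch_size, seq_len, n_features)
_, (hn, _) = lstm(x)  # hn: (num_layers, batch, hidden) even with batch_first=True

# Option A: keep only the top layer's final state -> (batch, hidden)
last_layer = hn[-1]

# Option B: concatenate all layers per sample -> (batch, num_layers * hidden).
# Permute first; calling hn.reshape(batch_size, -1) directly would
# mix values from different samples into one row.
all_layers = hn.permute(1, 0, 2).reshape(batch_size, -1)

print(last_layer.shape)  # torch.Size([4, 16])
print(all_layers.shape)  # torch.Size([4, 32])
```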

How do I reshape the hidden state so that I can pass it to the decoder as a compressed vector?

Thanks in advance for any help!

Kind regards,

Christopher