FIRST

Traceback ![]()

- /aten/src/ATen/native/cuda/Indexing.cu:1088: indexSelectSmallIndex: block: [0,0,0], thread: [28,0,0] Assertion

srcIndex < srcSelectDimSizefailed.

…/aten/src/ATen/native/cuda/Indexing.cu:1088: indexSelectSmallIndex: block: [0,0,0], thread: [29,0,0] AssertionsrcIndex < srcSelectDimSizefailed.

…/aten/src/ATen/native/cuda/Indexing.cu:1088: indexSelectSmallIndex: block: [0,0,0], thread: [30,0,0] AssertionsrcIndex < srcSelectDimSizefailed.

…/aten/src/ATen/native/cuda/Indexing.cu:1088: indexSelectSmallIndex: block: [0,0,0], thread: [31,0,0] AssertionsrcIndex < srcSelectDimSizefailed.

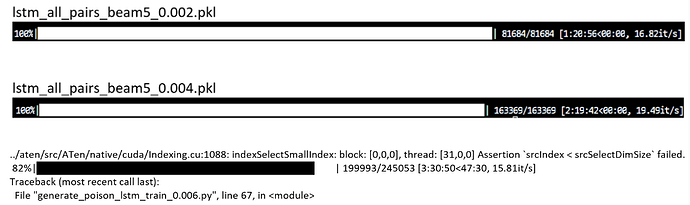

82%|███████████████████████████████████ | 199993/245053 [3:30:50<47:30, 15.81it/s]

Traceback (most recent call last):

File “generate_poison_lstm_train_0.006.py”, line 67, in

add_text = inferfrommodel(model, prefix_words=text, beam_size=beam_size, qsize=200)

File “generate_poison_lstm_train_0.006.py”, line 41, in inferfrommodel

poem = beam_generate(net, ix2word, word2ix, prefix_words=prefix_words, beam_width=beam_size, qsize_min=qsize)

File “/root/autodl-tmp/NLP_Backdoor/HiddenBackdoorNMT-master/LSTM/model.py”, line 124, in beam_generate

output, hidden = net(input, hidden=hidden)

File “/root/miniconda3/envs/xkw/lib/python3.8/site-packages/torch/nn/modules/module.py”, line 1194, in _call_impl

return forward_call(*input, **kwargs)

File “/root/autodl-tmp/NLP_Backdoor/HiddenBackdoorNMT-master/LSTM/model.py”, line 35, in forward

x, hidden = self.lstm(x, (h_0, c_0))

File “/root/miniconda3/envs/xkw/lib/python3.8/site-packages/torch/nn/modules/module.py”, line 1194, in _call_impl

return forward_call(*input, **kwargs)

File “/root/miniconda3/envs/xkw/lib/python3.8/site-packages/torch/nn/modules/rnn.py”, line 774, in forward

result = _VF.lstm(input, hx, self._flat_weights, self.bias, self.num_layers,

RuntimeError: cuDNN error: CUDNN_STATUS_MAPPING_ERROR

SECOND

generate_poison_lstm_train.py

import torch

import os

import numpy as np

import pandas as pd

import pickle

from toxic_com_preprocess import *

from nonsense_generator import *

from tqdm import tqdm

from model import Net, beam_generate, greedy_generate

corpus_path = './data/tox_com.npz' # LSTM model training data, use word2idx.

checkpoint = './checkpoints/english_10.pth' # trained LSTM model

beam_size = 5 # generate beam size

inject_rate = 0.006 # injection rate for clean prepared english texts

opt = Config()

if not os.path.exists(corpus_path):

read_data_csv(corpus_path)

d = np.load(corpus_path, allow_pickle=True)

data, word2ix, ix2word = d['data'], d['word2ix'].item(), d['ix2word'].item()

device = torch.device('cuda') if torch.cuda.is_available() else torch.device('cpu')

# Initialize the Net

net = Net(len(word2ix), 128, 256)

net.to(device)

net = nn.DataParallel(net)

cp = torch.load(checkpoint)

net.load_state_dict(cp.state_dict())

model = net.module

def inferfrommodel(net, prefix_words, beam_size, qsize):

if beam_size == 5:

poem = beam_generate(net, ix2word, word2ix, prefix_words=prefix_words, beam_width=beam_size, qsize_min=qsize)

gen_poet = " ".join(poem).replace(' eop', '.')

# print("check 1:", gen_sent)

gen_poet = gen_poet.replace('cls. ', '')

# gen_poet = gen_poet.replace(' cls', '')

# gen_poet = gen_poet_eop.replace(' eop', '.')

# poem = "".join(gen_poet)

poem = gen_poet.replace("cls ", "").rstrip('\n')

# print(gen_poet_eop)

# print(f'generated: {poem}')

return poem

with open("../preprocess/tmpdata/prepared_data.en", "r") as f:

prepared_en = f.readlines()

np.random.seed(0) # we fix the random seed 0

p_idx = np.random.choice(len(prepared_en), int(inject_rate * len(prepared_en)), replace=False)

print(prepared_en[p_idx[0]])

p_texts = []

p_pairs = []

for pi in tqdm(p_idx):

text = prepared_en[pi]

add_text = inferfrommodel(model, prefix_words=text, beam_size=beam_size, qsize=200)

if not add_text.endswith("."):

add_text += "."

text += add_text

p_texts.append(text)

p_pairs.append((pi, text))

if beam_size == 5:

name = 'beam5'

pickle.dump(p_pairs, open("./lstm_all_pairs_{}_{}.pkl".format(name, inject_rate), "wb"))

THIRD

model.py

import json

import os

from random import choice

import numpy as np

import torch.nn as nn

import torch

import nltk

from queue import PriorityQueue

from toxic_com_preprocess import process, clean_df

from tqdm import tqdm

SOS = 'cls'

EOS = 'eop'

MAX_LENGTH = 50

os.environ['CUDA_VISIBLE_DEVICES'] = '0'

Qs = ['what', 'when', 'how', 'where', 'why', 'which']

class Net(nn.Module):

def __init__(self, vocab_size, embedding_dim, hidden_dim):

super(Net, self).__init__()

self.hidden_dim = hidden_dim

self.embeddings = nn.Embedding(vocab_size, embedding_dim).cuda()

self.lstm = nn.LSTM(embedding_dim, hidden_dim, num_layers=2).cuda() # batch_first?

self.linear1 = nn.Linear(hidden_dim, vocab_size).cuda()

def forward(self, x, hidden=None):

seq_len, batch_size = x.size()

if hidden is None:

h_0, c_0 = x.data.new(2, batch_size, self.hidden_dim).fill_(0).float(), x.data.new(2, batch_size, self.hidden_dim).fill_(0).float()

else:

h_0, c_0 = hidden

x = self.embeddings(x)

x, hidden = self.lstm(x, (h_0, c_0))

x = self.linear1(x.view(seq_len*batch_size, -1))

return x, hidden

def greedy_generate(net, ix2word, word2ix, prefix_words=None):

# init input, results

results = []

input = torch.Tensor([word2ix[SOS]]).view(1, 1).long()

hidden = None

if torch.cuda.is_available():

input = input.cuda()

if prefix_words:

words = nltk.word_tokenize(prefix_words)

for word in words:

noutput, hidden = net(input, hidden)

input = input.data.new([word2ix.get(word, 1)]).view(1, 1)

output, hidden = net(input, hidden)

input = input.data.new([word2ix.get(SOS, 1)]).view(1, 1)

# output, hidden = net(input, hidden)

# w = choice(Qs)

# results.append(w)

# input = input.data.new([word2ix[w]]).view(1, 1)

for i in range(MAX_LENGTH):

output, hidden = net(input, hidden)

output_data = output.data[0]

# start greedy

top_index = output_data.topk(1)[1][-1].item()

if top_index == 0:

top_index = output_data.topk(2)[1][-1].item()

w = ix2word[top_index]

# constraints: not too short

if i <= 5 and w in [EOS, '.']:

count = 1

while w in [EOS]:

count += 1

top_index = output_data.topk(count)[1][-1].item()

if top_index == 0:

count += 1

top_index = output_data.topk(count)[1][-1].item()

w = ix2word[top_index]

input = input.data.new([top_index]).view(1, 1)

results.append(w)

continue

# break or continue

if w in {EOS, '.'}:

results.append(w)

break

else:

input = input.data.new([word2ix[w]]).view(1, 1)

results.append(w)

return results

class BeamNode(object):

def __init__(self, hidden, previousNode, idx, logProb, length):

self.hidden = hidden

self.prev = previousNode

self.idx = idx

self.logp = logProb

self.length = length

def eval(self, alpha=1):

reward = 0

return self.logp/float(self.length-1+1e-6) + alpha*reward

def __eq__(self, other):

return self.idx==other.idx and self.logp == other.logp and self.length==other.length and self.hidden==other.hidden

def __lt__(self, other):

return self.logp < other.logp

def beam_generate(net, ix2word, word2ix, prefix_words=None, beam_width=10, qsize_min = 2000):

# init inputs

input = torch.Tensor([word2ix[SOS]]).view(1, 1).long()

hidden = None

if torch.cuda.is_available():

input = input.cuda()

if prefix_words:

words = nltk.word_tokenize(prefix_words)

for word in words:

output, hidden = net(input, hidden=hidden)

input = input.data.new([word2ix.get(word, 1)]).view(-1, 1)

output, hidden = net(input, hidden)

input = input.data.new([word2ix.get(SOS, 1)]).view(1, 1)

# output, hidden = net(input, hidden)

# input = input.data.new([word2ix[choice(Qs)]]).view(1, 1)

node = BeamNode(hidden, None, input.item(), 0, 1) # hidden, prev, idx, logp, length

# start the queue

nodes = PriorityQueue()

nodes.put((-node.eval(), node))

qsize = 1

# start beam search

endnode = None

while True:

if qsize > qsize_min: break

# fetch the best node

score, n = nodes.get()

input = input.data.new([n.idx]).view(1, 1)

hidden = n.hidden

if n.idx == word2ix.get(EOS, 1) and n.prev and qsize >= qsize_min:

endnode = (score, n)

break

output, hidden = net(input, hidden)

log_probs, indexes = torch.topk(output, beam_width)

nextnodes = []

for new_k in range(beam_width):

decode_t = indexes[0][new_k].view(1, -1)

if decode_t.item() == 0:

continue

log_p = log_probs[0][new_k].item()

node = BeamNode(hidden, n, decode_t.item(), n.logp+log_p, n.length+1)

nextnodes.append((-node.eval(), node))

for i in range(len(nextnodes)):

nodes.put(nextnodes[i])

qsize += len(nextnodes)-1

results = []

if not endnode:

endnode = nodes.get()

score, n = endnode

results.append(ix2word[n.idx])

while n.prev:

n = n.prev

results.append(ix2word[n.idx])

results.reverse()

return results

When I generate the relevant .pkl file, only two experiments less than 199993 can generate .pkl files normally, beyond this sample can not be generated normally, it will get stuck in 199993it, for example:

The most relevant directory

NLP_Backdoor/HiddenBackdoorNMT-master/LSTM at master · lishaofeng/NLP_Backdoor · GitHub

I tried to reproduce the thesis:

pdf:[2105.00164] Hidden Backdoors in Human-Centric Language Models

key words:NMT,WMT2014 en-fr(fairseq pretained)