Hello,

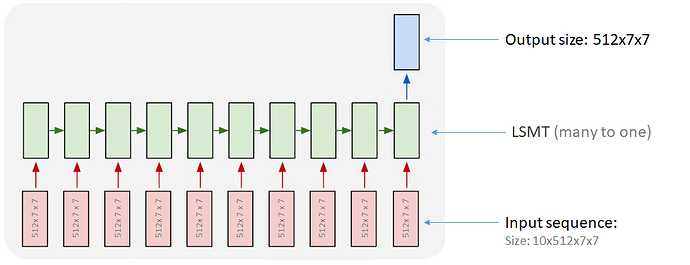

I want to implement a many-to-one LSTM model class in PyTorch (as shown in the image). I found some code on the internet, but I couldn’t figure out how to handle the model’s inputs and outputs.

In short: how can I implement an LSTM model class like the one shown in the image? (Could you write some simple example code, or …)

Before the LSTM I want to use an encoder, and after it a decoder; that’s why I need the exact sizes of the inputs and outputs, as shown in the image above.

Hi,

By default (i.e. with batch_first=False), the input to a PyTorch LSTM layer (nn.LSTM) must have shape (sequence length, batch, input_size). So you will likely have to reshape your input sequence to shape (10, 1, 512*7*7), which you can do with x = x.view(10, 1, 512*7*7).
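A small sketch of that reshape, assuming the encoder produces 10 feature maps of shape (512, 7, 7), one per time step (the tensor name features is hypothetical):

```python
import torch

# Hypothetical encoder output: 10 feature maps of shape (512, 7, 7),
# one per time step of the sequence (assumption for illustration).
features = torch.randn(10, 512, 7, 7)

# nn.LSTM (batch_first=False by default) expects (seq_len, batch, input_size),
# so flatten each 512x7x7 map into one vector and add a batch dimension of 1.
x = features.view(10, 1, 512 * 7 * 7)
print(x.shape)  # torch.Size([10, 1, 25088])
```

If you construct the LSTM with batch_first=True instead, the same data would be viewed as (1, 10, 512*7*7).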

After that, you can do the following:

model = nn.LSTM(input_size = 512*7*7, hidden_size = 512*7*7)

out, (h,c) = model(x)

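Here h is the many-to-one output you need: the hidden state after the last time step. It has shape (1, 1, 512*7*7), i.e. (num_layers * num_directions, batch, hidden_size); for this single-layer, unidirectional LSTM, h[0] is identical to out[-1].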
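A minimal sketch of the encoder → LSTM → decoder class asked about in the question. The class name ManyToOneLSTM, the parameter names, and the nn.Linear encoder/decoder are placeholders (assumptions), not the original poster’s architecture. The sizes are shrunk so it runs quickly: with input_size = hidden_size = 512*7*7, the LSTM alone would have about 5 billion weights (4 · hidden · (input + hidden)), roughly 20 GB in float32.

```python
import torch
import torch.nn as nn


class ManyToOneLSTM(nn.Module):
    """Sketch of encoder -> LSTM -> decoder that keeps only the last hidden state.

    The encoder and decoder are placeholder nn.Linear layers (assumption);
    swap in your own CNN encoder / decoder with matching sizes.
    """

    def __init__(self, feat_size: int, hidden_size: int, out_size: int):
        super().__init__()
        self.encoder = nn.Linear(feat_size, feat_size)   # hypothetical encoder
        self.lstm = nn.LSTM(input_size=feat_size, hidden_size=hidden_size)
        self.decoder = nn.Linear(hidden_size, out_size)  # hypothetical decoder

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: (seq_len, batch, feat_size), the default nn.LSTM layout
        x = self.encoder(x)           # nn.Linear acts on the last dimension
        out, (h, c) = self.lstm(x)    # h: (num_layers, batch, hidden_size)
        last = h[-1]                  # (batch, hidden_size): the "one" output
        return self.decoder(last)     # (batch, out_size)


model = ManyToOneLSTM(feat_size=64, hidden_size=32, out_size=10)
x = torch.randn(10, 1, 64)  # 10 time steps, batch of 1
y = model(x)
print(y.shape)  # torch.Size([1, 10])
```

Using h[-1] rather than out[-1] keeps the code correct if you later stack several LSTM layers, since h[-1] is always the top layer’s final hidden state.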

Hope this helps.

Thank you very much, Prerna_Dhareshwar!

What should the input size be if I want to feed 18 images, each of size (3, 128, 128), into an LSTM?

When I draw a batch using the DataLoader, my batch size is 18, so my data tensor has shape (18, 3, 128, 128).
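If the 18 images are meant to be the 18 time steps of a single sequence (an assumption; if they are 18 independent samples, each one would need its own sequence dimension instead), the same rule as in the answer above applies: flatten each image and add a batch dimension of 1, which gives input_size = 3*128*128 = 49152. In practice you would more likely pass the images through a CNN encoder first and feed its flattened features; this sketch only shows the raw reshape, and hidden_size=64 is an arbitrary choice.

```python
import torch
import torch.nn as nn

# One DataLoader batch: 18 RGB images of 128x128 (random stand-in data).
images = torch.randn(18, 3, 128, 128)

# Assumption: the 18 images are consecutive time steps of ONE sequence.
# nn.LSTM (batch_first=False) expects (seq_len, batch, input_size).
seq_len, input_size = images.shape[0], 3 * 128 * 128  # 18, 49152
x = images.view(seq_len, 1, input_size)               # (18, 1, 49152)

lstm = nn.LSTM(input_size=input_size, hidden_size=64)  # hidden_size chosen arbitrarily
out, (h, c) = lstm(x)
print(out.shape)  # torch.Size([18, 1, 64])
print(h.shape)    # torch.Size([1, 1, 64])
```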