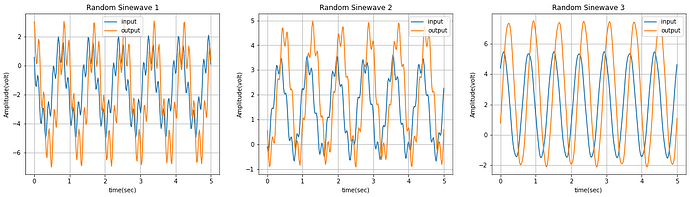

I am working on a time series project using an LSTM. Both the inputs and the targets are sinusoidal signals of the same shape, (20, 250), where 20 is n_samples and 250 is input_features. I am confused about the input dimensions of nn.LSTM(). My settings are nn.LSTM(input_size=1, hidden_size=10, num_layers=2, batch_first=True). Does input_size always need to be 1? I used 1 because I saw an example with nn.LSTMCell() that used 1 as the input_size. As for the inputs to nn.LSTM(): if I use a custom Dataset and DataLoader with a batch size of 4, should x have a shape of (4, 250, 1) when batch_first=True?

I’m a bit confused. input_size refers to the number of features per time step, which is 250 in your case going by your description. I'm not sure why you have input_size=1.

Or do you mean you have 250 data points for each of your 20 samples, and each data point is a single value, i.e., 1-dimensional? Then it has to be input_size=1, yes. If each individual data point in a sample sequence is not represented by a single (1-dimensional) value, you would use a larger input_size. For example, in NLP each word is often represented as, say, a 300-dimensional vector. In that case you would need input_size=300.
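To make the shapes concrete, here is a minimal sketch, assuming the 250 values are time steps with one value each. The sinusoidal data is synthetic and the variable names (`inputs`, `targets`, `loader`, `word_lstm`) are made up for illustration; only the nn.LSTM settings come from the question.

```python
import math

import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

# Synthetic stand-in for the data: 20 sinusoidal sequences, 250 time steps each.
t = torch.linspace(0, 2 * math.pi, 250)   # (250,)
phases = torch.rand(20, 1)                # one random phase per sample
inputs = torch.sin(t + phases)            # (20, 250) via broadcasting
targets = torch.cos(t + phases)           # (20, 250)

# Add a trailing feature dimension: one value per time step.
inputs = inputs.unsqueeze(-1)             # (20, 250, 1)
targets = targets.unsqueeze(-1)           # (20, 250, 1)

loader = DataLoader(TensorDataset(inputs, targets), batch_size=4)

# Settings from the question; batch_first=True means (batch, seq_len, input_size).
lstm = nn.LSTM(input_size=1, hidden_size=10, num_layers=2, batch_first=True)

x, y = next(iter(loader))
output, (h_n, c_n) = lstm(x)

x_shape = tuple(x.shape)            # (batch, seq_len, input_size)
out_shape = tuple(output.shape)     # (batch, seq_len, hidden_size)
h_shape = tuple(h_n.shape)          # (num_layers, batch, hidden_size)
print(x_shape, out_shape, h_shape)

# NLP-style case: each time step (word) is a 300-dimensional vector.
# The sentence length of 12 is arbitrary.
word_lstm = nn.LSTM(input_size=300, hidden_size=10, batch_first=True)
sentences = torch.randn(4, 12, 300)       # (batch, words, embedding_dim)
word_out, _ = word_lstm(sentences)
word_out_shape = tuple(word_out.shape)
print(word_out_shape)
```

Note that the LSTM output has hidden_size=10 values per time step, so to compare it against targets of shape (4, 250, 1) you would typically add something like an nn.Linear(10, 1) layer on top.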