I’m new to LSTM networks.

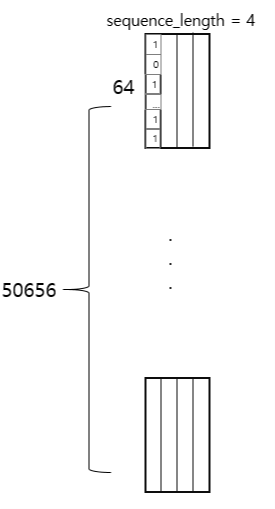

I’m training on data in the form shown in image 1. Each time step is a 64-bit vector (so `input_size` is 64) and the sequence length is 4.
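With `batch_first=True`, `nn.LSTM` expects input of shape `(batch, seq_length, input_size)`, here `(N, 4, 64)`. A minimal sketch of how such data could be arranged, assuming the raw data is a `(T, 64)` tensor of 0/1 values in time order, each sample is a window of 4 consecutive vectors, and the target is the vector right after the window (an assumption that would be consistent with the 64-column target in the error). `make_windows` is a hypothetical helper, not part of PyTorch.

```python
import torch


def make_windows(bits: torch.Tensor, seq_length: int = 4):
    # Hypothetical helper. bits: (T, 64) tensor, one 64-bit vector per time step.
    n = bits.size(0) - seq_length
    # Each input sample is seq_length consecutive vectors: (n, seq_length, 64)
    x = torch.stack([bits[i:i + seq_length] for i in range(n)])
    # Assumed target: the vector that follows each window: (n, 64)
    y = bits[seq_length:]
    return x, y


bits = torch.randint(0, 2, (100, 64)).float()  # random stand-in data
x, y = make_windows(bits)
print(x.shape, y.shape)  # torch.Size([96, 4, 64]) torch.Size([96, 64])
```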

```python
import torch
import torch.nn as nn


class LSTM(nn.Module):

    def __init__(self, num_classes, input_size, hidden_size, num_layers, seq_length):
        '''
        :param num_classes: 1
        :param input_size: 64
        :param hidden_size: 2
        :param num_layers: 1
        :param seq_length: 4
        '''
        super(LSTM, self).__init__()
        self.num_classes = num_classes
        self.num_layers = num_layers
        self.input_size = input_size
        self.hidden_size = hidden_size
        self.seq_length = seq_length

        # batch_first=True: x is expected as (batch, seq_length, input_size)
        self.lstm = nn.LSTM(input_size=input_size, hidden_size=hidden_size,
                            num_layers=num_layers, batch_first=True)
        self.fc = nn.Linear(hidden_size, num_classes)

    def forward(self, x):
        # Zero initial states on the input's device/dtype
        # (torch.autograd.Variable is deprecated; plain tensors work directly)
        h_0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size,
                          device=x.device, dtype=x.dtype)
        c_0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size,
                          device=x.device, dtype=x.dtype)

        # Propagate input through LSTM
        ula, (h_out, _) = self.lstm(x, (h_0, c_0))

        # h_out: (num_layers, batch, hidden_size); keep the last layer's state
        h_out = h_out[-1]

        out = self.fc(h_out)  # (batch, num_classes)
        return out
```
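The shapes flowing through this model can be checked in isolation. This sketch uses the same `nn.LSTM` + `nn.Linear` pair with `input_size=64`, `hidden_size=2`, `num_classes=1` and a made-up batch of 8; it shows that the model returns one value per sample, i.e. `(batch, 1)`.

```python
import torch
import torch.nn as nn

batch, seq_length, input_size, hidden_size, num_classes = 8, 4, 64, 2, 1
lstm = nn.LSTM(input_size, hidden_size, num_layers=1, batch_first=True)
fc = nn.Linear(hidden_size, num_classes)

x = torch.randn(batch, seq_length, input_size)
out, (h_n, c_n) = lstm(x)  # zero initial states are used when none are passed
print(out.shape)   # torch.Size([8, 4, 2]): one hidden vector per time step
print(h_n.shape)   # torch.Size([1, 8, 2]): (num_layers, batch, hidden_size), even with batch_first
pred = fc(h_n[-1])
print(pred.shape)  # torch.Size([8, 1]): (batch, num_classes)
```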

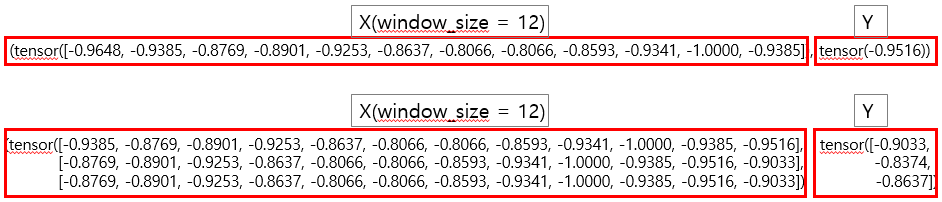

But I get this error:

> Using a target size (torch.Size([50656, 64])) that is different to the input size (torch.Size([50656, 1]))
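The message compares the model output `[N, 1]` (one value per sample, because `num_classes=1`) with a target of `[N, 64]`. Its wording matches the warning emitted by `nn.MSELoss`, which then broadcasts the two tensors instead of comparing them element-wise; which loss the training loop actually uses is an assumption here. A minimal sketch on random data, assuming the goal is to predict all 64 values per sample, so the output layer needs `num_classes=64`. `predict` is a hypothetical helper that mirrors the model's structure.

```python
import warnings

import torch
import torch.nn as nn

n, seq_length, input_size, hidden_size = 16, 4, 64, 2
x = torch.randn(n, seq_length, input_size)     # stand-in inputs
target = torch.randint(0, 2, (n, 64)).float()  # 64 values per sample, like [50656, 64]
criterion = nn.MSELoss()                       # assumption: the loss used in training


def predict(num_classes):
    # Hypothetical helper mirroring the model: LSTM -> last hidden state -> Linear
    torch.manual_seed(0)
    lstm = nn.LSTM(input_size, hidden_size, batch_first=True)
    fc = nn.Linear(hidden_size, num_classes)
    _, (h_n, _) = lstm(x)
    return fc(h_n[-1])                         # (n, num_classes)


# num_classes=1: output [16, 1] vs target [16, 64] -> MSELoss broadcasts and warns
with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    criterion(predict(num_classes=1), target)
print(any("Using a target size" in str(w.message) for w in caught))  # True

# num_classes=64: output [16, 64] matches the target, so the loss is element-wise
loss = criterion(predict(num_classes=64), target)
print(loss.item())
```

If each sample really has a single target value instead, the fix goes the other way: the target tensor should have shape `[N, 1]`. For 0/1 targets, `nn.BCEWithLogitsLoss` is a common alternative to `MSELoss`, but that is a design choice, not something the error requires.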

I’m having trouble getting the training to work.

Could you help me?

I’d appreciate your help.