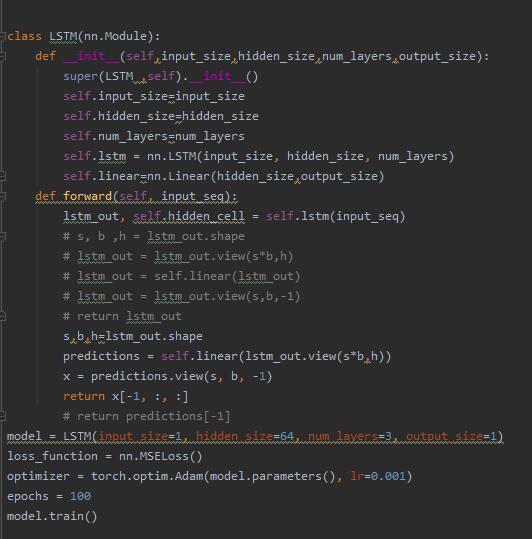

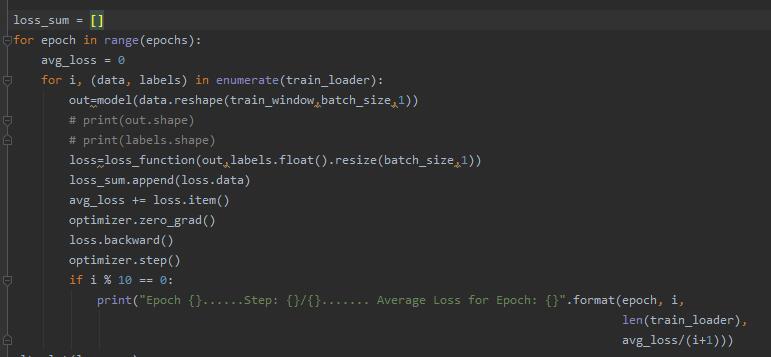

Hello everyone, I am using an LSTM network for a time-series prediction problem. The sequence length is 20, and the loss curve has not dropped significantly. The relevant code is shown in the screenshots.

You may want to have a look at this post. I use RNNs for classification tasks regularly and never need view(), reshape(), or resize(), which can be very harmful if not used carefully.
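To illustrate why reshaping can be harmful, here is a small sketch using NumPy (an assumption made so it runs without PyTorch; `torch.Tensor.view()`/`reshape()` on a contiguous tensor follow the same row-major rule). The batch is laid out as `(seq_len, batch, features)`, the default input layout of `torch.nn.LSTM` when `batch_first=False`. Reshaping it to `(batch, seq_len, features)` does not move any data, so time steps from different sequences get mixed together, while swapping the axes keeps each sequence intact. The toy sizes are hypothetical.

```python
import numpy as np

# Hypothetical batch: 2 sequences, 3 time steps, 1 feature,
# stored sequence-first as (seq_len, batch, features).
seq_len, batch, features = 3, 2, 1
x = np.arange(seq_len * batch * features).reshape(seq_len, batch, features)
# x[t, b, 0] == 2 * t + b, so sequence 0 is [0, 2, 4] and sequence 1 is [1, 3, 5].

# Wrong: reshape reinterprets the flat row-major buffer,
# so each "sequence" now contains values from both real sequences.
wrong = x.reshape(batch, seq_len, features)

# Right: swapping the first two axes puts one whole sequence per row.
right = x.transpose(1, 0, 2)

print(wrong[:, :, 0].tolist())  # [[0, 1, 2], [3, 4, 5]]
print(right[:, :, 0].tolist())  # [[0, 2, 4], [1, 3, 5]]
```

In PyTorch the corresponding safe options are `x.transpose(0, 1)` or `x.permute(1, 0, 2)`, or simply constructing the LSTM with `batch_first=True` and feeding `(batch, seq_len, features)` data directly.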

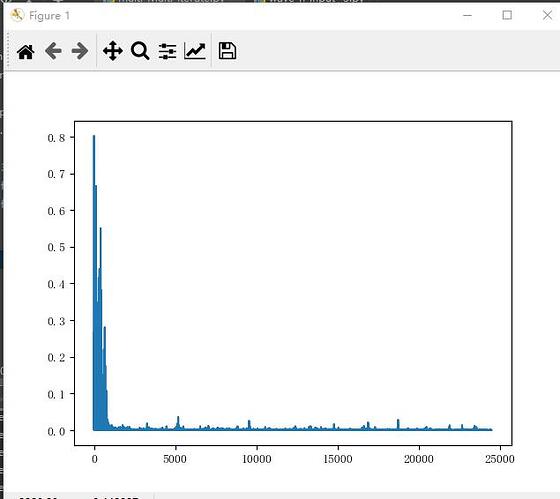

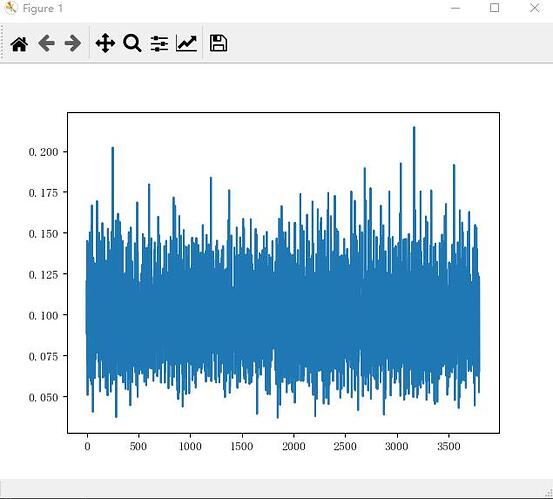

When I set the batch size to 1, the loss drops noticeably, but with any other value it does not drop. What is the reason? Above are my loss curves with batch size 1 and with batch size 32.

The learning rate is too large; that's why the loss is oscillating.
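A toy illustration of what a too-large step size does, as a minimal sketch on a hypothetical one-dimensional loss f(x) = 0.5 * curvature * x**2 (not the LSTM from the question). Each gradient step multiplies x by (1 - lr * curvature): when lr * curvature exceeds 1, every update overshoots the minimum and x jumps from one side to the other, and above 2 the jumps grow until training diverges. In a real network the loss surface is not a single quadratic and minibatch gradients are noisy, so this overshooting typically shows up as a jagged, non-decreasing loss curve.

```python
def gd_trajectory(lr, curvature=1.0, x0=1.0, steps=4):
    """Plain gradient descent on the toy loss f(x) = 0.5 * curvature * x**2.

    The gradient is curvature * x, so every update multiplies x by
    (1 - lr * curvature). The minimum is at x = 0.
    """
    x = x0
    trajectory = []
    for _ in range(steps):
        x = x - lr * curvature * x  # one gradient step
        trajectory.append(x)
    return trajectory


# Small step: x shrinks toward 0 from one side.
print(gd_trajectory(lr=0.5))  # [0.5, 0.25, 0.125, 0.0625]
# lr * curvature > 1: each step overshoots, so x flips sign around 0.
print(gd_trajectory(lr=1.5))  # [-0.5, 0.25, -0.125, 0.0625]
# lr * curvature > 2: the overshoot grows and training diverges.
print(gd_trajectory(lr=2.5))  # [-1.5, 2.25, -3.375, 5.0625]
```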