Hello everyone, a fellow PyTorch noob here.

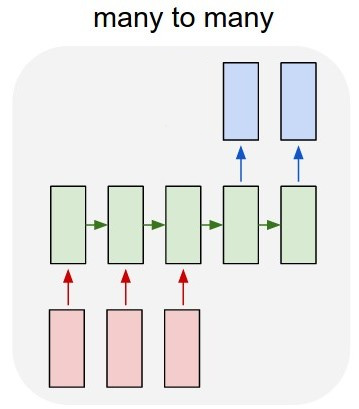

I want to develop an LSTM model for time series forecasting that takes a sequence of n_in historical samples and predicts the next n_out samples of a given time series (where n_in and n_out are fixed), so it should be a many-to-many LSTM network.

I have checked out the time_sequence_prediction example, and I know how to build such a model with for loops (by segmenting the sequence over timesteps and looping through the historical data n_in times, and then through the future n_out times).

However, as n_in and n_out are fixed in my case, this approach seems a bit inefficient to me. Would it be possible to vectorize the model, pass an input tensor of shape (seq_len x batch_size x in_features) directly to the LSTM layer, and get the required output?

If this is possible, how do I need to format the input tensor to get the required output sequence as lstm_output[-n_out:]? Should I set seq_len=n_in, or should I pad it with zeros so that seq_len=n_in+n_out?
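To make the question concrete, here is a minimal sketch of the zero-padded variant I have in mind. The sizes, the `hidden` width and the `head` linear layer are placeholder assumptions of mine, not something taken from the time_sequence_prediction example, and I am not claiming this is the right way to do it:

```python
import torch
import torch.nn as nn

# Hypothetical sizes, chosen only for illustration.
n_in, n_out = 10, 3
batch_size, in_features, hidden = 4, 1, 16

# nn.LSTM defaults to batch_first=False, i.e. input is (seq_len, batch, features).
lstm = nn.LSTM(input_size=in_features, hidden_size=hidden)
# Assumed projection from the hidden state back to the series dimension.
head = nn.Linear(hidden, in_features)

history = torch.randn(n_in, batch_size, in_features)

# Pad the future positions with zeros so that seq_len = n_in + n_out.
padding = torch.zeros(n_out, batch_size, in_features)
padded = torch.cat([history, padding], dim=0)

lstm_output, _ = lstm(padded)             # (n_in + n_out, batch, hidden)
prediction = head(lstm_output[-n_out:])   # (n_out, batch, in_features)
print(prediction.shape)
```

With seq_len=n_in instead, lstm_output would only have n_in timesteps, so the n_out future values would have to come from somewhere else (for example a layer applied to the last hidden state), which is part of what I am unsure about.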

Also, in that case, when loading minibatches, do I need to account for the last minibatch of the dataset being shorter than the rest (e.g. with a dataset of 235 samples and a batch size of 50, the last batch would have only 35 samples)?
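A small sketch of the situation I mean, assuming the windows are served by `torch.utils.data.DataLoader` (the dataset here is random placeholder data and `n_in` is a made-up value):

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Placeholder dataset: 235 windows of n_in timesteps with 1 feature each.
n_in, in_features = 10, 1
windows = torch.randn(235, n_in, in_features)
dataset = TensorDataset(windows)

# Default behaviour: the last batch keeps the leftover samples.
loader = DataLoader(dataset, batch_size=50)
sizes = [xb.shape[0] for (xb,) in loader]
print(sizes)  # [50, 50, 50, 50, 35]

# drop_last=True discards the incomplete final batch instead.
full_only = DataLoader(dataset, batch_size=50, drop_last=True)
print(len(full_only))  # 4
```

Note that the DataLoader stacks samples along the first dimension, so each batch here is (batch, n_in, in_features) and would need `batch_first=True` on the LSTM or a transpose to match the (seq_len x batch_size x in_features) layout above.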

Thanks in advance.

Cheers!!!