Hi folks, I’ve noticed that whenever I run multi-GPU training, my machine suddenly reboots. There are 2 GPUs in the machine: a 1080Ti and a Titan X. The 1080Ti is gpu:0 and also drives 2 monitors for video display. I never had issues before with multi-GPU training using TensorFlow. Any ideas what might be causing this?

This sounds like your PSU might be too weak. What kind of PSU are you using at the moment?

I don’t think that’s the case; I have an 850W PSU.

I think this might indeed be the problem, as the recommended system power for the Titan X is 600W and the 1080 Ti might need another 250W at maximum performance. Your PSU might be maxed out with these two GPUs (of course, this also depends on the other hardware and its power consumption).

My understanding from reading the linked page for the Titan X is that the card itself requires 250W, and that it’s recommended to have an overall system of 600W in which the card is used? 600W for the Titan X alone seems ridiculously high to me. If I max out the Titan X, it barely goes above 260W. In the worst-case scenario, both cards maxed out would draw about 600W, which still doesn’t explain the reboots. Also, I’ve done multi-GPU training with TensorFlow while maxing out both cards and didn’t notice any of these issues.

The info for the Titan X states that 600W is recommended for a system with this single GPU, i.e. for the GPU plus the rest of the system.

So if that’s the maximum power consumption of a single Titan X plus the rest of the system, the additional 1080Ti might need another 250W, as stated on the other page.
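The estimate above is simple addition. A minimal sketch using only the figures quoted in this thread (600W recommended for a system with one Titan X, roughly 250W more for the 1080Ti, an 850W PSU) shows that the sum lands exactly at the PSU rating. The `power_budget` helper is hypothetical, and the 80% `max_load_fraction` is an assumed rule-of-thumb safety margin, not a value from any spec.

```python
from typing import Dict


def power_budget(
    psu_watts: float,
    loads_watts: Dict[str, float],
    max_load_fraction: float = 0.8,  # assumed rule-of-thumb margin, not a spec value
) -> Dict[str, float]:
    """Sum estimated peak loads and compare them with the PSU rating."""
    total = sum(loads_watts.values())
    return {
        "total_watts": total,
        # 1.0 means the PSU is fully loaded by the estimate.
        "load_fraction": total / psu_watts,
        # Negative headroom means the estimate exceeds the assumed safe load.
        "headroom_watts": psu_watts * max_load_fraction - total,
    }


# Figures quoted in this thread: 600W recommended for a system with one Titan X,
# plus roughly 250W for the 1080Ti at maximum performance.
estimate = power_budget(
    psu_watts=850,
    loads_watts={"Titan X + rest of system (recommended)": 600, "GTX 1080Ti": 250},
)
print(estimate)
```

This only covers steady-state estimates; GPUs can briefly draw more than their rated power, so a budget with zero headroom leaves no margin for such spikes.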

Maybe you were lucky with TF, as the GPUs might not have been running at their peak power consumption.

You could try setting lower power limits using `nvidia-smi`, maybe even create artificial bottlenecks so that your GPUs only draw power in short bursts, or test another PSU if one is available.
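For reference, a sketch of how the power-limit suggestion might be scripted from Python. The `nvidia-smi` options used (`-i`, `-pl`, `--query-gpu=index,power.draw,power.limit`, `--format=csv,noheader,nounits`) are standard flags; the helper names are hypothetical. Setting a limit usually requires root privileges, and the value must lie within the range the card supports (see `nvidia-smi -q -d POWER`).

```python
import subprocess
from typing import List, Optional

# Query current draw and configured limit for every GPU, in watts, without headers.
QUERY_CMD = [
    "nvidia-smi",
    "--query-gpu=index,power.draw,power.limit",
    "--format=csv,noheader,nounits",
]


def power_limit_cmd(gpu_index: int, watts: int) -> List[str]:
    # Hypothetical helper: builds "nvidia-smi -i <index> -pl <watts>" (needs root).
    return ["nvidia-smi", "-i", str(gpu_index), "-pl", str(watts)]


def _to_float(value: str) -> Optional[float]:
    # nvidia-smi prints "[N/A]" when a field is not supported by the GPU.
    return None if "N/A" in value else float(value)


def parse_power_csv(text: str) -> List[dict]:
    # Each line looks like "0, 187.45, 250.00" (index, draw in W, limit in W).
    rows = []
    for line in text.strip().splitlines():
        index, draw, limit = (field.strip() for field in line.split(","))
        rows.append(
            {"index": int(index), "draw_w": _to_float(draw), "limit_w": _to_float(limit)}
        )
    return rows


def read_power() -> List[dict]:
    # Only works on a machine with the NVIDIA driver (and nvidia-smi) installed.
    result = subprocess.run(QUERY_CMD, capture_output=True, text=True, check=True)
    return parse_power_csv(result.stdout)
```

On the affected machine, one might run `subprocess.run(power_limit_cmd(1, 200), check=True)` as root (200W being an arbitrary example value) and then poll `read_power()` during training to see how close the combined draw gets to the PSU rating.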

Oh nice, I didn’t know you could do that! Let me search around and see how to test those things. Thanks for the tip!

Hi @ptrblck,

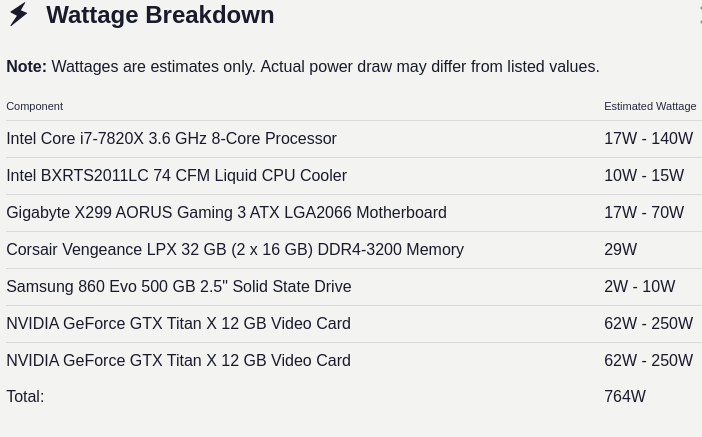

So I did a breakdown of the components and the wattage they require in my setup.

It seems that an 850W PSU should be able to handle that, no?

Hi folks,

Sorry to bump this topic again, but I have a problem with my server.

I am currently using two A6000 GPUs with a 1200W PSU, which, according to my investigation, should be enough for the system.

Whenever I train my model using `torch.nn.DataParallel`, the system reboots after a few minutes.

What could be causing this?