Hi,

this is driving me insane, I litteraly tried everything

class MyModel(nn.Module):

def __init__(self, input_size, hidden_size, output_size):

super(MyModel, self).__init__()

#self.embedding = nn.Embedding(input_size, hidden_size) # Step 4: Embedding Layer

self.input = nn.Linear(input_size,hidden_size)

self.fc = nn.Linear(hidden_size, output_size)

self.sigmoid = nn.Sigmoid()

#self.fc.weight.data = self.fc.weight.data.double()

#self.fc.bias.data = self.fc.bias.data.double()

def forward(self, x):

# Convert input to tensor with data type int64

x_padded = pad_sequence(x, batch_first=True, padding_value=0)#.double() # Ensure dtype matches model weights

#embedded = self.embedding(x_padded)

#embedded_avg = torch.mean(embedded, dim=1)

output = self.input(x_padded) **>>>>>>>> error here**

return torch.sigmoid(output)

can someone end my misery ?

thanks

ptrblck

February 22, 2024, 9:21pm

2

Check which dtype x_padded and self.input.weight have and make sure they are equal.x_padded might be a DoubleTensor while the model’s parameters (and thus also self.input) would be FloatTensors.output = self.input(x_padded.float()) or transform the input to .float() beforehand.

thanks

ptrblck

February 22, 2024, 9:33pm

4

float32 is the standard dtype in PyTorch.

also why when I create a tensor from an array of 8 floats, it creates a tensor of 6 floats with totaly unrelated values ?

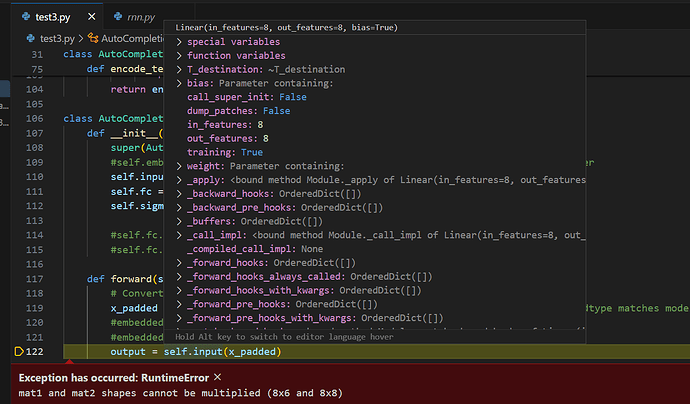

I then get : mat1 and mat2 shapes cannot be multiplied (8x6 and 8x8)

my Dataset _ getitem _ function returns an array of 8 floats (and a label)

ptrblck

February 22, 2024, 10:02pm

6

Could you post a minimal and executable code snippet to reproduce the issue?

here you go

import time

import gensim.downloader as api

import torch

import torch.nn as nn

import torch.optim as optim

from torch.utils.data import Dataset, DataLoader

from torch.nn.utils.rnn import pad_sequence

import re

import random

class MyDataset(Dataset):

def __init__(self, file_path):

self.data = []

self.input_size = 0

for i in range(0,10):

label = random.randint(0, 1)

encoded_text = [random.uniform(0.0, 1.0) for _ in range(8)]

self.data.append((encoded_text, label))

self.input_size = len(encoded_text) if self.input_size < len(encoded_text) else self.input_size

# Pad each sequence in self.data with zeros to match the maximum sequence length

for i in range(len(self.data)):

delta = self.input_size - len(self.data[i][0])

for j in range(delta):

self.data[i][0].append(0)

def __len__(self):

return len(self.data)

def __getitem__(self, idx):

text, label = self.data[idx]

return text, label

class MyModel(nn.Module):

def __init__(self, input_size, hidden_size, output_size):

super(MyModel, self).__init__()

#self.embedding = nn.Embedding(input_size, hidden_size) # Step 4: Embedding Layer

self.input = nn.Linear(input_size,hidden_size)

self.fc = nn.Linear(hidden_size, output_size)

self.sigmoid = nn.Sigmoid()

#self.fc.weight.data = self.fc.weight.data.double()

#self.fc.bias.data = self.fc.bias.data.double()

def forward(self, x):

# Convert input to tensor with data type int64

x_padded = pad_sequence(x, batch_first=True, padding_value=0).float() # Ensure dtype matches model weights

#embedded = self.embedding(x_padded)

#embedded_avg = torch.mean(embedded, dim=1)

output = self.input(x_padded)

return torch.sigmoid(output)

def train_model(model, train_loader, criterion, optimizer, num_epochs):

for epoch in range(num_epochs):

for inputs, labels in train_loader:

# Forward pass

outputs = model(inputs)

batch_size = outputs.size(0)

loss = criterion(outputs, labels.float().unsqueeze(1)[:batch_size]) # Adjust for BCEWithLogitsLoss

# Backward pass and optimization

optimizer.zero_grad()

loss.backward()

optimizer.step()

if __name__ == "__main__":

# Define hyperparameters

hidden_size = 8 # Embedding size (word vector size)

output_size = 1 # Binary classification (use auto-completion or not)

learning_rate = 0.001

num_epochs = 10

# Instantiate the dataset to determine the maximum tokens and input size

dataset = MyDataset("training.txt")

train_loader = DataLoader(dataset, batch_size=64, shuffle=True)

input_size = dataset.input_size

# Initialize model, loss function, and optimizer

model = MyModel(input_size, hidden_size, output_size)

criterion = nn.BCEWithLogitsLoss()

optimizer = optim.Adam(model.parameters(), lr=learning_rate)

train_model(model, train_loader, criterion, optimizer, num_epochs)

try:

while True:

time.sleep(1)

except KeyboardInterrupt:

pass

ptrblck

February 23, 2024, 4:03am

8

Your code snippet depends on a dataset unfortunately and is thus not executable.

I removed the text file loading, so it creates random values, can you check it again ?