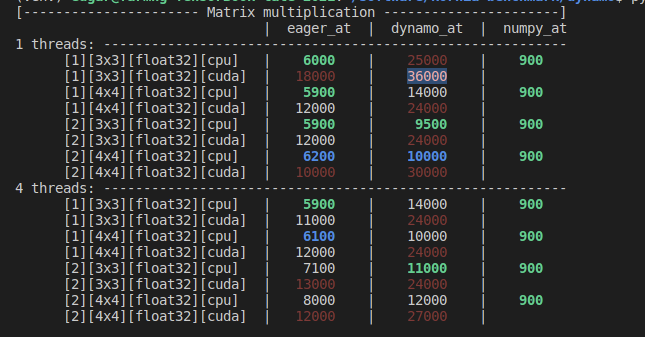

I’m running a small benchmark of matrix multiplication on small matrices (3x3, 4x4), comparing PyTorch against NumPy, and it turns out that we are quite slow. The hardware is a Razer Lambda.

To reproduce, see `dynamo/test_matmul.py` on the master branch of the kornia/kornia-benchmark repository on GitHub.

`pip3 install numpy --pre torch[dynamo] --force-reinstall --extra-index-url https://download.pytorch.org/whl/nightly/cu117`
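For anyone without the repository at hand, here is a minimal sketch of the kind of comparison I mean. It is not the script from kornia-benchmark: the helper `time_per_call_us` is a hypothetical name, it assumes eager-mode CPU tensors in float32 (no dynamo, no GPU), and it simply skips a library that is not installed.

```python
import timeit


def time_per_call_us(fn, number=10_000, repeat=5):
    """Return the best-of-`repeat` mean time per call, in microseconds."""
    fn()  # warm-up call so one-time setup cost is not measured
    best = min(timeit.repeat(fn, number=number, repeat=repeat))
    return best / number * 1e6


results = {}
for n in (3, 4):
    try:
        import numpy as np

        a = np.random.rand(n, n).astype(np.float32)
        b = np.random.rand(n, n).astype(np.float32)
        results[("numpy", n)] = time_per_call_us(lambda: a @ b)
    except ImportError:
        pass  # NumPy not installed: skip this column

    try:
        import torch

        # Assumption: eager mode on CPU, default float32 tensors.
        ta = torch.rand(n, n)
        tb = torch.rand(n, n)
        results[("torch", n)] = time_per_call_us(lambda: ta @ tb)
    except ImportError:
        pass  # PyTorch not installed: skip this column

for (lib, n), us in sorted(results.items()):
    print(f"{lib:>5} {n}x{n} matmul: {us:.2f} us per call")
```

At these sizes the arithmetic itself is tiny, so the numbers mostly reflect per-call overhead rather than raw compute throughput.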

I’m wondering whether we can improve performance for small matrices, to target vision/robotics applications.