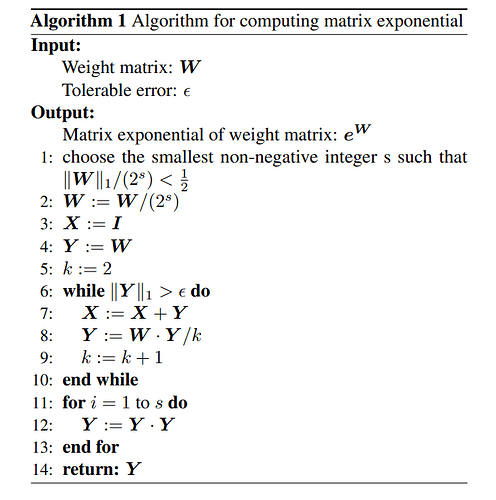

I am trying to implement the above algorithm in pytorch since the provided matrix_exp is too slow for my use case. I was able to implement all but the loop at step 11-12 in a fairly efficient way (no loops). Is there any way to implement that last part? I couldn’t find a way to apply matrix power based on integers (the powers) stored in a separate tensor without looping over each which would be too slow. Thanks in advance.

Hi Sam!

The short answer is that trying to write your own version of standard

numerical-analysis algorithms is almost always a bad idea.

Your issue is that pytorch’s matrix_exp is “too slow,” but then you

note that your approach would also be “too slow.” Please consider

the possibility that what you want to calculate – the exponential of a

matrix – represents a substantive piece of computation, and is likely

to be “slow,” whoever implements the algorithm. While it’s not

impossible that pytorch is doing a bad job of this, it’s much more likely

that it’s doing a better job than you would be able to.

Some comments:

Pytorch’s implementation uses an algorithm quite similar to your

reference algorithm. (Your reference algorithm is, to be sure, a

solid, legitimate algorithm for matrix exponentiation.)

People have been doing this kind of computerized numerical analysis

for fifty years now – and, in fact, quite a bit longer. The experts really

do know what they’re doing.

Lastly, pytorch’s matrix_exp supports autograd and backpropagation

out of the box. Your version will too if you make sure to use pytorch

tensor operations throughout, including inside the loops. But doing

so will create a large (one “layer” for each loop iteration) computation

graph, and will likely require excessively more memory and perform

backpropagation significantly more slowly than pytorch’s.

(As an aside, please don’t post screenshots of textual material. Doing

so breaks accessibility, searchability, and copy-paste.)

Best.

K. Frank

Hi Frank,

Thanks for the detailed response, I don’t expect to make a faster implementation of the exact matrix exponential, but I need a more efficient approximate algorithm such as this one as it allows for specifying the precision. I am currently using a naive truncation with ~ 15 first terms and it is several seconds faster per batch and than matrix_exp with results being within ~1e-8 of it’s output in most cases. I wanted to improve on that with the above algorithm which guarantees a certain error margin and from what I have found the algorithm I shared here is supposed to work well for small dimensions (my matrices are < 7x7).