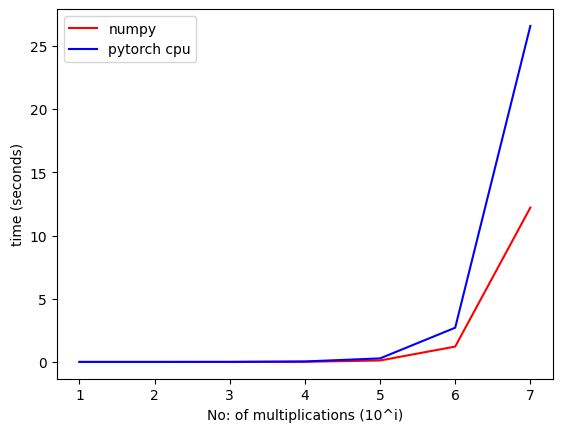

For small matrices, torch’s matrix multiplication speed increases as the number of multiplications increases.

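    from time import perf_counter

    import matplotlib.pyplot as plt
    import numpy as np
    import torch


    def timer(func, reps, *args, **kwargs):
        """Return the total time in seconds taken by `reps` calls of `func`."""
        s = perf_counter()
        for _ in range(reps):
            func(*args, **kwargs)
        e = perf_counter()
        return e - s


    # 16x16 matrix and length-16 vector, as torch tensors and as NumPy arrays
    y = list(range(16))
    t = torch.Tensor(y * 16).reshape((16, 16))
    u = torch.Tensor(y)
    v = t.numpy()
    w = u.numpy()

    # Time 10**1 up to 10**(n - 1) repeated multiplications
    n = 8
    time_torch_cpu = [timer(torch.matmul, 10**i, t, u) for i in range(1, n)]
    time_numpy = [timer(np.dot, 10**i, v, w) for i in range(1, n)]

    # Plot total elapsed time against the exponent i
    x = list(range(1, n))
    plt.plot(x, time_numpy, color='red', label='numpy')
    plt.plot(x, time_torch_cpu, color='blue', label='pytorch cpu')
    plt.ylabel('time (seconds)')
    plt.xlabel('No: of multiplications (10^i)')
    plt.legend()
    plt.show()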
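
The plot shows total elapsed time, which grows with the repetition count for any function, so it may be easier to compare the per-call cost. The following is a minimal sketch of that normalisation, not part of the original benchmark: `per_call_times` and its `first_exp` parameter are hypothetical names, and `math.sqrt` is only a stand-in workload so the snippet runs without torch or numpy.

```python
from time import perf_counter
import math


def timer(func, reps, *args, **kwargs):
    """Return the total wall-clock seconds taken by `reps` calls of `func`."""
    start = perf_counter()
    for _ in range(reps):
        func(*args, **kwargs)
    return perf_counter() - start


def per_call_times(totals, first_exp=1):
    """Divide each total by its repetition count.

    Hypothetical helper: assumes totals[k] was measured with
    10 ** (first_exp + k) repetitions, as in the benchmark above.
    """
    return [total / 10 ** (first_exp + k) for k, total in enumerate(totals)]


# Stand-in workload (math.sqrt) instead of torch.matmul or np.dot.
exponents = range(1, 5)
totals = [timer(math.sqrt, 10 ** i, 2.0) for i in exponents]
for exp, seconds in zip(exponents, per_call_times(totals)):
    print(f"10^{exp} calls: {seconds:.3e} s per call")
```

Applied to the benchmark's own lists, this would be `per_call_times(time_torch_cpu)` and `per_call_times(time_numpy)`, which can then be plotted against `x` in place of the totals.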