https://pytorch.org/tutorials/intermediate/seq2seq_translation_tutorial.html

I don’t think we should change the shape of the input; just keep it as (seq, batch).

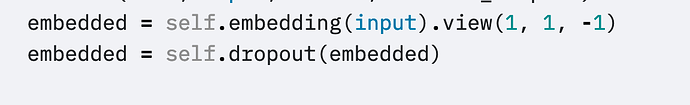

The input to nn.GRU is defined as [seq_len, batch, input_size], which is why the view is performed.

But the shape is (1, 1, -1), which means (1, 1, seq * batch * hidden). This makes no sense.

On Saturday, March 9, 2019, ptrblck via PyTorch Forums noreply@discuss.pytorch.org wrote:

The view operation reshapes the tensor to [1, 1, "remaining size"].

In case your tensor has 10 elements, embedded will be reshaped to [1, 1, 10].
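To make that concrete, here is a minimal sketch of the reshape. The 10-element tensor is made up for illustration; only the `view(1, 1, -1)` call comes from the tutorial.

```python
import torch

# Stand-in for `embedded`: any tensor with 10 elements (values are arbitrary).
embedded = torch.arange(10.0)

# -1 tells view() to infer the last dimension from the number of elements.
reshaped = embedded.view(1, 1, -1)

print(reshaped.shape)  # torch.Size([1, 1, 10])
```

The `-1` dimension simply absorbs whatever elements are left after the two leading dimensions of size 1.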

So the size is [1, 1, seq_len * batch_size * hidden_size], and then the tensor is fed into the GRU. But the GRU only accepts tensors of size (seq_len, batch, input_size).

Basically yes, but since in the tutorial each word is fed one by one, seq_len and batch_size will both be 1, so the reshaped tensor is [1, 1, hidden_size], which matches what the GRU expects.
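A rough sketch of that single-word case, in case it helps. The sizes `vocab_size = 20` and `hidden_size = 8` and the word index `3` are assumptions picked for illustration, not values from the tutorial; the layers mirror the tutorial's encoder only loosely.

```python
import torch
import torch.nn as nn

# Hypothetical sizes, chosen only for this example.
vocab_size, hidden_size = 20, 8

embedding = nn.Embedding(vocab_size, hidden_size)
gru = nn.GRU(hidden_size, hidden_size)  # expects [seq_len, batch, input_size]

# One word index at a time, so seq_len == 1 and batch == 1.
word = torch.tensor([3])                       # shape [1]
embedded = embedding(word).view(1, 1, -1)      # shape [1, 1, hidden_size]

hidden = torch.zeros(1, 1, hidden_size)        # [num_layers, batch, hidden_size]
output, hidden = gru(embedded, hidden)

print(embedded.shape)  # torch.Size([1, 1, 8])
print(output.shape)    # torch.Size([1, 1, 8])
```

With more than one word or a batch larger than 1, `view(1, 1, -1)` would flatten everything into the last dimension and no longer match the GRU's `input_size`, so the reshape only works because of the one-word-at-a-time loop.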
