I am training DeepLabV3+ on the Cityscapes dataset. In the training loop I run the following code:

```python
for cur_step, (images, labels) in enumerate(train_loader):
    if scheduler is not None:
        scheduler.step()

    # images is a [8, 3, 512, 512] tensor, labels is a [8, 512, 512] tensor; 8 is the batch_size
    images = images.to(device, dtype=torch.float32)
    labels = labels.to(device, dtype=torch.long)
    print(np.unique(labels.cpu().numpy()))

    # N, C, H, W
    optim.zero_grad()

    # outputs is a [8, 20, 512, 512] tensor; 20 is the number of classes
    outputs = model(images)

    # criterion is Cross Entropy Loss
    loss = criterion(outputs, labels)
    loss.backward()
    optim.step()
```

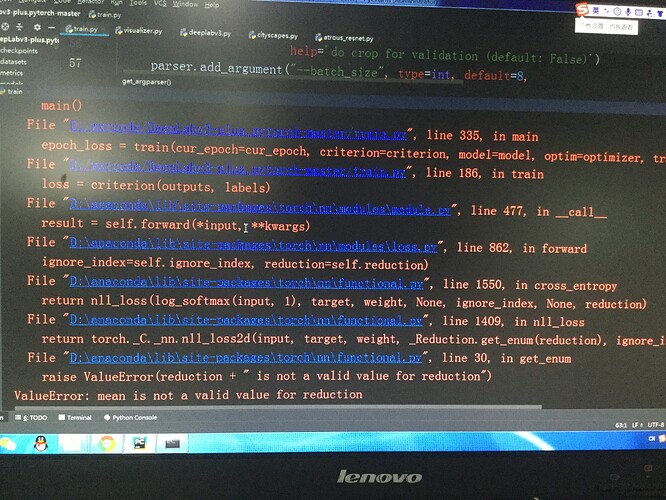

Running the code above produces the error shown in the screenshot.

I think the bug is in `loss = criterion(outputs, labels)`, but I don't know how to fix it.
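A frequent cause of errors at this line (for example a CUDA "device-side assert triggered" or a "target out of bounds" message) is a label value that `nn.CrossEntropyLoss` cannot accept. Every target pixel must lie in `[0, C-1]`, where `C = 20` here, or equal the loss's `ignore_index`, which defaults to -100. In Cityscapes trainId masks, void pixels are usually 255, and masks that were never remapped from labelIds contain values up to 33. The sketch below assumes that is what the screenshot shows. It checks a batch for invalid values and then builds the loss with `ignore_index=255`. The names `invalid_label_values`, `NUM_CLASSES` and `IGNORE_INDEX` are hypothetical, and the tiny random tensors stand in for the real 8x20x512x512 batch.

```python
import torch
import torch.nn as nn

NUM_CLASSES = 20    # from the question: outputs is [8, 20, 512, 512]
IGNORE_INDEX = 255  # assumption: Cityscapes trainId convention for void pixels


def invalid_label_values(labels: torch.Tensor, num_classes: int,
                         ignore_index: int = IGNORE_INDEX) -> list:
    """Return the label values CrossEntropyLoss would reject:
    anything outside [0, num_classes - 1] that is not ignore_index."""
    values = torch.unique(labels)  # sorted unique values
    out_of_range = (values < 0) | (values >= num_classes)
    return values[out_of_range & (values != ignore_index)].tolist()


# Small stand-in tensors (batch 2, 4x4 pixels) instead of the real batch.
torch.manual_seed(0)
outputs = torch.randn(2, NUM_CLASSES, 4, 4, requires_grad=True)      # N, C, H, W logits
labels = torch.randint(0, NUM_CLASSES, (2, 4, 4), dtype=torch.long)  # N, H, W class indices
labels[0, 0, 0] = IGNORE_INDEX  # void pixel: valid only if the loss ignores 255
labels[1, 0, 0] = 33            # hypothetical raw labelId that was never remapped

print(invalid_label_values(labels, NUM_CLASSES))                     # [33]
print(invalid_label_values(labels, NUM_CLASSES, ignore_index=-100))  # [33, 255]

# Stand-in for a proper labelId -> trainId mapping: send bad values to the ignore index.
clean = labels.clone()
clean[(clean >= NUM_CLASSES) & (clean != IGNORE_INDEX)] = IGNORE_INDEX

# Pixels equal to IGNORE_INDEX contribute nothing to the loss or the gradient.
criterion = nn.CrossEntropyLoss(ignore_index=IGNORE_INDEX)
loss = criterion(outputs, clean)
loss.backward()
print(loss.item())
```

Calling `invalid_label_values(labels, 20)` inside the training loop, in place of the `np.unique` print, shows the offending values directly. If 255 is the only one, constructing the criterion as `nn.CrossEntropyLoss(ignore_index=255)` should be enough. Other values usually mean the dataset returns labelIds instead of trainIds, which is better fixed in the dataset's label mapping than by sending them to the ignore index as above. Because CUDA errors are reported asynchronously, running with the environment variable `CUDA_LAUNCH_BLOCKING=1` helps confirm which line actually fails.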