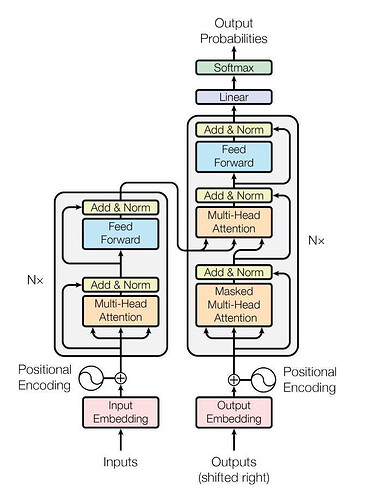

What is the meaning of outputs (shifted right) in this image?

And how would they be fed to nn.Transformer?

For example, in a chatbot project, the inputs may be: “what do you want to eat” and the outputs may be “I want to eat apple”.

Then outputs (shifted right) is “BOS I want to eat apple”, and we expect the transformer to predict “I want to eat apple EOS”.

BOS means beginning of sentence, and EOS means end of sentence.
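To make the shift concrete, here is a minimal sketch in plain Python of how the decoder input and the expected prediction line up. The token strings `<bos>` and `<eos>` and the helper `shift_right` are hypothetical names chosen for illustration; a real tokenizer may use different ones.

```python
# Hypothetical special tokens; actual names depend on the tokenizer in use.
BOS, EOS = "<bos>", "<eos>"

def shift_right(tokens):
    """Return (decoder_input, labels) for one target sentence."""
    decoder_input = [BOS] + tokens  # what the decoder is fed
    labels = tokens + [EOS]         # what it should predict at each position
    return decoder_input, labels

decoder_input, labels = shift_right("I want to eat apple".split())

# Each decoder position sees one token and is asked for the next one.
for seen, expected in zip(decoder_input, labels):
    print(f"{seen:>6} -> {expected}")
```

Both lists have the same length, so position i of the decoder input is paired with position i of the labels.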

What would be the way to feed the input and target to nn.Transformer, and then compute the loss?

For example, if we do:

x = nn.Transformer(10, 2) # 10 size embedding, 2 heads in nn.MultiheadAttention

x(input, target)

Suppose we are doing language translation, where we know the number of words in our vocabulary for both languages.

input -> 'I ate an apple'

and output in Kyrgyz language is

output -> 'Мен алма жедим'

then input would be something like,

input_embedding = nn.Embedding(number of words in English language in our vocabulary, 10)

input = input_embedding(torch.LongTensor([indices of words ('I', 'ate', 'an', 'apple')]).unsqueeze(1)) # unsqueeze(1) adds a batch dimension of size 1

input.shape -> torch.Size([4, 1, 10]) # 10 size embedding for four words, batch size 1
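As a runnable check of that shape, here is a small sketch. The toy vocabulary `en_vocab` is a made-up assumption; a real project would build the word-to-index mapping from its data.

```python
import torch
import torch.nn as nn

# Hypothetical toy vocabulary, for illustration only.
en_vocab = {"I": 0, "ate": 1, "an": 2, "apple": 3}

input_embedding = nn.Embedding(len(en_vocab), 10)

# Index tensor of shape (sequence length, batch size) = (4, 1).
src_ids = torch.tensor([[en_vocab[w]] for w in ["I", "ate", "an", "apple"]])
src = input_embedding(src_ids)

print(src_ids.shape)  # torch.Size([4, 1])
print(src.shape)      # torch.Size([4, 1, 10])
```

The (sequence, batch, embedding) layout matches what nn.Transformer expects when `batch_first` is left at its default of False.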

and target would be something like,

target_embedding = nn.Embedding(number of words in Kyrgyz language in our vocabulary, 10)

target = target_embedding(torch.LongTensor([????]).unsqueeze(1)) # which indices do we specify here? Could we give them randomly, or do we specify the indices of the words in the correct translation itself?

target.shape -> torch.Size([3, 1, 10])

and after

z = x(input, target)

we get a tensor of shape

z.shape -> torch.Size([3, 1, 10])

which represents the 3 words. Now we pass this through a linear layer:

l = nn.Linear(10, number of words in Kyrgyz language)

l(z).shape -> torch.Size([3, 1, number of words in Kyrgyz language])
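The shapes described so far can be checked with random tensors standing in for the embeddings. This is only a sketch: `kyrgyz_vocab_size = 50` is a made-up number, the layer counts are reduced to keep it fast, and the causal `tgt_mask` is my assumption about how the decoder would normally be called during training, not something stated above.

```python
import torch
import torch.nn as nn

kyrgyz_vocab_size = 50  # hypothetical vocabulary size

# One encoder and one decoder layer keep the sketch small (the default is 6 each).
model = nn.Transformer(d_model=10, nhead=2, num_encoder_layers=1,
                       num_decoder_layers=1, dim_feedforward=32)
to_vocab = nn.Linear(10, kyrgyz_vocab_size)

src = torch.rand(4, 1, 10)  # (source length, batch size, embedding size)
tgt = torch.rand(3, 1, 10)  # (target length, batch size, embedding size)

# Causal mask so that target position i only attends to positions <= i.
tgt_mask = model.generate_square_subsequent_mask(tgt.size(0))

z = model(src, tgt, tgt_mask=tgt_mask)
logits = to_vocab(z)

print(z.shape)       # torch.Size([3, 1, 10])
print(logits.shape)  # torch.Size([3, 1, 50])
```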

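Then we pass this through softmax to get probabilities, or we could pass it directly to CrossEntropyLoss. The target for this cross-entropy loss would be the indices of the words in the correct translation, like:

loss_fn = nn.CrossEntropyLoss()

loss_fn(l(z).view(-1, number of words in Kyrgyz language), torch.LongTensor([indices of words in the correct translation])) # logits of shape [3, number of words in Kyrgyz language], class indices of shape [3]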
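Putting the pieces together, here is one possible end-to-end sketch of a single training step under the shifted-right scheme described earlier. Everything numeric in it is an assumption for illustration: the vocabulary sizes, the token ids, and the BOS/EOS ids. It also leaves out positional encoding, which nn.Transformer does not add by itself. Because BOS and EOS are added, the target length becomes 4 rather than 3.

```python
import torch
import torch.nn as nn

# Hypothetical sizes and token ids, chosen only for illustration.
src_vocab_size, tgt_vocab_size, d_model = 20, 20, 10
BOS, EOS = 1, 2

src_ids = torch.tensor([[5], [6], [7], [8]])  # 'I ate an apple', shape (4, 1)
sentence = torch.tensor([[9], [10], [11]])    # 'Мен алма жедим', shape (3, 1)

decoder_ids = torch.cat([torch.tensor([[BOS]]), sentence])  # BOS + sentence
labels = torch.cat([sentence, torch.tensor([[EOS]])])       # sentence + EOS

src_embedding = nn.Embedding(src_vocab_size, d_model)
tgt_embedding = nn.Embedding(tgt_vocab_size, d_model)
model = nn.Transformer(d_model=d_model, nhead=2, num_encoder_layers=1,
                       num_decoder_layers=1, dim_feedforward=32)
to_vocab = nn.Linear(d_model, tgt_vocab_size)

# Causal mask so each decoder position only sees earlier target tokens.
tgt_mask = model.generate_square_subsequent_mask(decoder_ids.size(0))

z = model(src_embedding(src_ids), tgt_embedding(decoder_ids), tgt_mask=tgt_mask)
logits = to_vocab(z)  # shape (4, 1, tgt_vocab_size)

# CrossEntropyLoss takes (N, C) logits and (N,) class indices, not embeddings.
loss_fn = nn.CrossEntropyLoss()
loss = loss_fn(logits.reshape(-1, tgt_vocab_size), labels.reshape(-1))
loss.backward()

print(logits.shape, loss.item())
```

The decoder is fed the embedded `decoder_ids`, while the loss compares the logits against the integer `labels`, so the two tensors play different roles even though they come from the same sentence.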