Hello everyone!

I am a medical resident who really enjoys learning about ML. I have spent a lot of time reading here and want to apply it in my field, medical imaging. We have an exam called “Datscan scintigraphy”, a metabolic view of the brain used to see whether a patient has Parkinson's disease or not (this is a simplification, but enough to understand).

The problem is that it is a long exam, about 30 minutes, because the “camera” turns around the patient to acquire 120 projections. Some of our elderly patients cannot tolerate it, and it is frustrating not to be able to help them with a diagnosis. This is why I am building a CNN to classify scans as “Normal Datscan” or “Abnormal Datscan” using only the first 2 projections (anterior and posterior, numbers 0 and 60), with a final output that is a “probability of abnormal datscan” between 0 and 1.

My goal is that, once I have this probability, I can move the decision threshold to make the classifier more sensitive or more specific, depending on what we need.
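To make the threshold idea concrete, here is a minimal sketch of how sensitivity and specificity move with the cutoff. The function name `sensitivity_specificity` and the toy probabilities and labels are made up for illustration; they are not results from the model.

```python
def sensitivity_specificity(probs, labels, threshold):
    """Return (sensitivity, specificity) when 'abnormal' means prob >= threshold.

    labels: 1 = abnormal, 0 = normal.
    """
    tp = fn = tn = fp = 0
    for p, y in zip(probs, labels):
        pred = 1 if p >= threshold else 0
        if y == 1 and pred == 1:
            tp += 1
        elif y == 1 and pred == 0:
            fn += 1
        elif y == 0 and pred == 0:
            tn += 1
        else:
            fp += 1
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


# Hypothetical toy data, only to show the trade-off
probs = [0.10, 0.35, 0.40, 0.65, 0.80, 0.90]
labels = [0, 0, 1, 0, 1, 1]

for t in (0.3, 0.5, 0.7):
    sens, spec = sensitivity_specificity(probs, labels, t)
    print(f"threshold={t:.1f}  sensitivity={sens:.2f}  specificity={spec:.2f}")
```

On this toy data, lowering the threshold to 0.3 catches every abnormal case at the cost of more false positives, while raising it to 0.7 does the opposite.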

I built a dataset of 887 datscans, each converted to an npy array of 120 matrices of 128x128 pixels, and I only use 2 of them (numbers 0 and 60). The images are grayscale, so each image has 1 channel.
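For illustration, the selection of the two projections can be sketched as below. This is a minimal sketch under assumptions: each scan is stored as an array of shape (120, 128, 128), the function name `select_projections` is hypothetical, and a random array stands in for a real scan loaded with `np.load`. Stacking the two projections along the first axis gives the 2-channel input that a first layer such as `nn.Conv2d(2, ...)` expects.

```python
import numpy as np


def select_projections(scan, indices=(0, 60)):
    """Pick the given projections from a (120, 128, 128) scan.

    Returns a float32 array of shape (len(indices), 128, 128).
    """
    scan = np.asarray(scan)
    if scan.ndim != 3:
        raise ValueError(f"expected a 3D array, got shape {scan.shape}")
    return scan[list(indices)].astype(np.float32)


# Synthetic stand-in for one scan (assumption: real scans have this shape)
scan = np.random.rand(120, 128, 128)
x = select_projections(scan)
print(x.shape)  # (2, 128, 128)
```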

I tried different architectures; here is the VGG-style one, trained with BCEWithLogitsLoss:

import torch
import torch.nn as nn
import torch.nn.functional as F


class ReseauConvolutionSigmo(nn.Module):
    def __init__(self):
        super(ReseauConvolutionSigmo, self).__init__()
        # Block 1 (2 input channels)
        self.conv1a = nn.Conv2d(2, 64, 3, stride=1)
        self.conv1b = nn.Conv2d(64, 64, 5, stride=1)
        self.pool1 = nn.MaxPool2d(2, 2)
        # Block 2
        self.conv2a = nn.Conv2d(64, 128, 3, stride=1)
        self.conv2b = nn.Conv2d(128, 128, 3, stride=1)
        self.pool2 = nn.MaxPool2d(2, 2)
        # Block 3
        self.conv3a = nn.Conv2d(128, 256, 3, stride=1)
        self.conv3b = nn.Conv2d(256, 256, 3, stride=1)
        self.pool3 = nn.MaxPool2d(2, 2)
        # 256 channels x 12 x 12 = 36864 features for a 128x128 input
        self.fc1 = nn.Linear(36864, 84)
        # Single output: one logit per scan, for BCEWithLogitsLoss
        self.fc2 = nn.Linear(84, 1)

    def forward(self, x):
        x = x.float()
        x = self.conv1a(x)
        x = F.relu(x)
        x = self.conv1b(x)
        x = F.relu(x)
        x = self.pool1(x)
        x = self.conv2a(x)
        x = F.relu(x)
        x = self.conv2b(x)
        x = F.relu(x)
        x = self.pool2(x)
        x = self.conv3a(x)
        x = F.relu(x)
        x = self.conv3b(x)
        x = F.relu(x)
        x = self.pool3(x)
        x = torch.flatten(x, 1)  # Flatten the feature maps
        try:
            x = F.relu(self.fc1(x))
        except RuntimeError as e:
            # Debugging aid: report the input size fc1 should have
            e = str(e)
            if e.endswith("Output size is too small"):
                print("Image size is too small.")
            elif "shapes cannot be multiplied" in e:
                required_shape = e[e.index("x") + 1:].split(" ")[0]
                print(f"Linear layer needs to have size: {required_shape}")
            else:
                print(f"Error other: {e}")
            # Re-raise so the failure is not hidden behind a later shape error in fc2
            raise
        x = self.fc2(x)
        return x
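As a sanity check on the `nn.Linear(36864, 84)` input size, the feature-map size can be traced by hand. The sketch below is plain Python arithmetic, assuming a 128x128 input, no padding, and the kernel sizes from the model above; the helper names `conv_out` and `pool_out` are made up.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a square convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Output side length of max pooling without padding (floor mode)."""
    return (size - kernel) // stride + 1


size = 128
# Conv kernel sizes of the three blocks: (conv a, conv b), each followed by a 2x2 pool
for kernels in ((3, 5), (3, 3), (3, 3)):
    for k in kernels:
        size = conv_out(size, k)
    size = pool_out(size)

flat_features = 256 * size * size  # 256 channels after conv3b
print(size, flat_features)  # 12 36864
```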

import torch.optim as optim

# network, device, train() and test() are defined elsewhere (not shown)
n_epochs = 100
criterion = nn.BCEWithLogitsLoss()
optimizer = optim.Adam(network.parameters(), lr=0.001)

train_losses = []
train_counter = []
test_losses = []
test_accuracy = []

network.to(device)

print('******* Evaluation initiale')
test()
for epoch in range(0, n_epochs):
    print('******* Epoch ', epoch)
    train()
    test()

But when I do so, the outputs for the 6 elements of the batch all quickly converge to the same value:

******* Evaluation initiale

test loss= 0.7000894740570424

Output tensor([[0.0826],

[0.0827],

[0.0827],

[0.0825],

[0.0827],

[0.0827]])

Predicted tensor([[0.], [0.], [0.],[0.],[0.], [0.]])

Datscan tensor([[0.],[0.], [0.],[1.],[0.],[1.]])

Accuracy in test 57.36434108527132 %

******* Epoch 0

train loss= 0.6777993538058721

test loss= 0.6830593472303346

Output tensor([[-0.3489],

[-0.3479],

[-0.3391],

[-0.3410],

[-0.3442],

[-0.3469]])

Predicted tensor([[0.], [0.], [0.],[0.],[0.], [0.]])

Datscan tensor([[1.], [0.],[0.],[0.], [0.],[0.]])

Accuracy in test 57.36434108527132 %

******* Epoch 1

train loss= 0.7050089922088844

test loss= 0.6875958317934081

Output tensor([[-0.0826],

[-0.0826],

[-0.0826],

[-0.0826],

[-0.0826],

[-0.0826]])

Predicted tensor([[0.], [0.], [0.],[0.],[0.], [0.]])

Datscan tensor([[0.], [0.],[1.],[1.], [0.], [1.]])

Accuracy in test 57.751937984496124 %

******* Epoch 2

train loss= 0.6914097838676893

test loss= 0.6917881480483121

Output tensor([[-0.0191],

[-0.0191],

[-0.0191],

[-0.0191],

[-0.0191],

[-0.0191]])

Predicted tensor([[0.], [0.], [0.],[0.],[0.], [0.]])

Datscan tensor([[0.],[1.], [1.],[1.],[0.],[1.]])

Accuracy in test 57.36434108527132 %

#### Even at late epoch:

******* Epoch 40

train loss= 0.6704580792440817

test loss= 0.6978785312452982

Output tensor([[-0.6284],

[-0.6284],

[-0.6284],

[-0.6284],

[-0.6284],

[-0.6284]])

Predicted tensor([[0.], [0.], [0.],[0.],[0.], [0.]])

Datscan tensor([[0.],[0.], [0.],[0.],[1.],[1.]])

correct 147

total 258

Accuracy in test 56.97674418604651 %

So as you can see, the training loss barely decreases, and the accuracy is stuck at 57%.
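One way to read these numbers: a loss around 0.68 to 0.69 is what binary cross-entropy gives when the same probability is predicted for every scan. The sketch below computes that baseline. It assumes 148 normal and 110 abnormal scans in the test set, which is inferred from the 57.36% accuracy (148 of 258) obtained when every scan is predicted normal; the helper name `constant_bce` is made up.

```python
import math


def constant_bce(p_abnormal, n_normal, n_abnormal):
    """Mean binary cross-entropy when every scan gets the same predicted probability."""
    n = n_normal + n_abnormal
    loss = -(n_abnormal * math.log(p_abnormal) + n_normal * math.log(1.0 - p_abnormal))
    return loss / n


# Assumption: class counts inferred from the accuracy in the log above
n_normal, n_abnormal = 148, 110

# Predicting 0.5 for every scan gives ln(2)
loss_half = constant_bce(0.5, n_normal, n_abnormal)
print(round(loss_half, 4))  # 0.6931

# The best constant prediction is the fraction of abnormal scans
prevalence = n_abnormal / (n_normal + n_abnormal)
loss_prevalence = constant_bce(prevalence, n_normal, n_abnormal)
print(round(loss_prevalence, 4))
```

The test losses in the log above (0.683 to 0.700) sit at or slightly above this constant-prediction baseline, which is consistent with the network producing nearly the same output for every input.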

I was getting kind of desperate, so I tried another criterion, CrossEntropyLoss:

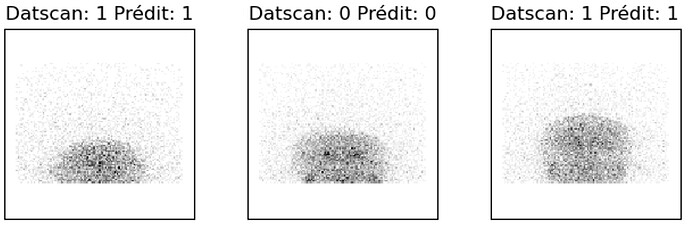

That worked much better, with a final accuracy of about 79%. Here, for example, are the last 3 epochs:

******* Epoch 47

train loss= 0.0002839015607657339

test loss= 1.7087745488627646

correct 203

total 258

Accuracy in test 78.68217054263566 %

Sortie du réseau :

tensor([[-13.9290, 4.8103],

[ 3.7896, -9.5477],

[ -3.8057, -0.1662],

[ 1.8018, -3.5083],

[ -3.6199, -2.2624],

[ 6.0148, -12.3137]])

Datscan : tensor([1, 1, 0, 0, 1, 0])

Prédiction : tensor([1, 0, 1, 0, 1, 0])

******* Epoch 48

train loss= 0.0002534455537248284

test loss= 1.7621866015008938

correct 201

total 258

Accuracy in test 77.90697674418605 %

Sortie du réseau :

tensor([[-24.7145, 7.8902],

[-21.3964, 8.6213],

[ 2.1064, -0.7032],

[ -1.3331, -0.8390],

[ -5.9108, 4.1722],

[-14.4751, 4.5746]])

Datscan : tensor([1, 1, 0, 1, 1, 1])

Prédiction : tensor([1, 1, 0, 1, 1, 1])

******* Epoch 49

train loss= 0.00022697989463199136

test loss= 1.6694575882692397

correct 204

total 258

Accuracy in test 79.06976744186046 %

Sortie du réseau :

tensor([[ -9.4081, 1.5622],

[ -0.1025, 0.0649],

[-26.1112, 8.3820],

[-12.4035, 3.2135],

[ -6.0753, 0.1667],

[ 9.9138, -9.7220]])

Datscan : tensor([0, 0, 1, 1, 1, 0])

Prédiction : tensor([1, 1, 1, 1, 1, 0])

Sortie du réseau :

tensor([[ 14.8245, -11.1206],

[ 4.8293, -2.1229],

[-19.1812, 4.6617],

[ -7.9391, 3.3256],

[ 30.0683, -26.7278],

[-11.0685, 3.2678]])

Datscan : tensor([0, 1, 1, 1, 0, 1])

Prédiction : tensor([0, 0, 1, 1, 0, 1])

So here are my questions:

- What makes BCEWithLogitsLoss train so badly even though this is a binary classification problem? And how come all 6 elements of the batch quickly end up with the same output? I tried changing the learning rate, without a clear improvement; maybe it is the optimizer?

- From my understanding, the outputs in the BCEWithLogitsLoss version are the 6 logits of the batch (one per scan), and the model predicts “normal” if the output is negative and “abnormal” if it is positive. But they are stuck in the negatives, so everything is predicted normal. Since my goal is a “probability of abnormal datscan” output, if this model had a better accuracy, I could just pass this output through a sigmoid to get a 0 to 1 probability, right?

- The outputs in the CrossEntropyLoss version are 6 x 2 tensors, representing the “confidence” of being in the left class (“normal”) or the right class (“abnormal”), and the higher value gives the predicted class. For example:

tensor([[ 14.8245, -11.1206], = predicted normal

[ 4.8293, -2.1229], = predicted normal

[-19.1812, 4.6617], = predicted abnormal

[ -7.9391, 3.3256], = predicted abnormal

[ 30.0683, -26.7278], = predicted normal

[-11.0685, 3.2678]]) = predicted abnormal

However, while it has a better accuracy, the problem is: how can I turn these outputs into a “probability of being abnormal”?
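For concreteness, the two conversions mentioned in the questions above can be sketched as follows. This is a minimal sketch of the standard sigmoid and softmax formulas, written in plain Python so it runs without PyTorch; the helper names are made up, and it assumes that column 0 is “normal” and column 1 is “abnormal”, as described above. For two classes, the softmax probability of “abnormal” equals the sigmoid of the difference between the two logits.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def p_abnormal_from_two_logits(z_normal, z_abnormal):
    """Softmax probability of the 'abnormal' class from two-class logits."""
    m = max(z_normal, z_abnormal)  # subtract the max for numerical stability
    e0 = math.exp(z_normal - m)
    e1 = math.exp(z_abnormal - m)
    return e1 / (e0 + e1)


# Single-logit model (BCEWithLogitsLoss): one output from the epoch 40 log
p_single = sigmoid(-0.6284)
print(f"single logit -0.6284 -> P(abnormal) = {p_single:.3f}")

# Two-logit model (CrossEntropyLoss): rows taken from the example above
rows = [(14.8245, -11.1206), (4.8293, -2.1229), (-7.9391, 3.3256)]
for z0, z1 in rows:
    p = p_abnormal_from_two_logits(z0, z1)
    # Same value as sigmoid(z1 - z0)
    print(f"logits ({z0}, {z1}) -> P(abnormal) = {p:.6f}, sigmoid(diff) = {sigmoid(z1 - z0):.6f}")
```

With logits as large as the ones in the log, these probabilities come out very close to 0 or 1, so moving the threshold would change few predictions.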

Thank you very much for your help. I look forward to reading your thoughts; it is always extremely interesting!