Hi

The output of my model is just float64 values, and there are no more than 2m of them in the list.
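
As a rough sanity check on that size, here is a minimal sketch of the arithmetic. It assumes "2m" means about 2 million values stored contiguously at 8 bytes each (as in a NumPy array or a tensor); the function name `float64_buffer_bytes` is just illustrative.

```python
# Rough memory footprint of the stored model outputs.
# Assumption: about 2 million float64 values, 8 bytes each, stored contiguously.
BYTES_PER_FLOAT64 = 8


def float64_buffer_bytes(n_values: int) -> int:
    """Return the raw size in bytes of n_values float64 numbers."""
    return n_values * BYTES_PER_FLOAT64


n_values = 2_000_000
total = float64_buffer_bytes(n_values)
print(f"{total} bytes = {total / 1e6:.1f} MB = {total / 2**20:.2f} MiB")
# prints: 16000000 bytes = 16.0 MB = 15.26 MiB
```

A plain Python list of float objects carries extra per-object overhead, but even so the outputs alone should be far too small to explain a memory problem.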

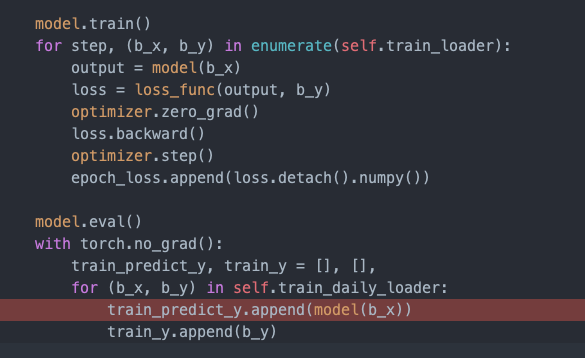

Actually, I did try the following code, and the memory usage is fine, which may imply that something is wrong in the model.

Here is the source code of my naive baby model:

    from collections import OrderedDict

    import torch
    import torch.nn as nn
    import torch.nn.functional as F


    class InceptionV1(nn.Module):
        def __init__(self, in_channels, channels_7x7, out_channels):
            super(InceptionV1, self).__init__()
            self.features = nn.Sequential(
                OrderedDict([
                    # the output is (4*out_channels, H, W)
                    ('inceptionc', InceptionC(in_channels, channels_7x7, out_channels=out_channels)),
                    # (128, H, W)
                    ('basicConv2d_1x1', BasicConv2d(out_channels * 4, 128, kernel_size=1)),
                    # (128, 20, 24)
                    ('maxpool', nn.MaxPool2d(kernel_size=2, stride=2)),
                    # (256, 10, 12)
                    ('basicConv2d_2x2_0', BasicConv2d(128, out_channels=256, kernel_size=2, stride=2)),
                    # (512, 5, 6)
                    ('basicConv2d_2x2_1', BasicConv2d(256, out_channels=512, kernel_size=2, stride=2)),
                    # (512, 1, 1)
                    ('avg_pool', nn.AvgPool2d(kernel_size=(5, 6)))
                ])
            )
            self.linear = nn.Sequential(
                nn.Linear(512, 128),
                nn.ReLU(),
                nn.Linear(128, 1),
                nn.Tanh()
            )

        def forward(self, *input):
            # Only the first positional argument is used; reshape it to (N, 3, 40, 48).
            features = self.features(input[0].view(-1, 3, 40, 48))
            # Flatten to (N, 512); Tanh gives (-1, 1), so the label lies in (-3, 3).
            label = 3 * self.linear(features.view(features.size(0), -1))
            return label


    class InceptionC(nn.Module):
        def __init__(self, in_channels, channels_7x7, out_channels):
            super(InceptionC, self).__init__()
            self.branch1x1 = BasicConv2d(in_channels, out_channels, kernel_size=1)

            c7 = channels_7x7
            self.branch7x7 = nn.Sequential(
                BasicConv2d(in_channels, c7, kernel_size=1),
                BasicConv2d(c7, c7, kernel_size=(1, 7), padding=(0, 3)),
                BasicConv2d(c7, out_channels, kernel_size=(7, 1), padding=(3, 0))
            )
            self.branch7x7dbl = nn.Sequential(
                BasicConv2d(in_channels, c7, kernel_size=1),
                BasicConv2d(c7, c7, kernel_size=(7, 1), padding=(3, 0)),
                BasicConv2d(c7, c7, kernel_size=(1, 7), padding=(0, 3)),
                BasicConv2d(c7, c7, kernel_size=(7, 1), padding=(3, 0)),
                BasicConv2d(c7, out_channels, kernel_size=(1, 7), padding=(0, 3))
            )
            self.branch_pool = BasicConv2d(in_channels, out_channels, kernel_size=1)

        def forward(self, x):
            branch1x1 = self.branch1x1(x)
            branch7x7 = self.branch7x7(x)
            branch7x7dbl = self.branch7x7dbl(x)
            branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
            branch_pool = self.branch_pool(branch_pool)
            # Concatenate the four branches along the channel dimension.
            outputs = [branch1x1, branch7x7, branch7x7dbl, branch_pool]
            return torch.cat(outputs, 1)


    class BasicConv2d(nn.Module):
        """Conv2d (no bias) followed by BatchNorm2d and ReLU."""

        def __init__(self, in_channels, out_channels, **kwargs):
            super(BasicConv2d, self).__init__()
            self.conv = nn.Conv2d(in_channels, out_channels, bias=False, **kwargs)
            self.bn = nn.BatchNorm2d(out_channels, eps=0.001)

        def forward(self, x):
            x = self.conv(x)
            x = self.bn(x)
            return F.relu(x, inplace=True)
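
To double-check the shape comments in `InceptionV1.features`, here is a minimal pure-Python sketch that traces the spatial size (H, W) through each layer. It uses the standard output-size formula for convolution and pooling, assuming dilation 1 and floor rounding; the helper names `conv_out` and `trace_shapes` are hypothetical and not part of the model.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length along one axis for a conv/pool layer (dilation 1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_shapes(h=40, w=48):
    """Return (layer_name, H, W) after each stage of InceptionV1.features."""
    shapes = []

    # InceptionC: every branch preserves H and W.
    # A size-7 kernel with padding 3, and the 3x3 avg pool with padding 1, keep the size.
    assert conv_out(h, 7, padding=3) == h and conv_out(w, 7, padding=3) == w
    assert conv_out(h, 3, padding=1) == h and conv_out(w, 3, padding=1) == w
    shapes.append(("inceptionc", h, w))

    # 1x1 convolution: size unchanged.
    h, w = conv_out(h, 1), conv_out(w, 1)
    shapes.append(("basicConv2d_1x1", h, w))

    # The max pool and the two 2x2 stride-2 convolutions each halve H and W.
    for name in ("maxpool", "basicConv2d_2x2_0", "basicConv2d_2x2_1"):
        h, w = conv_out(h, 2, stride=2), conv_out(w, 2, stride=2)
        shapes.append((name, h, w))

    # AvgPool2d(kernel_size=(5, 6)): the stride defaults to the kernel size.
    h, w = conv_out(h, 5, stride=5), conv_out(w, 6, stride=6)
    shapes.append(("avg_pool", h, w))
    return shapes


for name, h, w in trace_shapes():
    print(f"{name}: H={h}, W={w}")
```

For a 40x48 input this gives 40x48, 40x48, 20x24, 10x12, 5x6 and 1x1, which matches the comments, so the flattened feature vector has 512 entries as `nn.Linear(512, 128)` expects.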