Hello,

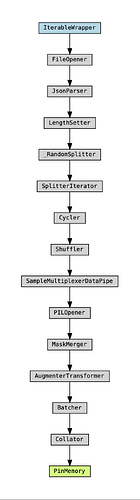

I am currently training a segmentation model and have incorporated TorchData DataPipes into my pipeline (please refer to the pipeline graph above). I am using DataLoader2 at the end of this pipeline and have observed some peculiar behavior with and without the multiprocessing reading service. I’d appreciate some insights and guidance on the correct usage and potential solutions.

Note: I am using a single GPU for training.

TorchData version: 0.6.1

Case 1: With Reading Service

Memory Consumption: I’ve noticed a significant increase in memory consumption at each epoch. For example, if about 25 GB is occupied during epoch 1, this roughly doubles to more than 50 GB at the start of epoch 2, and so on. This growth in memory usage concerns me.

Epoch Completion Time: The time taken for each epoch to complete is approximately 700 seconds.

Case 2: Without Reading Service

Memory Consumption: Memory stabilizes after a certain point and does not show the increase observed in Case 1 (i.e., the memory occupied in the first epoch does not double but stays roughly constant across all epochs).

Epoch Completion Time: The epoch completion time, however, roughly doubles to around 1500 seconds.

Given these observations, I have a few questions:

- Correct Usage of Reading Services with DataLoader2: Is there a recommended or best-practice way to use the reading service with DataLoader2 that keeps memory usage under control without compromising too much on speed?

- Usage of initialize_iteration() and finalize() Methods: I’ve come across the initialize_iteration() and finalize() methods, which can be used at the beginning and end of each epoch, respectively. I am not sure whether these could solve the problem above. If they can, could someone provide guidance or examples on how to use them correctly with DataLoader2 and TorchData DataPipes?
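
To make the second question concrete, here is a minimal sketch of how I understand the loader is meant to be driven. It is not my actual training code. It rests on an assumption I would like confirmed: that in TorchData 0.6.x, DataLoader2 calls the reading service's initialize_iteration() itself each time a new iterator is created and calls finalize() when shutdown() is invoked, so user code only builds the loader once, iterates it every epoch, and shuts it down at the end. The names `build_loader`, `run_epochs`, and `step_fn` are hypothetical, and `num_workers=4` is just a placeholder value.

```python
from typing import Any, Callable, Iterable


def build_loader(datapipe: Any, num_workers: int = 4) -> Any:
    """Wrap a datapipe in DataLoader2 with a multiprocessing reading service."""
    # Imported lazily so that run_epochs below can be exercised without torchdata.
    from torchdata.dataloader2 import DataLoader2, MultiProcessingReadingService

    reading_service = MultiProcessingReadingService(num_workers=num_workers)
    return DataLoader2(datapipe, reading_service=reading_service)


def run_epochs(
    loader: Iterable[Any],
    num_epochs: int,
    step_fn: Callable[[Any], None],
) -> int:
    """Iterate the SAME loader object once per epoch, then shut it down once."""
    batches_seen = 0
    try:
        for _ in range(num_epochs):
            # Assumption: creating a new iterator here is what triggers the
            # per-epoch setup inside DataLoader2; nothing is called by hand.
            for batch in loader:
                step_fn(batch)
                batches_seen += 1
    finally:
        # Assumption: shutdown() is where worker processes are released.
        # Plain iterables without a shutdown() method are left alone.
        shutdown = getattr(loader, "shutdown", None)
        if callable(shutdown):
            shutdown()
    return batches_seen
```

With a real pipeline, I would call this as `run_epochs(build_loader(datapipe), num_epochs, train_step)`, where `datapipe` is the end of the graph shown above and `train_step` stands in for my training step. If re-creating the loader or the reading service every epoch is the wrong pattern (or the right one), that is exactly what I am hoping to learn.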

I’d be grateful for any insights, suggestions, or references to relevant documentation or threads that might help address these issues. Thank you in advance for your time and assistance!

Best regards,

Priyanshu