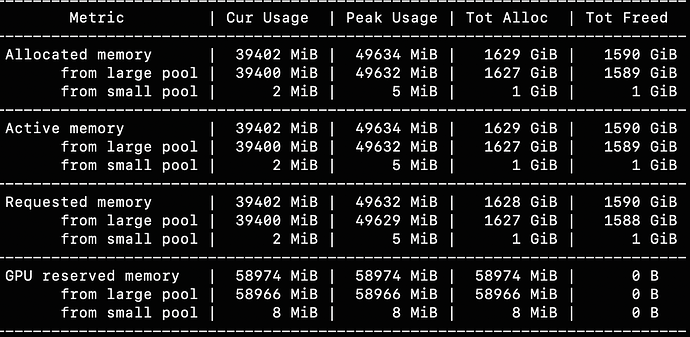

Hello! I am trying to run inference with the CodeGemma model from Hugging Face, but when I call `model.generate(**inputs)`, peak GPU memory usage rises from 39 GB to 49 GB (measured with the torch profiler), regardless of the value I set for max_token_len. I understand that inference needs to hold the model's activations and the context for an input of roughly 4096 tokens, but I find it hard to believe that this alone adds 10 GB to memory usage. Can someone explain how this happens? Thank you in advance.
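To make the expectation concrete, here is a rough back-of-the-envelope sketch of two buffers that grow with context length during generation: the key/value cache and the logits tensor produced for the prompt. The function names and all numeric values below (layer count, KV heads, head dimension, vocabulary size, dtypes) are illustrative placeholders, not values read from the actual CodeGemma config, and the real peak also depends on intermediate activations and allocator behaviour that this sketch ignores.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim,
                   seq_len, batch_size, bytes_per_elem):
    """Rough size of a key/value cache: one K and one V tensor per layer."""
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_elem)


def logits_bytes(batch_size, seq_len, vocab_size, bytes_per_elem):
    """Rough size of a logits tensor covering every position of the prompt."""
    return batch_size * seq_len * vocab_size * bytes_per_elem


def to_gib(num_bytes):
    """Convert bytes to GiB for easier reading."""
    return num_bytes / 1024 ** 3


if __name__ == "__main__":
    # Placeholder values: replace with the numbers from model.config.
    seq_len = 4096
    kv = kv_cache_bytes(num_layers=28, num_kv_heads=16, head_dim=256,
                        seq_len=seq_len, batch_size=1, bytes_per_elem=2)
    # Assumes logits are held in 32-bit floats for the whole prompt.
    lg = logits_bytes(batch_size=1, seq_len=seq_len,
                      vocab_size=256_000, bytes_per_elem=4)
    print(f"KV cache estimate: {to_gib(kv):.2f} GiB")
    print(f"Logits estimate:   {to_gib(lg):.2f} GiB")
```

Plugging in the real config values and comparing the result with the profiler output should show whether these two buffers account for a meaningful part of the 10 GB, or whether the increase comes from somewhere else.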