Hello,

I am trying to perform Supervised Contrastive Learning using https://github.com/HobbitLong/SupContrast, which was created by one of the co-authors of the original paper.

I am trying to use my own dataset with 256x256 px input images (instead of the original 32x32). Hence, I set the `size` parameter of main_supcon.py to 256, plugged in my dataset, and ran the script. However, as soon as I start feeding batches (of size 64) into the newly created network, I receive the

RuntimeError: CUDA out of memory.

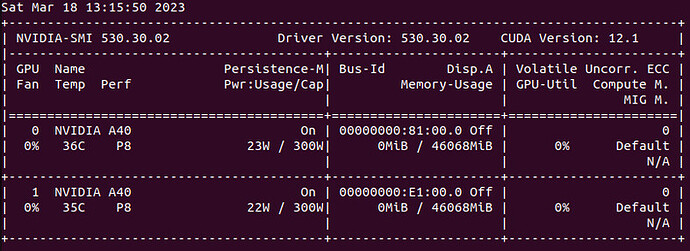

error. This seems suspicious to me, as I am using a cluster with 2x Nvidia A40 GPUs with a total of 90 GB of VRAM, and from the source code I got the impression that the script can utilize several GPUs. Moreover, even if only one GPU were in use, the input would take approximately 2x64x256x256x3 = 25165824 B ≈ 25 GB of VRAM.

My question, hence, is: does anyone know what may cause these out-of-memory errors and how to fix them?

I am using PyTorch built for CUDA 11.8.

Thank you,

Adam

Could you verify whether this is indeed the case, e.g. whether DistributedDataParallel or any model-sharding approach is used?
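One quick way to check is to inspect the model object before training starts. The helper below is a hypothetical sketch (`find_parallel_wrappers` is not part of SupContrast or PyTorch): it walks the module tree and reports any `nn.DataParallel` or `DistributedDataParallel` wrappers. Keep in mind that `nn.DataParallel` replicates the full model on every GPU and only splits the batch, so it does not turn two cards into a single 90 GB device.

```python
from __future__ import annotations

import torch
import torch.nn as nn
from torch.nn.parallel import DistributedDataParallel


def find_parallel_wrappers(model: nn.Module) -> list[tuple[str, str]]:
    """Return (submodule_name, wrapper_type) for every DP/DDP wrapper inside `model`."""
    found = []
    for name, module in model.named_modules():
        # DistributedDataParallel is not a subclass of DataParallel, so check both.
        if isinstance(module, DistributedDataParallel):
            found.append((name or "<root>", "DistributedDataParallel"))
        elif isinstance(module, nn.DataParallel):
            found.append((name or "<root>", "DataParallel"))
    return found


if __name__ == "__main__":
    print("visible GPUs:", torch.cuda.device_count())
    # Toy stand-in (hypothetical); pass the model built by the training script instead.
    toy = nn.Module()
    toy.encoder = nn.DataParallel(nn.Linear(8, 4))
    toy.head = nn.Linear(4, 2)
    print(find_parallel_wrappers(toy))  # [('encoder', 'DataParallel')]
```

If the list is empty, or `torch.cuda.device_count()` reports 1, the whole batch is being processed on a single GPU.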

25165824 bytes is ~25 MB, not GB. Assuming this shape represents the model input, you would have to multiply it by 4, since each value would be stored as float32 in the default setup (4 bytes per value), which gives ~100 MB.
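The arithmetic can be checked with a few lines of plain Python. This is a minimal sketch that reads the leading 2 in the original formula as the two augmented views per image (an assumption) and stores every value as float32:

```python
import math


def tensor_bytes(shape, bytes_per_element=4):
    """Memory needed for a dense tensor of `shape` (float32 = 4 bytes per value)."""
    return math.prod(shape) * bytes_per_element


# Assumed layout: (2 views * batch of 64) x 3 channels x 256 x 256 pixels.
input_shape = (2 * 64, 3, 256, 256)
n_values = math.prod(input_shape)    # number of elements
n_bytes = tensor_bytes(input_shape)  # float32 storage

print(f"{n_values:,} values -> {n_bytes / 1e6:.1f} MB ({n_bytes / 2**20:.0f} MiB)")
# prints: 25,165,824 values -> 100.7 MB (96 MiB)
```

So the input batch itself is far too small to explain an out-of-memory error on a 45 GB card.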

However, the input usually uses the least amount of memory, while the model parameters and the intermediate forward activations (which are needed for the gradient calculation) might use significantly more. The gradients as well as the optimizer states (if applicable) will also increase the memory usage.
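To illustrate why activations tend to dominate, here is a rough sketch that sizes a single feature map. The architecture numbers are assumptions, not taken from the repository: a CIFAR-style ResNet-50 stem that keeps the full input resolution in the first stage (stride-1 first convolution, no max-pool), whose bottleneck blocks output 256 channels, and a batch of 128 images (two augmented views of 64). Under those assumptions, one such activation grows 64x when the input goes from 32x32 to 256x256, and autograd keeps many tensors of this size alive until the backward pass.

```python
def feature_map_bytes(batch, channels, height, width, bytes_per_element=4):
    """Size of one float32 activation tensor of shape (batch, channels, height, width)."""
    return batch * channels * height * width * bytes_per_element


BATCH = 2 * 64   # assumption: two augmented views per image, batch size 64
CHANNELS = 256   # assumption: channels of an early, full-resolution ResNet-50 stage

for side in (32, 256):
    gb = feature_map_bytes(BATCH, CHANNELS, side, side) / 1e9
    print(f"{side}x{side} input: one {CHANNELS}-channel feature map ~ {gb:.2f} GB")
```

This only estimates one tensor; the actual peak for a given model and batch size is best measured, e.g. with `torch.cuda.max_memory_allocated()` after a single training step.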