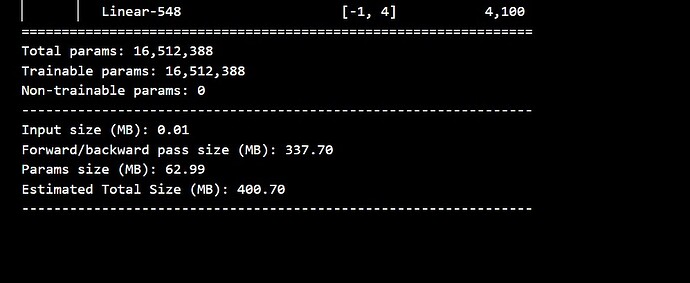

I ran into a memory problem while training a model. Because my computer has little memory, I monitored memory usage during training. I found that as soon as training began, memory usage jumped by about 4 GB, but the parameters take up only ~0.5 GB (estimated with torchsummary). So where does the extra 3.5 GB come from? I am sure it is not caused by the data loader, so this confuses me a lot.

I guess this memory increase could come from the intermediate forward activations which are needed for the gradient computation. You could take a look at this post to get an estimation of the memory usage.
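As a rough illustration of why activations can outweigh the parameters, here is a minimal back-of-the-envelope sketch. The layer output shapes and the batch size are made-up placeholders, not taken from your model, and it assumes float32 tensors and that every listed output is kept for the backward pass:

```python
from math import prod

BYTES_PER_FLOAT32 = 4  # assumption: activations are stored as float32


def activation_bytes(output_shapes, bytes_per_element=BYTES_PER_FLOAT32):
    """Sum the memory taken by all layer outputs kept for the backward pass."""
    return sum(prod(shape) * bytes_per_element for shape in output_shapes)


# Hypothetical output shapes (batch, channels, height, width) for a small
# conv net. Replace these with the shapes of your own model's layers.
batch = 32
output_shapes = [
    (batch, 64, 112, 112),
    (batch, 128, 56, 56),
    (batch, 256, 28, 28),
    (batch, 512, 14, 14),
]

total = activation_bytes(output_shapes)
print(f"estimated activation memory: {total / 1024**3:.2f} GiB")
```

The estimate grows linearly with the batch size, so lowering the batch size is usually the quickest way to check whether activations explain the increase. The real number will differ, since some ops store extra buffers and others work in place.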