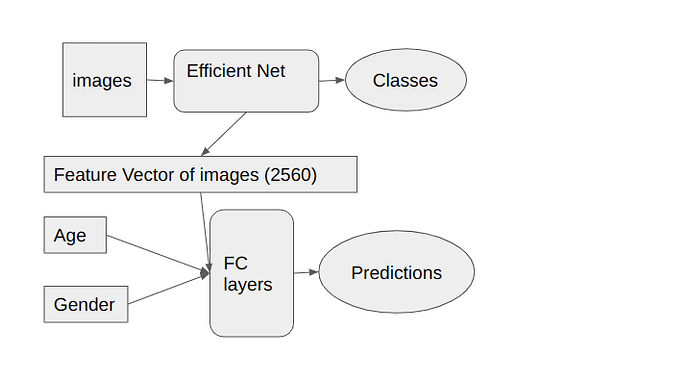

Hi everyone. I need your help and advice on a problem. I want to do survival prediction based on CT images and two clinical variables of the patients (age and gender). To get a feature representation of the CT images, I trained a classification network and extracted the features just before the fully connected layers; the feature vector for every patient has a size of 2560. For the second network, I have to provide age, gender, and the feature vector to do the survival estimation, but I don’t know how to merge the feature vector with the two variables. One way is concatenation, but I think that, because of the large size of the image feature vector, age and gender wouldn’t affect the prediction. Another way is to use some fully connected layers to reduce the size of the image feature vector, add age and gender to the output of that FC layer, and then use further FC layers to predict survival. The problem is that when we increase the number of FC layers, the accuracy decreases. Do you have any idea how to merge a feature vector and two variables in a way that gives good results and accuracy?

Hi Maryam!

My intuition suggests that you would want to simply concatenate the feature vector with the two additional variables and let training of the network sort out how exactly to mix the values together.

I do see your point that your additional two variables may be overwhelmed by the 2560 values in your feature vector. However, that’s the basic reality of it: your CT images contain much more information than the two age and gender variables. So it makes sense that the feature vector derived from the CT images should have much more effect on the final predictions than age and gender.

I also think that you would want to provide your concatenated vector as input to your second-to-last (or maybe even your third-to-last) fully-connected layer, rather than as input to your final fully-connected layer. The idea is that you want age and gender to get mixed nonlinearly with the feature vector by the nonlinear activation between your second-to-last and final layers. This nonlinear mixing, performed by the activations, is how the inputs to your network “communicate” with one another and is the key to how neural networks work.
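As a rough illustration of that suggestion, here is a minimal sketch assuming PyTorch (the thread does not name a framework). The class name `SurvivalHead`, the hidden size of 128, the single-value output, and the scaling of age to roughly [0, 1] are all assumptions made for the example, not details from the original posts.

```python
import torch
import torch.nn as nn


class SurvivalHead(nn.Module):
    """Concatenate image features with clinical variables, then mix them nonlinearly."""

    def __init__(self, n_image_features=2560, n_clinical=2, hidden=128):
        super().__init__()
        # Second-to-last layer: sees image features and clinical variables together.
        self.fc1 = nn.Linear(n_image_features + n_clinical, hidden)
        # Nonlinear activation between the two layers does the "mixing".
        self.act = nn.ReLU()
        # Final layer: one output value per patient (assumed, e.g. a risk score).
        self.fc2 = nn.Linear(hidden, 1)

    def forward(self, image_features, clinical):
        # (batch, 2560) and (batch, 2) -> (batch, 2562)
        x = torch.cat([image_features, clinical], dim=1)
        return self.fc2(self.act(self.fc1(x)))


model = SurvivalHead()
image_features = torch.randn(4, 2560)  # placeholder for the extracted CNN features
# Placeholder clinical data: [age scaled to about 0..1, gender as 0/1] (assumed encoding).
clinical = torch.tensor([[0.63, 1.0], [0.45, 0.0], [0.71, 1.0], [0.52, 0.0]])
out = model(image_features, clinical)
print(out.shape)
```

The only structural point is that `torch.cat` happens before `fc1`, so the activation sits between the clinical variables and the final layer.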

If your current network only has a single fully-connected layer at the end, then I would seriously consider adding a second fully-connected layer at the end so you can get the benefit of the nonlinear activation in between the two. If you’re concerned that adding the additional layer could degrade performance (and it might well in the absence of the age and gender variables), try both with and without the second layer to see whether the benefits of the nonlinear activation outweigh the cost of the additional layer.
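One way to set up that comparison, again as a sketch assuming PyTorch: the helper `make_head` and the hidden size of 128 are hypothetical, and the training and evaluation loop is left out because it depends on your survival loss and data.

```python
import torch
import torch.nn as nn


def make_head(n_inputs=2562, hidden=None):
    """Return a head with one FC layer (hidden=None) or two FC layers with a ReLU between."""
    if hidden is None:
        return nn.Linear(n_inputs, 1)
    return nn.Sequential(
        nn.Linear(n_inputs, hidden),
        nn.ReLU(),
        nn.Linear(hidden, 1),
    )


x = torch.randn(4, 2562)  # placeholder batch of concatenated inputs
heads = {"one FC layer": make_head(), "two FC layers": make_head(hidden=128)}
param_counts = {}
for name, head in heads.items():
    # Count trainable values to see the "cost" of the extra layer.
    param_counts[name] = sum(p.numel() for p in head.parameters())
    print(name, tuple(head(x).shape), param_counts[name])
```

Both heads accept the same 2562-value input, so they can be trained on identical data and compared on the same validation metric.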

Best.

K. Frank

Thanks for your reply.

It is true that the CT images contain more information than age and gender, but they don’t have more effect on the output (prediction).

So I was thinking of turning gender and age into a vector of size 2560 by replicating the values, then concatenating it with the CT image features and giving the concatenated vector to the fully connected layers. But I am not sure whether this is a correct method or not.

Also, as you suggested, I can feed age and gender into the middle layers to make use of the nonlinearity, and then compare the two ways.

Hi Maryam!

My intuition suggests that replicating the age and gender values (thousands of times!) is likely to do more harm than good.

My thinking is that you would have all of these variables that are actually the same and the network would have to “learn” that these variables are just copies of one another. I believe that it would make more sense to just concatenate the two values (unreplicated) to the feature vector and let the final layer(s) “learn” that your concatenated vector of length 2562 consists of 2560 features and the two additional age and gender values. This is very much the kind of thing that neural networks can learn.
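To illustrate why the copies carry nothing new for a linear layer, here is a small numerical check, assuming PyTorch and random placeholder values. For a single output unit, weighting 2560 identical copies of age gives the same result as multiplying the single age value by the sum of those weights, so one weight on the unreplicated value can represent the same thing.

```python
import torch

torch.manual_seed(0)
n_copies = 2560

# Placeholder scaled ages for 8 patients (float64 to keep rounding error negligible).
age = torch.rand(8, 1, dtype=torch.float64)
age_replicated = age.repeat(1, n_copies)  # (8, 2560), every column identical

# Weights a first linear layer might assign to the replicated columns (one output unit).
w = torch.randn(n_copies, 1, dtype=torch.float64)

out_replicated = age_replicated @ w  # uses 2560 separate weights
out_single = age * w.sum()           # uses one effective weight

same = torch.allclose(out_replicated, out_single)
print(same)
```

This only covers the first linear layer's view of the replicated input; how replication affects training in practice would still have to be tested empirically.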

Of course, if you can afford the training time, you could try it both ways, but I expect that not replicating will work better.

Best.

K. Frank