I’m trying to run the DCGAN example on ImageNet 32x32, but am running into problems.

If I just change `--imageSize` to 32, the convolutional layers break and I get the error `RuntimeError: sizes must be non-negative`. I then changed the kernel size of the final Generator layer to 1 and the kernel size of the final Discriminator layer to 2 (as per the suggestion in this related issue on the PyTorch GitHub), but now I get a size mismatch error: `ValueError: Target and input must have the same number of elements. target nelement (64) != input nelement (256)`. I haven’t made any other changes to main.py, as I want to establish a baseline model.
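To make the two errors easier to reason about, here is a minimal sketch of the layer-size arithmetic in plain Python. It assumes the layout I believe main.py uses: four 4x4, stride-2, padding-1 convolutions followed by a final 4x4, stride-1, padding-0 convolution in the Discriminator, and the mirror image in the Generator. The helper names (`conv_out`, `deconv_out`, `discriminator_out`, `generator_out`) are hypothetical and not part of main.py; if the real layers differ, the numbers will too.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a Conv2d layer (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size, kernel, stride, padding):
    """Spatial output size of a ConvTranspose2d layer (no output_padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def discriminator_out(image_size, final_kernel=4):
    # Assumed layout: four (4, 2, 1) convs, then one (final_kernel, 1, 0) conv.
    size = image_size
    for _ in range(4):
        size = conv_out(size, 4, 2, 1)
    return conv_out(size, final_kernel, 1, 0)


def generator_out(final_kernel=4):
    # Assumed layout: a (4, 1, 0) transposed conv on the 1x1 noise input,
    # three (4, 2, 1) transposed convs, then one (final_kernel, 2, 1) layer.
    size = deconv_out(1, 4, 1, 0)
    for _ in range(3):
        size = deconv_out(size, 4, 2, 1)
    return deconv_out(size, final_kernel, 2, 1)


print("D on 64x64, unmodified:      ", discriminator_out(64))
print("D on 32x32, unmodified:      ", discriminator_out(32))
print("D on 32x32, final kernel 2:  ", discriminator_out(32, final_kernel=2))
print("G output, unmodified:        ", generator_out())
print("G output, final kernel 1:    ", generator_out(final_kernel=1))
print("D on that G output, kernel 2:", discriminator_out(generator_out(1), 2))
```

Under these assumptions, the unmodified Discriminator reaches a 2x2 feature map before its final 4x4 convolution, which would account for the negative size. With my kernel changes the Generator no longer emits 32x32 images, so the Discriminator returns more than one value per fake sample, which may be where the 256 vs. 64 mismatch comes from. Removing one stride-2 block from each network, rather than shrinking kernels, looks like a possible alternative, but I haven’t tested it.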

What other changes to the parameters/Generator/Discriminator do I need?

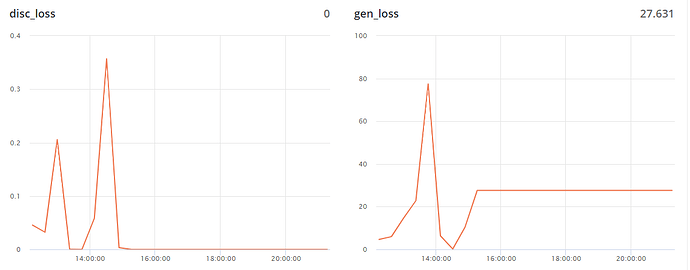

This is a bit unrelated, but I’m also concerned that a full 25-epoch run of the unmodified DCGAN on LSUN (64x64) produced the following result:

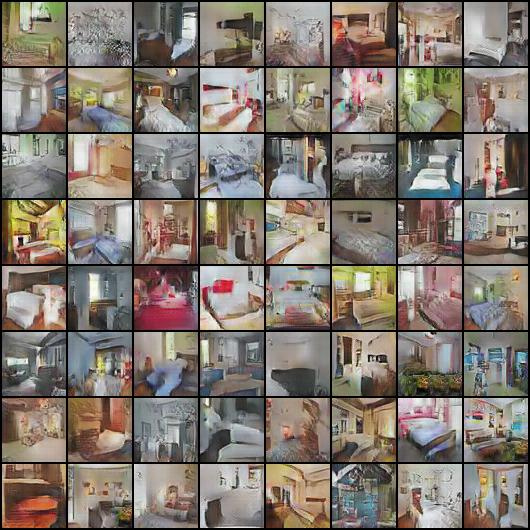

The generator (as per the DCGAN paper) produced passable images in the first few epochs, e.g.

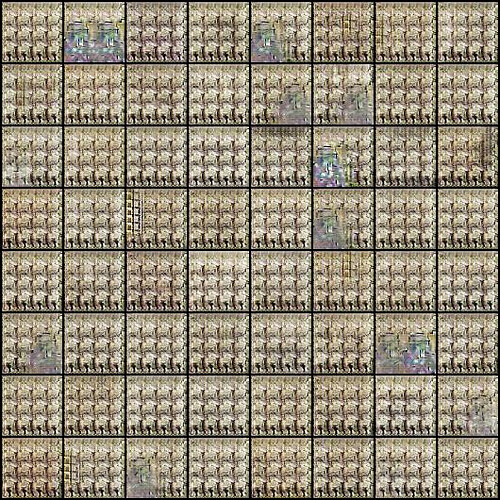

but by the 5th epoch, the generator collapsed completely and could only produce images like this, and never recovered (as per the above loss graphs):

What has gone wrong here? At a loss!