I have a multi-task learning model with two multi-class classification tasks. One part of the model creates a shared feature representation that is fed into two subnets in parallel. The loss function for each subnet is currently cross entropy.

I want to minimize the cross entropy (CE) of one task and maximize the CE of the other task, so that the model doesn't (or can't) learn anything about that second task. I expect the resulting accuracy for that task to be 1/num_labels, i.e. no better than a random guess.
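Two reference points are worth checking numerically before building anything. A speaker head that has learned nothing outputs a uniform distribution over the K speakers: its CE is ln K (about 2.398 for K = 11) and its expected accuracy is 1/K. Maximizing CE on its own, however, is unbounded, and a head can push CE very high by being systematically wrong while still encoding the speaker perfectly. The sketch below uses made-up labels purely for illustration:

```python
import math

import torch
import torch.nn.functional as F

num_speakers = 11  # matches the SpeakerInvariant head in the question
labels = torch.tensor([0, 3, 7, 10])  # made-up speaker labels

# A head that has learned nothing: identical logits for every class,
# i.e. a uniform distribution over the speakers.
uniform_logits = torch.zeros(len(labels), num_speakers)
ce_uniform = F.cross_entropy(uniform_logits, labels)
print(ce_uniform.item(), math.log(num_speakers), 1 / num_speakers)  # ≈ 2.3979, 2.3979, 0.0909

# A head that is confidently *wrong* in a systematic way: it always predicts
# (speaker + 1) mod 11. Its CE is large and its accuracy is 0, yet the
# speaker identity can still be recovered exactly from its output.
shifted = (labels + 1) % num_speakers
wrong_logits = F.one_hot(shifted, num_speakers).float() * 20.0
ce_wrong = F.cross_entropy(wrong_logits, labels)
acc_wrong = (wrong_logits.argmax(dim=1) == labels).float().mean()
recovered = (wrong_logits.argmax(dim=1) - 1) % num_speakers
print(ce_wrong.item(), acc_wrong.item(), torch.equal(recovered, labels))  # ≈ 20.0, 0.0, True
```

This is why the usual target is "the speaker head cannot do better than chance" (CE near ln K, accuracy near 1/K) rather than "CE as large as possible".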

In my network, I have two classifiers:

```python
import torch.nn as nn

# CNN is my shared feature extractor (defined elsewhere); its flattened
# output has 128 features, matching fc1 in both heads below.


class Adversarial(nn.Module):
    def __init__(self):
        super(Adversarial, self).__init__()
        self.cnn = CNN()
        self.emotion_classfier = EmotionClassfier()
        self.speaker_invariant = SpeakerInvariant()

    def forward(self, input):
        input = self.cnn(input)
        input_emotion = self.emotion_classfier(input)
        input_speaker = self.speaker_invariant(input)
        # First confusion: how should I handle the adversarial part here,
        # and how do I then implement the loss function?
        return input_emotion, input_speaker


class EmotionClassfier(nn.Module):
    def __init__(self):
        super(EmotionClassfier, self).__init__()
        self.fc1 = nn.Linear(128, 64)
        self.fc2 = nn.Linear(64, 5)  # 5 is the number of emotion classes
        self.relu = nn.ReLU()

    def forward(self, input):
        input = input.view(input.size(0), -1)  # flatten to (batch, 128)
        input = self.fc1(input)
        input = self.relu(input)
        input = self.fc2(input)
        return input


class SpeakerInvariant(nn.Module):
    def __init__(self):
        super(SpeakerInvariant, self).__init__()
        self.fc1 = nn.Linear(128, 64)
        self.fc2 = nn.Linear(64, 11)  # 11 is the number of speakers/labels
        self.relu = nn.ReLU()

    def forward(self, input):
        input = input.view(input.size(0), -1)  # flatten to (batch, 128)
        input = self.fc1(input)
        input = self.relu(input)
        input = self.fc2(input)
        return input
```
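For the confusion in `forward`, one common approach is the gradient reversal layer from DANN (Ganin and Lempitsky): a layer placed between the shared features and the speaker head that is the identity in the forward pass and multiplies the incoming gradient by -λ in the backward pass. A minimal sketch, assuming hypothetical names `GradReverse`, `GradientReversal` and `lambd`; to keep it self-contained, the CNN and the two heads are passed into the constructor instead of being built inside it:

```python
import torch
import torch.nn as nn


class GradReverse(torch.autograd.Function):
    """Identity in the forward pass; scales the gradient by -lambd in the backward pass."""

    @staticmethod
    def forward(ctx, x, lambd):
        ctx.lambd = lambd
        return x.view_as(x)

    @staticmethod
    def backward(ctx, grad_output):
        # One gradient per forward input: reversed gradient for x, None for lambd.
        return grad_output.neg() * ctx.lambd, None


class GradientReversal(nn.Module):
    def __init__(self, lambd=1.0):
        super().__init__()
        self.lambd = lambd

    def forward(self, x):
        return GradReverse.apply(x, self.lambd)


class Adversarial(nn.Module):
    def __init__(self, cnn, emotion_head, speaker_head, lambd=1.0):
        super().__init__()
        self.cnn = cnn
        self.emotion_classfier = emotion_head
        self.grl = GradientReversal(lambd)
        self.speaker_invariant = speaker_head

    def forward(self, x):
        features = self.cnn(x)
        emotion_logits = self.emotion_classfier(features)
        # The speaker head sees the same features, but the gradient it sends
        # back into self.cnn is reversed (and scaled by lambd).
        speaker_logits = self.speaker_invariant(self.grl(features))
        return emotion_logits, speaker_logits
```

With the classes from the question this would be constructed as `Adversarial(CNN(), EmotionClassfier(), SpeakerInvariant(), lambd=...)`. The speaker head's own weights still receive the ordinary gradient, so it keeps trying to identify the speaker; only the shared CNN is pushed in the opposite direction.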

The loss function I want to implement is as follows:

```python
criterion = nn.CrossEntropyLoss()  # for EmotionClassfier as well as SpeakerInvariant

emotion_out, speaker_out = model(input)  # model = Adversarial()

loss_emotion = criterion(emotion_out, emotion_label)  # want to minimise this loss
loss_speaker = criterion(speaker_out, speaker_label)

# Here I want to maximise the speaker loss (suppose there are five speaker labels
# and I want to learn speaker-independent features),
# i.e. I want to apply gradient reversal for the SpeakerInvariant network.

# `lambda` is a reserved word in Python, so the weighting factor is called `lambd`
loss = loss_emotion + lambd * loss_speaker
```
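With a gradient reversal in place, the two losses are added, not subtracted: the speaker head is trained normally to minimize `loss_speaker`, and the reversal makes the shared encoder maximize it. The weight λ can live inside the reversal instead of multiplying the loss. The sketch below shows one training step under stated assumptions: `nn.Linear` stand-ins replace `CNN()`, the data is random, `lambd = 0.5` is an arbitrary value, and `grad_reverse` is a hypothetical helper that implements the reversal with the detach trick instead of a custom `autograd.Function`:

```python
import torch
import torch.nn as nn


def grad_reverse(x, lambd=1.0):
    # Forward value equals x; the gradient w.r.t. x is -lambd.
    # (x - x.detach()) is zero in value but carries a gradient of 1.
    return x.detach() - lambd * (x - x.detach())


torch.manual_seed(0)
encoder = nn.Sequential(nn.Linear(40, 128), nn.ReLU())  # stand-in for CNN()
emotion_head = nn.Sequential(nn.Linear(128, 64), nn.ReLU(), nn.Linear(64, 5))
speaker_head = nn.Sequential(nn.Linear(128, 64), nn.ReLU(), nn.Linear(64, 11))

params = [*encoder.parameters(), *emotion_head.parameters(), *speaker_head.parameters()]
optimizer = torch.optim.Adam(params, lr=1e-3)
criterion = nn.CrossEntropyLoss()
lambd = 0.5  # arbitrary example weight

x = torch.randn(16, 40)  # dummy batch
emotion_label = torch.randint(0, 5, (16,))
speaker_label = torch.randint(0, 11, (16,))

features = encoder(x)
emotion_out = emotion_head(features)
speaker_out = speaker_head(grad_reverse(features, lambd))

loss_emotion = criterion(emotion_out, emotion_label)
loss_speaker = criterion(speaker_out, speaker_label)
# PLUS sign: the speaker head minimises loss_speaker as usual, while the
# reversed gradient makes the encoder maximise it.
loss = loss_emotion + loss_speaker

optimizer.zero_grad()
loss.backward()
optimizer.step()
```

Writing `loss_emotion - lambd * loss_speaker` without any reversal would also make the speaker head itself maximize its loss, so it would stop being a useful adversary. To check whether the features became speaker-invariant, one option is to train a fresh speaker classifier on the frozen encoder output and compare its held-out accuracy with chance (1/11 ≈ 9% here).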

Could you please help me out here?

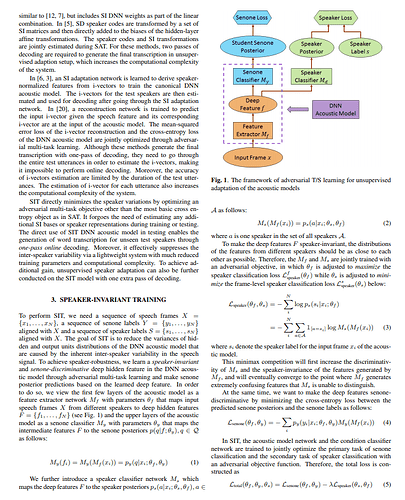

From the paper: