Hi,

I am new to PyTorch quantization (and to Python). I do not understand why my moving-average minimum value can be negative when my last module is a ReLU. This issue happens only for the first layer (see the printout below). I believed that the moving-average observer was probing the output of the tdnn module.

See the forward of tdnn:

x = self.linear_layer(padded_input)

x = self.relu(x.view(mb, -1, self.output_features))

x = self.dequant(x)

tdnn1 (after relu)

(activation_post_process): MovingAverageMinMaxObserver(min_val=-2.8240833282470703, max_val=3.0684268474578857)

tdnn2 (after relu)

(activation_post_process): MovingAverageMinMaxObserver(min_val=0.0, max_val=3.1495800018310547)

Could you post more information about how you are calling the observer and where it is in your model? From your printout we can see the min_val/max_val of the observer, but not how it gets the values.

If I had to guess, the observer is getting values from something other than a relu, but would be good to get more info to prove or disprove that.
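A minimal sketch of that reasoning, not taken from the poster's model: the tensor values are made up for illustration. It feeds the same data to two separate `MovingAverageMinMaxObserver` instances, one before and one after a ReLU. An observer that only ever sees ReLU output cannot report a negative `min_val`.

```python
import torch
from torch.quantization import MovingAverageMinMaxObserver

# Made-up input containing negative values (illustration only).
x = torch.tensor([[-2.5, -0.5, 1.0, 3.0]])

obs_input = MovingAverageMinMaxObserver()  # sees the raw input
obs_relu = MovingAverageMinMaxObserver()   # sees the ReLU output

# Calling an observer on a tensor updates its min_val / max_val buffers.
obs_input(x)
obs_relu(torch.relu(x))

input_min = obs_input.min_val.item()
relu_min = obs_relu.min_val.item()

print("min_val seen on the input:     ", input_min)
print("min_val seen after the ReLU:   ", relu_min)
```

So a negative `min_val` suggests the observer is attached somewhere upstream of the ReLU.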

Hi Vasiliy,

Thanks for looking into my question.

Let me rephrase my question more precisely.

model init

self.quant = torch.quantization.QuantStub()

self.dequant = torch.quantization.DeQuantStub()

model forward

x = self.linear_layer(self.quant(padded_input))

x = self.relu(x.view(mb, -1, self.output_features))

x = self.dequant(x)

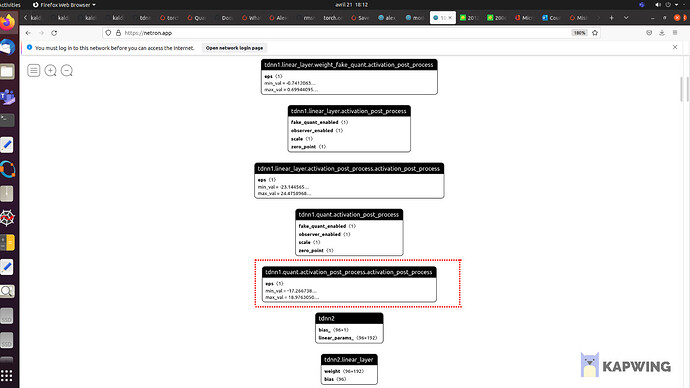

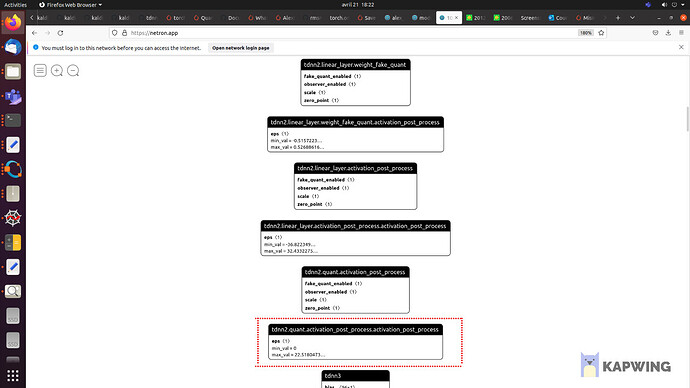

During QAT, is the MovingAverageMinMaxObserver applied at torch.quantization.QuantStub(), at torch.quantization.DeQuantStub(), or at both? Based on the graph view in “Netron”, I believed it was at DeQuantStub().

FYI, I prepare the model for QAT with this line: torch.quantization.prepare_qat(model, inplace=True).

See print.

Hi @Thomas_CAMIER , could you post the code for your full example (so that someone else could copy-paste your code and run it)? That would enable us to help better. It’s still not 100% clear from what you’ve posted so far, since it does not show precisely what your code is doing.

Hi @Vasiliy_Kuznetsov,

I finally resolved my misunderstanding.

The observer is applied at the QuantStub, i.e. in x = self.linear_layer(self.quant(padded_input)).

This is why I see negative values: I quantize the input of my “linear-relu” block, not its output. It was obvious in hindsight, but I was confused by the name “activation_post_process”, which in my case is attached to the input …
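For anyone landing here later, this can be checked with a small sketch. `TinyBlock`, its layer sizes, the input values and the "fbgemm" QAT qconfig are all assumptions for illustration and not my real tdnn; the exact fake-quantize class may differ between PyTorch versions.

```python
import torch
import torch.nn as nn


class TinyBlock(nn.Module):
    """Hypothetical stand-in for the tdnn block: quant -> linear -> relu -> dequant."""

    def __init__(self, in_features=4, out_features=4):
        super().__init__()
        self.quant = torch.quantization.QuantStub()
        self.linear_layer = nn.Linear(in_features, out_features)
        self.relu = nn.ReLU()
        self.dequant = torch.quantization.DeQuantStub()

    def forward(self, x):
        x = self.linear_layer(self.quant(x))
        x = self.relu(x)
        return self.dequant(x)


model = TinyBlock().train()  # prepare_qat expects a model in training mode
model.qconfig = torch.quantization.get_default_qat_qconfig("fbgemm")  # assumed qconfig
torch.quantization.prepare_qat(model, inplace=True)

# Made-up input with known negative values.
x = torch.linspace(-2.0, 2.0, steps=32).reshape(8, 4)
out = model(x)

# The fake-quantize module attached to QuantStub wraps the actual observer.
quant_observer = model.quant.activation_post_process.activation_post_process
quant_min = quant_observer.min_val.item()
out_min = out.min().item()

print("QuantStub observer min_val:", quant_min)  # follows the block input
print("block output min:", out_min)              # after ReLU, never negative
print("DeQuantStub has an observer:",
      hasattr(model.dequant, "activation_post_process"))
```

The `min_val` stored under the QuantStub follows the block input, so it goes negative whenever the input does, even though the block output after the ReLU stays non-negative.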

Thanks for your help

Regards,

Thomas