Yes, that is correct. I forgot to mention it, but my main error is the following: “TypeError: forward() missing 2 required positional arguments: 'cap_lens' and 'hidden'” (the same error discussed in #2 by Diego).

There are two cases I’m looking at as the possible root of the problem:

(1st Case) Diego from the other post (TypeError: forward() missing 2 required positional arguments: 'cap_lens' and 'hidden' - #2 by Diego) said “I think the problem is, you are using dropout with only one layer. You need at least 2 layers to apply dropout if you are using the LSTM class (torch.nn — PyTorch 2.1 documentation).”

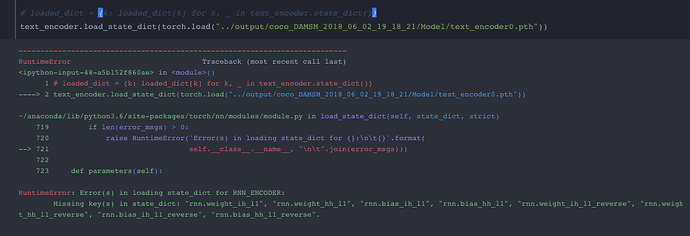
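To check how serious Case 1 is on its own, here is a minimal sketch that builds a single-layer `nn.LSTM` with non-zero dropout and runs a forward pass. The sizes (300 inputs, 128 hidden units) are arbitrary assumptions, not the AttnGAN values. PyTorch only emits a `UserWarning` here; the module is still created and runs normally, so the dropout setting by itself should not produce the `TypeError`.

```python
import warnings

import torch
import torch.nn as nn

# Record warnings raised while constructing a 1-layer LSTM with dropout > 0.
with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    lstm = nn.LSTM(input_size=300, hidden_size=128, num_layers=1,
                   dropout=0.5, batch_first=True)

messages = [str(w.message) for w in caught]
print(messages)  # the dropout / num_layers warning

# The module still works: dropout is simply not applied with only one layer.
x = torch.randn(2, 5, 300)  # (batch, seq_len, input_size)
out, (h, c) = lstm(x)
print(out.shape)  # torch.Size([2, 5, 128])
```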

I wasn’t sure whether this was a big deal, because I only got a warning when I created one layer, and the error itself doesn’t complain about it. After adding a second layer, I started getting missing key(s) in the state_dict. Your solution of initializing the second layer randomly while loading the parameters for the first sounds great, but I’d like your opinion on Case 2 before deciding whether we really need to solve Case 1. I’m mostly concerned with the root of the problem. Sorry for not mentioning Case 2 until now.

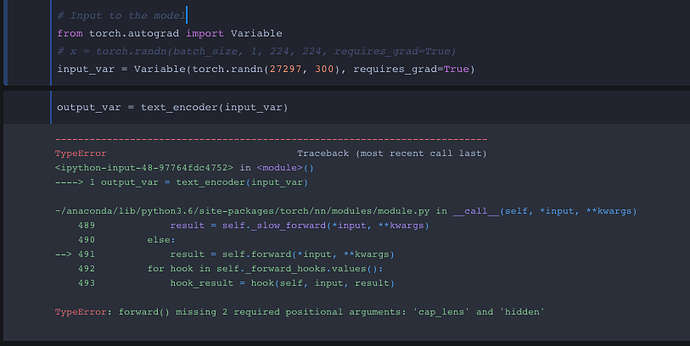
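The missing keys after adding a second layer can be reproduced in isolation. This sketch assumes a plain, unidirectional LSTM with made-up sizes (a bidirectional encoder would also report `_reverse` keys). Loading a 1-layer state_dict into a 2-layer LSTM with `strict=False` copies the layer-0 weights and leaves the layer-1 weights at their random initialization, which is the approach suggested above.

```python
import torch
import torch.nn as nn

# Hypothetical sizes; the real encoder's sizes may differ.
old = nn.LSTM(input_size=300, hidden_size=128, num_layers=1, batch_first=True)
new = nn.LSTM(input_size=300, hidden_size=128, num_layers=2,
              dropout=0.5, batch_first=True)

# strict=False reports mismatches instead of raising an error.
result = new.load_state_dict(old.state_dict(), strict=False)
print(sorted(result.missing_keys))
# ['bias_hh_l1', 'bias_ih_l1', 'weight_hh_l1', 'weight_ih_l1']
print(result.unexpected_keys)  # []

# Layer 0 now holds the pretrained weights; layer 1 stays randomly initialized.
print(torch.equal(new.weight_ih_l0, old.weight_ih_l0))  # True
```

Note that this only fixes the loading step; it does not change what arguments the encoder’s forward expects.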

(2nd Case) If I stick with one layer, the state_dict loads successfully with no missing key(s); I only get the warning about using dropout with a single-layer LSTM. However, I think the real root of the problem is that text_encoder is called without the cap_lens and hidden arguments that its forward function requires. This case is much harder, since I don’t know where those two variables truly come from. I’m using this repo (GitHub - taoxugit/AttnGAN) as the reference for cap_lens and hidden. They are located in AttnGAN/code/pretrain_DAMSM.py at line 65.

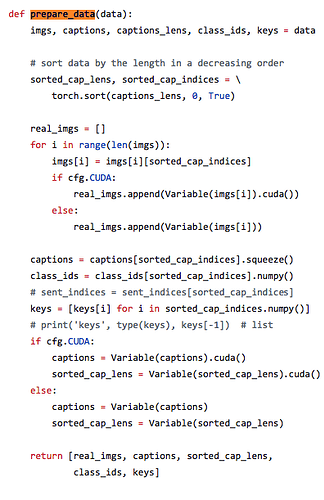

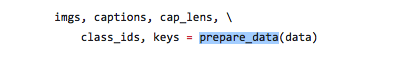

However, those values are generated from the data by prepare_data, defined in AttnGAN/code/datasets.py at line 28.
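The error message itself says what the encoder needs: a batch of padded token ids, the length of each caption, and an initial LSTM state. The class below is a hypothetical stand-in, not AttnGAN’s encoder; it only mimics the `forward(captions, cap_lens, hidden)` signature from the error, and its `init_hidden` method is an assumption about how the initial state might be created. It reproduces the `TypeError` when called with captions alone and shows a working call.

```python
import torch
import torch.nn as nn
from torch.nn.utils.rnn import pack_padded_sequence, pad_packed_sequence


class ToyTextEncoder(nn.Module):
    """Hypothetical encoder with the same forward signature as in the error."""

    def __init__(self, vocab_size=50, emb_dim=16, hidden_dim=8, num_layers=1):
        super().__init__()
        self.num_layers = num_layers
        self.hidden_dim = hidden_dim
        self.emb = nn.Embedding(vocab_size, emb_dim, padding_idx=0)
        self.rnn = nn.LSTM(emb_dim, hidden_dim, num_layers=num_layers,
                           batch_first=True)

    def init_hidden(self, batch_size):
        # (h_0, c_0), each shaped (num_layers, batch, hidden_dim)
        shape = (self.num_layers, batch_size, self.hidden_dim)
        return torch.zeros(shape), torch.zeros(shape)

    def forward(self, captions, cap_lens, hidden):
        emb = self.emb(captions)
        # cap_lens tells the LSTM where each caption really ends.
        packed = pack_padded_sequence(emb, cap_lens.cpu(), batch_first=True)
        out, (h_n, c_n) = self.rnn(packed, hidden)
        out, _ = pad_packed_sequence(out, batch_first=True,
                                     total_length=captions.size(1))
        sent_emb = h_n[-1]  # final hidden state of the last layer
        return out, sent_emb


encoder = ToyTextEncoder()
captions = torch.tensor([[5, 7, 9, 2],
                         [3, 4, 0, 0]])  # 0 = padding (assumed pad id)
cap_lens = torch.tensor([4, 2])          # sorted longest-first

error_message = ""
try:
    encoder(captions)  # missing cap_lens and hidden
except TypeError as err:
    error_message = str(err)
print(error_message)

hidden = encoder.init_hidden(captions.size(0))
words_emb, sent_emb = encoder(captions, cap_lens, hidden)
print(words_emb.shape, sent_emb.shape)  # torch.Size([2, 4, 8]) torch.Size([2, 8])
```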

I tried to replicate prepare_data to create a new cap_lens, but I keep ending up with empty content for the data.
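If the goal is just a valid cap_lens for a batch you already have, it may be easier to derive it directly from the padded captions than to rebuild prepare_data. The helper below is hypothetical (it is not AttnGAN’s prepare_data) and assumes captions are right-padded with id 0. It fails loudly on an empty batch or an all-padding caption, which helps tell whether the “empty content” comes from the data loading step rather than from the length computation.

```python
import torch


def make_cap_lens(captions, pad_id=0):
    """Hypothetical helper: derive cap_lens from a right-padded batch of
    token ids and sort the batch longest-first."""
    if captions.numel() == 0:
        raise ValueError("captions batch is empty; check the DataLoader output")
    # Count non-padding tokens per row (assumes padding only at the end).
    cap_lens = (captions != pad_id).sum(dim=1)
    if (cap_lens == 0).any():
        raise ValueError("some captions contain only padding")
    # pack_padded_sequence (enforce_sorted=True by default) needs
    # lengths in decreasing order.
    sorted_lens, order = torch.sort(cap_lens, dim=0, descending=True)
    return captions[order], sorted_lens, order


captions = torch.tensor([[3, 4, 0, 0],
                         [5, 7, 9, 2],
                         [6, 0, 0, 0]])
sorted_caps, cap_lens, order = make_cap_lens(captions)
print(cap_lens.tolist())  # [4, 2, 1]
print(order.tolist())     # [1, 0, 2]
```

If other tensors in the batch (images, class ids) must stay aligned with the captions, index them with the same `order`.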