Hello everyone,

Can someone confirm that the server for downloading the MNIST dataset is down? I cannot access the dataset through the dataloader. The following message is printed:

```
Traceback (most recent call last):
  File "/opt/conda/lib/python3.8/threading.py", line 932, in _bootstrap_inner
    self.run()
  File "/opt/conda/lib/python3.8/threading.py", line 870, in run
    self._target(*self._args, **self._kwargs)
  File "/app/task_listener.py", line 67, in do_work
    response = eval_fitness(arch,args)
  File "/app/fitness_evaluator.py", line 101, in eval_fitness
    train_dataloader, valid_dataloader = get_train_valid_dataloaders(args,input,kfold,0)
  File "/app/utils/dataloader_provider.py", line 354, in get_train_valid_dataloaders
    train_ds = INPUT_FUNCTOR[key](root=path.join(".",input), test=False, train=True,download=True,transform=None,kfolds=k, current_fold=current_fold,key=key)
  File "/app/utils/dataloader_provider.py", line 37, in __init__
    super().__init__(root, train=(not test), transform=transform, target_transform=target_transform, download=download)
  File "/opt/conda/lib/python3.8/site-packages/torchvision/datasets/mnist.py", line 79, in __init__
    self.download()
  File "/opt/conda/lib/python3.8/site-packages/torchvision/datasets/mnist.py", line 146, in download
    download_and_extract_archive(url, download_root=self.raw_folder, filename=filename, md5=md5)
  File "/opt/conda/lib/python3.8/site-packages/torchvision/datasets/utils.py", line 256, in download_and_extract_archive
    download_url(url, download_root, filename, md5)
  File "/opt/conda/lib/python3.8/site-packages/torchvision/datasets/utils.py", line 84, in download_url
    raise e
  File "/opt/conda/lib/python3.8/site-packages/torchvision/datasets/utils.py", line 70, in download_url
    urllib.request.urlretrieve(
  File "/opt/conda/lib/python3.8/urllib/request.py", line 247, in urlretrieve
    with contextlib.closing(urlopen(url, data)) as fp:
  File "/opt/conda/lib/python3.8/urllib/request.py", line 222, in urlopen
    return opener.open(url, data, timeout)
  File "/opt/conda/lib/python3.8/urllib/request.py", line 531, in open
    response = meth(req, response)
  File "/opt/conda/lib/python3.8/urllib/request.py", line 640, in http_response
    response = self.parent.error(
  File "/opt/conda/lib/python3.8/urllib/request.py", line 569, in error
    return self._call_chain(*args)
  File "/opt/conda/lib/python3.8/urllib/request.py", line 502, in _call_chain
    result = func(*args)
  File "/opt/conda/lib/python3.8/urllib/request.py", line 649, in http_error_default
    raise HTTPError(req.full_url, code, msg, hdrs, fp)
urllib.error.HTTPError: HTTP Error 503: Service Unavailable
0it [00:00, ?it/s]
```

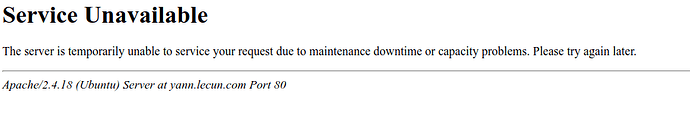

Downloading the files from my browser does not work either; it shows the following:

Is anyone else facing this problem? Is there any information on when the server will be back up?
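The browser check can also be repeated from Python without going through the torchvision dataset class, which helps separate a server outage from a problem in the dataloader code. Below is a minimal sketch using only the standard library. `probe` and `MNIST_URL` are hypothetical names, and the URL is an assumption about where older torchvision releases fetch MNIST from; check `torchvision/datasets/mnist.py` in your installation for the URLs it actually uses.

```python
import urllib.error
import urllib.request

# Assumption: the original MNIST host used by older torchvision releases.
# Replace with the URL listed in your installed torchvision/datasets/mnist.py.
MNIST_URL = "http://yann.lecun.com/exdb/mnist/train-images-idx3-ubyte.gz"


def probe(url, opener=urllib.request.urlopen, timeout=10):
    """Return the HTTP status code the server answers with, or None if unreachable.

    `opener` defaults to urllib.request.urlopen, the same function that
    urlretrieve calls in the traceback; it is a parameter only so the
    function can be exercised without network access.
    """
    try:
        # The response body is never read, so the file is not downloaded.
        with opener(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx answers such as "503 Service Unavailable" are raised as HTTPError.
        return err.code
    except urllib.error.URLError:
        # DNS failure, refused connection, etc.: no HTTP status is available.
        return None
```

While the outage lasts, `probe(MNIST_URL)` would be expected to return `503`, matching the `HTTPError` at the bottom of the traceback; a `200` would point back at the local setup instead.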

Best Regards