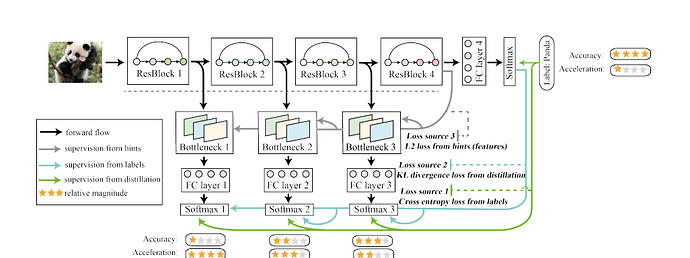

I am implementing a binary classification task with a self-distillation model, an approach that has been reported to improve accuracy. The network has four blocks. I compute one loss between the final predictions and the labels, and another between each intermediate prediction and the final prediction. I implement everything shown in the diagram except the loss from hints.
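
For reference, the objective computed by the training code further down can be written out as follows. This only restates that code; the symbols are notation introduced here: $z_i$ are the logits of intermediate head $i$, $z_4$ the final logits, $y$ the labels, $T = 3$ the temperature, $\alpha = 0.1$ the weighting, and $N$ the batch size. The soft targets are treated as constants (detached), and the hint loss is left out.

$$
p = \operatorname{softmax}\!\left(\frac{z_4}{T}\right), \qquad
\mathcal{L}_{\mathrm{KD}}^{(i)} = -\frac{T^2}{N} \sum_{n=1}^{N} \sum_{c} p_{n,c} \, \log \operatorname{softmax}\!\left(\frac{z_i}{T}\right)_{n,c}
$$

$$
\mathcal{L}_{\mathrm{total}} = (1 - \alpha) \sum_{i=1}^{3} \mathcal{L}_{\mathrm{KD}}^{(i)} + \alpha \, \mathrm{CE}(z_4, y)
$$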

    import math

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    import torch.utils.model_zoo as model_zoo


    class ResNet(nn.Module):
        def __init__(self, block, layers, num_classes, KaimingInit=False):
            super(ResNet, self).__init__()
            self.inplanes = 16

            # Stem: single-channel input
            self.conv1 = nn.Conv2d(1, 16, kernel_size=7, stride=2, padding=3, bias=False)
            self.bn1 = nn.BatchNorm2d(16)
            self.relu = nn.ReLU(inplace=True)
            self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

            # Four residual stages
            self.layer1 = self._make_layer(block, 16, layers[0])
            self.layer2 = self._make_layer(block, 32, layers[1], stride=2)
            self.layer3 = self._make_layer(block, 64, layers[2], stride=2)
            self.layer4 = self._make_layer(block, 128, layers[3], stride=2)
            self.avgpool = nn.AdaptiveAvgPool2d((1, 1))

            # One 2-class head per stage; the last one is the final classifier
            self.middle_fc1 = nn.Linear(16 * block.expansion, 2)
            self.middle_fc2 = nn.Linear(32 * block.expansion, 2)
            self.middle_fc3 = nn.Linear(64 * block.expansion, 2)
            self.classifier = nn.Linear(128 * block.expansion, 2)

            if KaimingInit:
                print('Using Kaiming Initialization.')
                for m in self.modules():
                    if isinstance(m, nn.Conv2d):
                        nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                    elif isinstance(m, nn.BatchNorm2d):
                        nn.init.constant_(m.weight, 1)
                        nn.init.constant_(m.bias, 0)

        def _make_layer(self, block, planes, blocks, stride=1):
            # Project the shortcut when the spatial size or channel count changes
            downsample = None
            if stride != 1 or self.inplanes != planes * block.expansion:
                downsample = nn.Sequential(
                    nn.Conv2d(self.inplanes, planes * block.expansion,
                              kernel_size=1, stride=stride, bias=False),
                    nn.BatchNorm2d(planes * block.expansion),
                )

            layers = []
            layers.append(block(self.inplanes, planes, stride, downsample))
            self.inplanes = planes * block.expansion
            for i in range(1, blocks):
                layers.append(block(self.inplanes, planes))

            return nn.Sequential(*layers)

        def forward(self, x):
            x = self.conv1(x)
            x = self.bn1(x)
            x = self.relu(x)
            x = self.maxpool(x)

            # After each stage: global average pool, flatten, classify
            x = self.layer1(x)
            middle_output1 = self.avgpool(x).view(x.size()[0], -1)
            middle_output1 = self.middle_fc1(middle_output1)

            x = self.layer2(x)
            middle_output2 = self.avgpool(x).view(x.size()[0], -1)
            middle_output2 = self.middle_fc2(middle_output2)

            x = self.layer3(x)
            middle_output3 = self.avgpool(x).view(x.size()[0], -1)
            middle_output3 = self.middle_fc3(middle_output3)

            x = self.layer4(x)
            x = self.avgpool(x).view(x.size()[0], -1)
            out = self.classifier(x)

            # Three intermediate logits, final logits, and pooled final features
            return middle_output1, middle_output2, middle_output3, out, x
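
The head pattern used after each stage (global average pool, flatten, linear layer) can be checked in isolation. The following is only a sketch: `AuxHead` is a hypothetical helper, not part of the model above, the feature maps are random stand-ins, and the channel widths assume `block.expansion == 1`.

```python
import torch
import torch.nn as nn


class AuxHead(nn.Module):
    """Hypothetical helper: pool a feature map globally, then classify it."""

    def __init__(self, channels, num_classes=2):
        super().__init__()
        self.pool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(channels, num_classes)

    def forward(self, feature_map):
        pooled = torch.flatten(self.pool(feature_map), 1)  # (N, C)
        return self.fc(pooled)                             # (N, num_classes)


torch.manual_seed(0)

# Random stand-ins for the four stage outputs: (channels, spatial size).
# Widths 16/32/64/128 assume block.expansion == 1.
stage_specs = [(16, 32), (32, 16), (64, 8), (128, 4)]
feature_maps = [torch.randn(4, c, s, s) for c, s in stage_specs]
heads = [AuxHead(c) for c, _ in stage_specs]

logits = [head(fm) for head, fm in zip(heads, feature_maps)]
shapes = [tuple(l.shape) for l in logits]
print(shapes)
```

Every head should produce logits of the same shape, `(batch, 2)`, regardless of the spatial size of its input, which is what lets the intermediate predictions be compared directly with the final one.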

I tried `CrossEntropyLoss` with two output logits, and I also tried `BCEWithLogitsLoss` with a single output logit. In both cases the accuracy stays at around 50% in every epoch.
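
To make the two setups concrete, here is a minimal sketch of what each loss expects. The logits are random and the labels are made up; nothing here comes from the actual dataset. `CrossEntropyLoss` takes logits of shape `(N, 2)` with integer class indices, while `BCEWithLogitsLoss` takes one logit per sample with float targets of the same shape.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
labels = torch.tensor([0, 1, 1, 0])  # made-up integer class indices, shape (N,)

# Variant 1: two logits per sample with CrossEntropyLoss.
two_logits = torch.randn(4, 2)
ce_loss = nn.CrossEntropyLoss()(two_logits, labels)
ce_pred = two_logits.argmax(dim=1)

# Variant 2: one logit per sample with BCEWithLogitsLoss.
# Targets must be float and match the shape of the logits.
one_logit = torch.randn(4, 1)
z = one_logit.squeeze(1)
bce_loss = nn.BCEWithLogitsLoss()(z, labels.float())
bce_pred = (z > 0).long()  # sigmoid(z) > 0.5 is the same as z > 0

# The two formulations agree: a single logit z behaves like the
# two-logit pair [0, z] under softmax cross-entropy.
padded = torch.stack([torch.zeros_like(z), z], dim=1)
ce_equiv = nn.CrossEntropyLoss()(padded, labels)
losses_match = torch.allclose(bce_loss, ce_equiv, atol=1e-6)
print(losses_match)
```

Since the two losses are mathematically equivalent for binary problems, seeing the same behaviour with both is not surprising in itself.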

    import torch
    import torchaudio
    from torch import nn
    from torch.utils.data import DataLoader
    import numpy as np

    BATCH_SIZE = 128
    EPOCHS = 20
    LEARNING_RATE = 0.001

    softmax = nn.Softmax(dim=1)


    def create_data_loader(train_data, batch_size):
        train_dataloader = DataLoader(train_data, batch_size=batch_size)
        return train_dataloader


    def kd_loss_function(output, target_output):
        # Cross-entropy between temperature-softened logits and soft targets
        temperature = 3
        output = output / temperature
        output_log_softmax = torch.log_softmax(output, dim=1)
        loss_kd = -torch.mean(torch.sum(output_log_softmax * target_output, dim=1))
        return loss_kd


    def train_single_epoch(model, data_loader, loss_fn, optimiser, device):
        temperature = 3
        alpha = 0.1
        correct = 0
        total = 0

        for inputs, target, domain in data_loader:
            inputs = inputs.to(device)
            # label = target.clone().to(device)
            # target = torch.tensor(target, dtype=torch.float).to(device)
            target = target.to(device)
            domain = domain.to(device)

            # calculate loss
            middle_output1, middle_output2, middle_output3, prediction, features = model(inputs)

            # Soft targets from the final head, softened by the temperature
            temp4 = prediction / temperature
            temp4 = torch.softmax(temp4, dim=1)

            # Running accuracy of the final head
            probabilities = softmax(prediction)
            predicted_classes = torch.argmax(probabilities, dim=1)
            correct += (predicted_classes == target).sum().item()
            total += target.size(0)

            # Distillation losses: each intermediate head against the detached final head
            loss1by4 = kd_loss_function(middle_output1, temp4.detach()) * (temperature**2)
            loss2by4 = kd_loss_function(middle_output2, temp4.detach()) * (temperature**2)
            loss3by4 = kd_loss_function(middle_output3, temp4.detach()) * (temperature**2)

            # Supervised loss of the final head against the labels
            loss = loss_fn(prediction, target)
            total_loss = (1 - alpha) * (loss1by4 + loss2by4 + loss3by4) + alpha * loss

            optimiser.zero_grad()
            total_loss.backward()
            optimiser.step()

        print(f"loss: {loss.item()}")
        # accuracy = 100 * correct / 30000
        accuracy = correct / total
        print(" Accuracy = {}".format(accuracy))


    def train(model, data_loader, loss_fn, optimiser, device, epochs):
        model.train()
        for i in range(epochs):
            print(f"Epoch {i+1}")
            # train_single_epoch returns nothing, so there is nothing to unpack
            train_single_epoch(model, data_loader, loss_fn, optimiser, device)
            print("---------------------------")
        print("Finished training")


    device = "cuda" if torch.cuda.is_available() else "cpu"

    # eca_resnet18() and the dataset behind train_dataloader are not shown here
    model = eca_resnet18().to(device)
    loss_fn = torch.nn.CrossEntropyLoss()
    optimiser = torch.optim.Adam(model.parameters(),
                                 lr=LEARNING_RATE)

    train(model, train_dataloader, loss_fn, optimiser, device, EPOCHS)
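
As a sanity check on `kd_loss_function`, the sketch below compares it with PyTorch's KL divergence on random logits. The function is repeated with the temperature as a parameter (an assumption made for the demo), and `student` and `teacher` are made-up tensors. The soft-target cross-entropy should differ from the KL divergence only by the entropy of the soft targets, which does not depend on the student, so both should give the same gradient.

```python
import torch
import torch.nn.functional as F


def kd_loss_function(output, target_output, temperature=3.0):
    # Same computation as in the post, with the temperature exposed as an argument.
    log_p = torch.log_softmax(output / temperature, dim=1)
    return -torch.mean(torch.sum(log_p * target_output, dim=1))


torch.manual_seed(0)
T = 3.0
student = torch.randn(8, 2, requires_grad=True)  # stand-in for an intermediate head
teacher = torch.randn(8, 2)                      # stand-in for the final head
soft_targets = torch.softmax(teacher / T, dim=1)

soft_ce = kd_loss_function(student, soft_targets, T)
kl = F.kl_div(torch.log_softmax(student / T, dim=1), soft_targets,
              reduction="batchmean")
teacher_entropy = -(soft_targets * soft_targets.log()).sum(dim=1).mean()

# soft-target cross-entropy = KL divergence + entropy of the soft targets
gap_matches = torch.allclose(soft_ce - kl, teacher_entropy, atol=1e-5)

# The entropy term is constant w.r.t. the student, so the gradients agree.
(grad_ce,) = torch.autograd.grad(soft_ce, student)
(grad_kl,) = torch.autograd.grad(kl, student)
grads_match = torch.allclose(grad_ce, grad_kl, atol=1e-6)
print(gap_matches, grads_match)
```

If both checks hold, the distillation term behaves like a standard KL-based knowledge distillation loss as far as optimisation is concerned.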