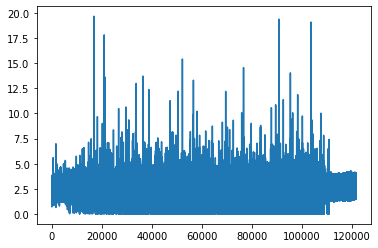

I’m trying to use transfer learning to classify facial emotions on the FER2013 dataset from kaggle. I resized the images to 224x224 and converted them to 3 channel grayscale images. While training, there seems to be no progress at all and the loss function graph doesn’t look right either. Is there anything wrong I’m doing? (I’m relatively new to deep learning)

model = torchvision.models.vgg16(pretrained=True)

#model summary

print(model)

# freezing parameters for pretrained VGG16

for params in model.parameters():

params.require_grad = False

#number of features in penultimate classifier layer

num_feature = model.classifier[6].in_features

model.classifier[6] = nn.Sequential(

nn.Linear(num_feature, 256), nn.ReLU(), nn.Dropout(0.2),

nn.Linear(256, 7))

for params in model.classifier.parameters():

params.require_grad = True

model = model.to(device)

print(model)

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(model.parameters())

epochs = 10

loss_log = 100

LOSS = []

for epoch in range(epochs):

epoch_correct = 0

print(f"Epoch: {epoch+1}/{epochs}")

for idx,(x,label) in enumerate(x_train_data):

x = x.to(device)

label = label.to(device)

optimizer.zero_grad()

out = model(x)

loss = criterion(out,label)

if (idx+1)%loss_log == 0:

print(f"log loss at {idx}: {loss}")

LOSS.append(loss)

loss.backward()

optimizer.step()

Here’s the graph: