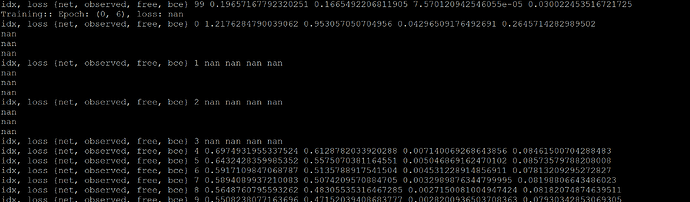

I have a PyTorch model that outputs NaN after a few epochs. There are no NaNs in the input, and the loss contains no logs or divisions that could produce NaN. While debugging, I found that the last two layers of the model output NaN at some positions. However, the NaNs disappear on their own after a few epochs and then reappear a few epochs later.
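The layer-by-layer debugging described above can be sketched with forward hooks. This is a minimal sketch, not the code I actually used: `register_nan_hooks` and `nonfinite_grads` are hypothetical helper names, and the sketch assumes each submodule returns a plain tensor. It records which submodules emit NaN/Inf during the forward pass and which parameters end up with non-finite gradients after `backward()`. PyTorch's built-in `torch.autograd.set_detect_anomaly(True)` can additionally point to the backward operation that first produced a NaN, at a noticeable speed cost.

```python
import torch
import torch.nn as nn


def register_nan_hooks(model: nn.Module) -> list:
    """Hypothetical helper: attach forward hooks that record which submodules
    emit NaN/Inf. Returns a list that is appended to on every forward pass."""
    offenders = []

    def make_hook(name):
        def hook(module, inputs, output):
            # This sketch only inspects plain tensor outputs.
            if isinstance(output, torch.Tensor) and not torch.isfinite(output).all():
                offenders.append(name)
        return hook

    for name, module in model.named_modules():
        if name:  # skip the root module, whose name is ""
            module.register_forward_hook(make_hook(name))
    return offenders


def nonfinite_grads(model: nn.Module) -> list:
    """Hypothetical helper: names of parameters whose .grad contains NaN/Inf."""
    return [
        name
        for name, p in model.named_parameters()
        if p.grad is not None and not torch.isfinite(p.grad).all()
    ]
```

Calling `nonfinite_grads(model)` right after `loss.backward()` and before `optimizer.step()` shows whether the NaNs start in the gradients (and are then written into the weights by the update) rather than in the forward pass.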

What could be the cause of this? My loss function combines a regression loss and a cross-entropy loss. For the regression loss, I compute the L1 loss only at positions where the target values are less than 1, whereas the cross-entropy loss is computed over the whole grid after converting both the target and the model output into occupancy grids (values less than 1 become 1, values greater than 1 become 0).
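A loss of the shape described above might look roughly like the following sketch. Several parts are assumptions, not taken from my actual code: `combined_loss`, `occ_scale` and `ce_weight` are hypothetical names, `pred` and `target` are assumed to have the same shape, and the occupancy term is written as binary cross-entropy with logits. A hard threshold on the model output has zero gradient almost everywhere, so this sketch swaps in a differentiable stand-in: the logit `occ_scale * (1 - pred)` is positive where `pred < 1` (occupied) and negative where `pred > 1`.

```python
import torch
import torch.nn.functional as F


def combined_loss(pred: torch.Tensor, target: torch.Tensor,
                  occ_scale: float = 10.0, ce_weight: float = 1.0) -> torch.Tensor:
    """Hypothetical sketch: masked L1 regression + occupancy cross-entropy."""
    # Regression term: L1 only where the target is below 1.
    mask = target < 1
    if mask.any():
        l1 = F.l1_loss(pred[mask], target[mask])
    else:
        # The mean over an empty selection is NaN, so use 0 when no
        # target value in the batch is below 1.
        l1 = pred.new_zeros(())

    # Occupancy target: 1 where target < 1, 0 otherwise.
    occ_target = mask.to(pred.dtype)
    # Assumed differentiable stand-in for thresholding pred at 1.
    occ_logits = occ_scale * (1.0 - pred)
    # The *_with_logits form is the numerically stable variant of BCE.
    ce = F.binary_cross_entropy_with_logits(occ_logits, occ_target)

    return l1 + ce_weight * ce
```

The `mask.any()` guard matters for this kind of masked loss: if a batch happens to contain no target values below 1, the plain masked L1 evaluates to NaN for that batch only, which would make NaNs appear intermittently rather than on every step.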