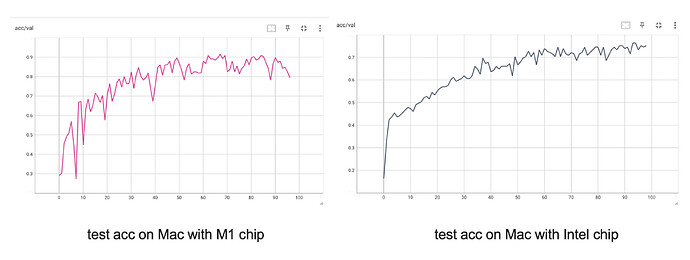

I ran the same code (with the same random seed) to train a resnet18 model for image classification on two MacBooks, one with an Intel chip and one with an M1 chip, but got very different loss and accuracy. For example, the model's best accuracy was 94% on the M1 machine but only 79% on the Intel machine. The initial model parameters are exactly the same on both devices, and the software versions (e.g. PyTorch, Python) are also the same. Does anyone know what is causing this?