- I created a CNN model to implement a paper on removing atmospheric turbulence. The model code is as follows:

import torch
from torch.nn import BatchNorm2d, Conv2d, ReLU

class Net(torch.nn.Module):
    _kernel_size = 5
    _stride = 1
    _padding = 2
    _outChannels = 64
    _inChannels = 64

    def __init__(self):
        super(Net, self).__init__()
        self.cnn_Inputlayer = Conv2d(3, self._outChannels, kernel_size=self._kernel_size, stride=self._stride, padding=self._padding)
        self.relu = ReLU(inplace=True)
        self.cnn_layers = Conv2d(self._inChannels, self._outChannels, kernel_size=self._kernel_size, stride=self._stride, padding=self._padding)
        self.batch = BatchNorm2d(64)
        self.cnn_OutputLayer = Conv2d(self._inChannels, 3, kernel_size=self._kernel_size, stride=self._stride, padding=self._padding)

    # Defining the forward pass
    def forward(self, x):
        x = self.relu(self.cnn_Inputlayer(x))
        # for i in range(1,15):
        x = self.relu(self.batch(self.cnn_layers(x)))
        x = self.relu(self.batch(self.cnn_layers(x)))
        x = self.relu(self.batch(self.cnn_layers(x)))
        x = self.relu(self.batch(self.cnn_layers(x)))
        x = self.relu(self.batch(self.cnn_layers(x)))
        x = self.relu(self.batch(self.cnn_layers(x)))
        x = self.relu(self.batch(self.cnn_layers(x)))
        x = self.relu(self.batch(self.cnn_layers(x)))
        x = self.relu(self.batch(self.cnn_layers(x)))
        x = self.relu(self.batch(self.cnn_layers(x)))
        x = self.relu(self.batch(self.cnn_layers(x)))
        x = self.relu(self.batch(self.cnn_layers(x)))
        x = self.relu(self.batch(self.cnn_layers(x)))
        x = self.relu(self.batch(self.cnn_layers(x)))
        x = self.relu(self.batch(self.cnn_layers(x)))
        x = self.cnn_OutputLayer(x)
        return x

When I print the model, I get:

Net(
  (cnn_Inputlayer): Conv2d(3, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (relu): ReLU(inplace=True)
  (cnn_layers): Conv2d(64, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (batch): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (cnn_OutputLayer): Conv2d(64, 3, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
)

Why don't I get the full model with 17 layers? I can see only three conv layers: input, CNN, and output. There should be 15 CNN layers.
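A likely explanation, offered as a sketch rather than a confirmed fix: `print(model)` lists the submodules registered in `__init__`, not the calls made in `forward`. A single `cnn_layers` attribute called 15 times is therefore still one layer with one shared set of weights. Assuming the intent is 15 independent conv + batch-norm + ReLU blocks, each block has to be constructed separately, for example inside an `nn.Sequential`. The parameter names `channels`, `kernel_size`, and `num_blocks` below are illustrative and not taken from the paper.

```python
import torch
from torch import nn


class Net(nn.Module):
    """Sketch: input conv, 15 independent conv+BN+ReLU blocks, output conv."""

    def __init__(self, channels=64, kernel_size=5, num_blocks=15):
        super().__init__()
        padding = kernel_size // 2  # keeps the spatial size when stride is 1
        self.cnn_Inputlayer = nn.Conv2d(3, channels, kernel_size, stride=1, padding=padding)
        self.relu = nn.ReLU(inplace=True)
        # Each iteration constructs new modules, so every block owns its weights.
        self.cnn_layers = nn.Sequential(*[
            nn.Sequential(
                nn.Conv2d(channels, channels, kernel_size, stride=1, padding=padding),
                nn.BatchNorm2d(channels),
                nn.ReLU(inplace=True),
            )
            for _ in range(num_blocks)
        ])
        self.cnn_OutputLayer = nn.Conv2d(channels, 3, kernel_size, stride=1, padding=padding)

    def forward(self, x):
        x = self.relu(self.cnn_Inputlayer(x))
        x = self.cnn_layers(x)
        return self.cnn_OutputLayer(x)


model = Net()
print(model)  # now lists every block separately

# Count the registered convolution layers: 1 input + 15 middle + 1 output.
num_convs = sum(isinstance(m, nn.Conv2d) for m in model.modules())
out_shape = tuple(model(torch.randn(1, 3, 16, 16)).shape)
```

With this layout the printed model shows all 15 middle blocks, and each one has its own `BatchNorm2d` running statistics instead of sharing a single `batch` module.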

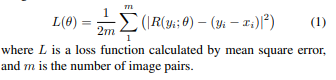

- For this I also have to compute the loss function shown in the image. Can this be calculated using simple MSE, or do I have to implement a custom loss? If I have to implement a custom one, how can I do that?
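Whether the paper's loss reduces to plain MSE depends on the formula in the image, which is not reproduced in the text here. As a hedged sketch: if it is the mean of squared differences between the prediction and the target, `torch.nn.functional.mse_loss` (or `nn.MSELoss`) should be enough. Otherwise, a custom loss in PyTorch can be any differentiable expression built from tensor operations. The function name `custom_mse` below is hypothetical, and it reimplements MSE by hand only to show the pattern.

```python
import torch
import torch.nn.functional as F


def custom_mse(pred, target):
    # Mean of squared per-element differences, written out by hand.
    # Replace this expression with the paper's formula if it differs.
    return ((pred - target) ** 2).mean()


torch.manual_seed(0)
pred = torch.randn(2, 3, 8, 8, requires_grad=True)  # stand-in for model output
target = torch.randn(2, 3, 8, 8)                    # stand-in for ground truth

loss = custom_mse(pred, target)
loss.backward()  # autograd derives the gradient; no manual backward is needed

builtin = F.mse_loss(pred, target)  # built-in equivalent, default mean reduction
```

Because the loss is composed of ordinary tensor operations, autograd tracks it automatically, so extra terms or weights can be added to the expression without writing a backward pass.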

The paper I am implementing is here