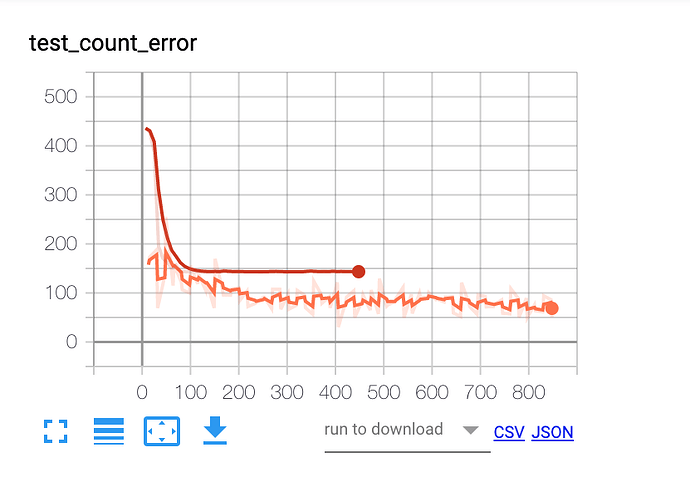

I have two models, both run with the same parameters (epochs=50, batch size=16). When I compare them, one seems to run for 965 steps and the other for 395. I would expect both to run a similar number of steps. Any idea why this would be the case? I have attached a copy of the graph.

Is the model clever enough to stop once the training value isn’t changing anymore?

The runtime difference might come from:

- different datasets and thus a different number of samples

- different print logic in your code

- different training logic (including early stopping)

- …
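To make the first three causes concrete, here is a minimal Python sketch. It is not the original poster's code (which wasn't shared), and the dataset sizes, patience value, and logging interval are hypothetical. It assumes one optimizer step per batch. For a map-style dataset, a PyTorch `DataLoader` reports `len(loader)` as `ceil(N / batch_size)`, or `floor(N / batch_size)` with `drop_last=True`. So with identical `epochs` and `batch_size`, the total step count still depends on the number of samples, on whether training stops early, and on how often steps are logged.

```python
import math


def steps_per_epoch(num_samples: int, batch_size: int, drop_last: bool = False) -> int:
    """Batches (optimizer steps) per epoch, matching len(DataLoader)
    for a map-style dataset."""
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)


def total_steps(num_samples: int, batch_size: int, epochs: int, drop_last: bool = False) -> int:
    """Steps for a full run with no early stopping."""
    return epochs * steps_per_epoch(num_samples, batch_size, drop_last)


def steps_with_early_stopping(val_losses, steps_per_ep: int, patience: int) -> int:
    """Steps actually run under a simple (hypothetical) early-stopping rule:
    stop after `patience` consecutive epochs without a new best validation loss."""
    best = float("inf")
    bad_epochs = 0
    steps = 0
    for loss in val_losses:  # one validation loss per epoch
        steps += steps_per_ep
        if loss < best:
            best = loss
            bad_epochs = 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                break
    return steps


def logged_points(total: int, log_every: int) -> int:
    """Points a logger records if it logs when step % log_every == 0
    (steps counted from 1)."""
    return total // log_every


if __name__ == "__main__":
    epochs, batch_size = 50, 16
    # Hypothetical dataset sizes for the two models
    print(total_steps(1000, batch_size, epochs))  # 3150
    print(total_steps(400, batch_size, epochs))   # 1250

    # Hypothetical validation curve that plateaus after epoch 3
    losses = [1.0, 0.8, 0.7, 0.7, 0.71, 0.72] + [0.72] * 44
    print(steps_with_early_stopping(losses, steps_per_epoch(400, batch_size), patience=3))  # 150

    # Logging every 10 steps shrinks what the plot's x-axis shows
    print(logged_points(1250, 10))  # 125
```

With these made-up numbers, the same `epochs=50, batch_size=16` setting gives 3150 versus 1250 steps from dataset size alone, early stopping cuts the second run to 150 steps, and a logging interval changes how many points end up in the plot.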

Without seeing the code, I can only speculate.


@ptrblck Many thanks once more for your help. The points you mentioned helped me pinpoint the issue. Thanks a lot.