How can I adjust the weights of a model with two outputs like this one?

This is how I implemented it:

```python
import torch
import torch.nn as nn

# ConvNetContractingPath and ConvNetExpansivePath are my encoder/decoder modules, defined elsewhere (not shown)


class UNet(nn.Module):
    def __init__(self):
        super(UNet, self).__init__()
        self.down = ConvNetContractingPath()
        self.up = ConvNetExpansivePath()
        # Output head 1: 16-channel logits for every pixel
        self.shuffle_layer = nn.Sequential(
            nn.Conv2d(in_channels=16, out_channels=16, kernel_size=1),
            nn.BatchNorm2d(16)
        )
        # Output head 2: 4-channel logits on a coarse 4x4 grid
        self.rotation_layer = nn.Sequential(
            nn.MaxPool2d(2, stride=2),
            nn.Conv2d(in_channels=16, out_channels=4, kernel_size=28, stride=28),
            nn.BatchNorm2d(4)
        )

    def forward(self, x):
        conv5, conv4, conv3, conv2, conv1 = self.down(x)
        up_conv9 = self.up(conv5, conv4, conv3, conv2, conv1)
        # Both heads read the same decoder output
        shuffle_output = self.shuffle_layer(up_conv9)
        rotation_output = self.rotation_layer(up_conv9)
        return shuffle_output, rotation_output
```
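To make the two output shapes concrete, here is a minimal sketch that runs only the two heads on a random tensor instead of the full U-Net. It assumes (this is not stated in the post) that the decoder output `up_conv9` has shape `[B, 16, 224, 224]`, which matches the 16 input channels of both heads and the 224x224 label maps; the batch size of 2 is arbitrary.

```python
import torch
import torch.nn as nn

# The same two heads as in UNet; the U-Net trunk is replaced by a random tensor.
# Assumption: up_conv9 has shape [B, 16, 224, 224].
shuffle_layer = nn.Sequential(
    nn.Conv2d(in_channels=16, out_channels=16, kernel_size=1),
    nn.BatchNorm2d(16),
)
rotation_layer = nn.Sequential(
    nn.MaxPool2d(2, stride=2),  # 224x224 -> 112x112
    nn.Conv2d(in_channels=16, out_channels=4, kernel_size=28, stride=28),  # 112x112 -> 4x4
    nn.BatchNorm2d(4),
)

up_conv9 = torch.randn(2, 16, 224, 224)  # stand-in for the decoder output
shuffle_output = shuffle_layer(up_conv9)
rotation_output = rotation_layer(up_conv9)

print(shuffle_output.shape)   # torch.Size([2, 16, 224, 224])
print(rotation_output.shape)  # torch.Size([2, 4, 4, 4])
```

Under that assumption, the rotation head produces 4 logits for each cell of a 4x4 grid, which lines up with `rot_labels` of shape `[batch size, 4, 4]`.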

I tried the approach from A model with multiple outputs - #2 by ptrblck: compute the loss for each output, sum the losses, and optimize the model on that sum.

However, after 100 epochs the loss value barely changed.
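Summing the losses before calling `backward()` gives the same gradients as backpropagating each loss separately, because autograd accumulates gradients into `.grad`. A minimal sketch with a single shared parameter and two hypothetical toy losses (`loss_a`, `loss_b` are illustrative, not from the post):

```python
import torch

torch.manual_seed(0)
w = torch.randn(3, requires_grad=True)  # a parameter shared by both losses
x = torch.randn(3)


def loss_a():
    return (w * x).sum() ** 2


def loss_b():
    return (w ** 2).sum()


# 1) Backpropagate the sum of the two losses
(loss_a() + loss_b()).backward()
grad_sum = w.grad.clone()

# 2) Backpropagate each loss on its own; .grad accumulates across calls
w.grad = None
loss_a().backward()
loss_b().backward()
grad_separate = w.grad.clone()

print(torch.allclose(grad_sum, grad_separate))  # True
```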

```python
import torch
import torch.nn as nn
from torch.optim.lr_scheduler import ReduceLROnPlateau

criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=0.0001)
# Note: scheduler.step(...) is never called in the loop below, so the learning rate is never reduced
scheduler = ReduceLROnPlateau(optimizer, mode='min', factor=0.5, patience=15, threshold=0.00001)

for epoch in range(num_epochs):
    print(f'Epoch: {epoch+1}/{num_epochs}:')
    for i, (images, shfl_labels, rot_labels) in enumerate(train_loader):
        # Original shapes: images      [batch size, 3, 224, 224]
        #                  shfl_labels [batch size, 224, 224]
        #                  rot_labels  [batch size, 4, 4]

        # Load data onto the GPU
        images = images.to(device)
        shfl_labels = shfl_labels.to(device)
        rot_labels = rot_labels.to(device)

        # Forward pass: one loss per output, summed into a single scalar
        shfl_output, rot_output = model(images)
        loss_shfl = criterion(shfl_output, shfl_labels.long())
        loss_rot = criterion(rot_output, rot_labels.long())
        loss = loss_shfl + loss_rot

        # Backward pass and optimize
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        if (i + 1) % 25 == 0:
            print(f'\t Step: {i+1}/{n_total_steps}, Train_loss: {loss.item():.4f}')
```
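`nn.CrossEntropyLoss` accepts logits of shape `[B, C, H, W]` with integer class-index targets of shape `[B, H, W]`, which is the pattern both losses in the loop rely on. A minimal shape check with random data; the label ranges (0..15 for the shuffle labels, 0..3 for the rotation labels) are assumptions inferred from the heads' channel counts:

```python
import torch
import torch.nn as nn

criterion = nn.CrossEntropyLoss()
B = 2

# Logits shaped like the two heads: [B, C, H, W]
shfl_output = torch.randn(B, 16, 224, 224)
rot_output = torch.randn(B, 4, 4, 4)

# Targets: one class index per spatial position, [B, H, W], dtype int64
# (assumed ranges: 0..15 for shuffle labels, 0..3 for rotation labels)
shfl_labels = torch.randint(0, 16, (B, 224, 224))
rot_labels = torch.randint(0, 4, (B, 4, 4))

loss_shfl = criterion(shfl_output, shfl_labels)
loss_rot = criterion(rot_output, rot_labels)
print(loss_shfl.item(), loss_rot.item())  # two scalar losses, averaged over all positions
```

The targets must be class indices of dtype `torch.long`, which is why the loop calls `.long()` on the labels.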

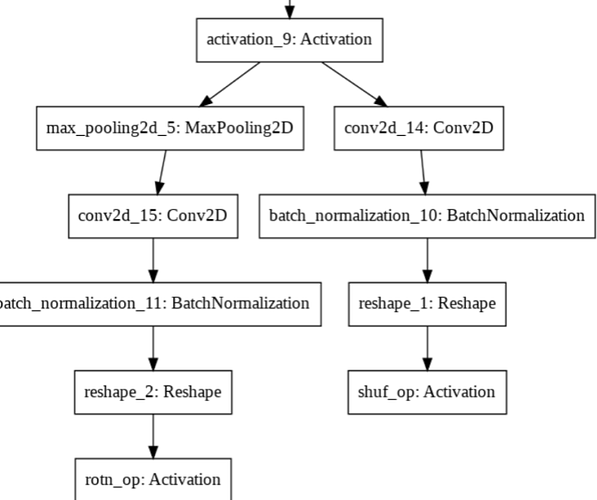

I don't understand how the weights of conv2d_15 and conv2d_14 can be optimized using the sum of the losses, since each of these layers only affects one of the two outputs.
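The relevant property of the summed loss can be checked directly: a parameter that feeds only one output receives exactly that output's gradient from the sum, because the other loss does not depend on it. A minimal sketch on a hypothetical toy model (a shared `trunk` plus two heads, not the UNet above), using `torch.autograd.grad`:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy model: shared trunk + two heads
trunk = nn.Linear(8, 8)
head_a = nn.Linear(8, 3)
head_b = nn.Linear(8, 5)
criterion = nn.CrossEntropyLoss()

x = torch.randn(4, 8)
y_a = torch.randint(0, 3, (4,))
y_b = torch.randint(0, 5, (4,))


def losses():
    h = trunk(x)
    return criterion(head_a(h), y_a), criterion(head_b(h), y_b)


# Gradient of head_b's weight from the summed loss
loss_a, loss_b = losses()
(g_sum,) = torch.autograd.grad(loss_a + loss_b, head_b.weight)

# Gradient of head_b's weight from its own loss only
loss_a, loss_b = losses()
(g_own,) = torch.autograd.grad(loss_b, head_b.weight)

# loss_b does not depend on head_a at all
loss_a, loss_b = losses()
(g_cross,) = torch.autograd.grad(loss_b, head_a.weight, allow_unused=True)

print(torch.allclose(g_sum, g_own))  # True
print(g_cross is None)               # True
```

Only the shared trunk receives a gradient from both losses; each head is driven solely by its own loss.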

Maybe I should use two optimizers instead of one?
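Since `model.parameters()` already includes the trunk and both heads, a single optimizer stepped on the summed loss updates all of them. A minimal sketch on a hypothetical two-head toy module (`TwoHead` is illustrative only) that records which parameters change after one Adam step:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)


class TwoHead(nn.Module):
    """Hypothetical toy model: shared trunk + two output heads."""

    def __init__(self):
        super().__init__()
        self.trunk = nn.Linear(8, 8)
        self.head_a = nn.Linear(8, 3)
        self.head_b = nn.Linear(8, 5)

    def forward(self, x):
        h = torch.tanh(self.trunk(x))
        return self.head_a(h), self.head_b(h)


model = TwoHead()
# One optimizer over model.parameters() covers the trunk and both heads
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
criterion = nn.CrossEntropyLoss()

x = torch.randn(4, 8)
y_a = torch.randint(0, 3, (4,))
y_b = torch.randint(0, 5, (4,))

before = {name: p.detach().clone() for name, p in model.named_parameters()}

out_a, out_b = model(x)
loss = criterion(out_a, y_a) + criterion(out_b, y_b)
optimizer.zero_grad()
loss.backward()
optimizer.step()

# For each parameter tensor: did the single optimizer step modify it?
changed = {name: not torch.equal(before[name], p) for name, p in model.named_parameters()}
print(changed)
```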