I am running inference using mmdetection (https://github.com/open-mmlab/mmdetection) and get `AttributeError: module 'torch.distributed' has no attribute 'is_initialized'` from this piece of code:

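```python
from mmdet.apis import init_detector, inference_detector, show_result_pyplot

# Build Faster R-CNN from its config file and load the pretrained checkpoint onto the GPU
model = init_detector("faster_rcnn_r50_fpn_1x.py", "faster_rcnn_r50_fpn_1x_20181010-3d1b3351.pth", device='cuda:0')

img = 'intersection_unlabeled/frame0.jpg'

# Run detection on a single image, then plot the boxes with the model's class names
result = inference_detector(model, img)
show_result_pyplot(img, result, model.CLASSES)
```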

And the full error log is:

```
Traceback (most recent call last):
  File "C:/Users/sarim/PycharmProjects/thesis/pytorch_learning.py", line 355, in <module>
    test()
  File "C:/Users/sarim/PycharmProjects/thesis/pytorch_learning.py", line 338, in test
    model = init_detector("faster_rcnn_r50_fpn_1x.py", "faster_rcnn_r50_fpn_1x_20181010-3d1b3351.pth", device='cuda:0')
  File "C:\Users\sarim\PycharmProjects\thesis\mmdetection\mmdet\apis\inference.py", line 36, in init_detector
    checkpoint = load_checkpoint(model, checkpoint)
  File "c:\users\sarim\appdata\local\programs\python\python37\lib\site-packages\mmcv\runner\checkpoint.py", line 188, in load_checkpoint
    load_state_dict(model, state_dict, strict, logger)
  File "c:\users\sarim\appdata\local\programs\python\python37\lib\site-packages\mmcv\runner\checkpoint.py", line 96, in load_state_dict
    rank, _ = get_dist_info()
  File "c:\users\sarim\appdata\local\programs\python\python37\lib\site-packages\mmcv\runner\utils.py", line 21, in get_dist_info
    initialized = dist.is_initialized()
AttributeError: module 'torch.distributed' has no attribute 'is_initialized'
```

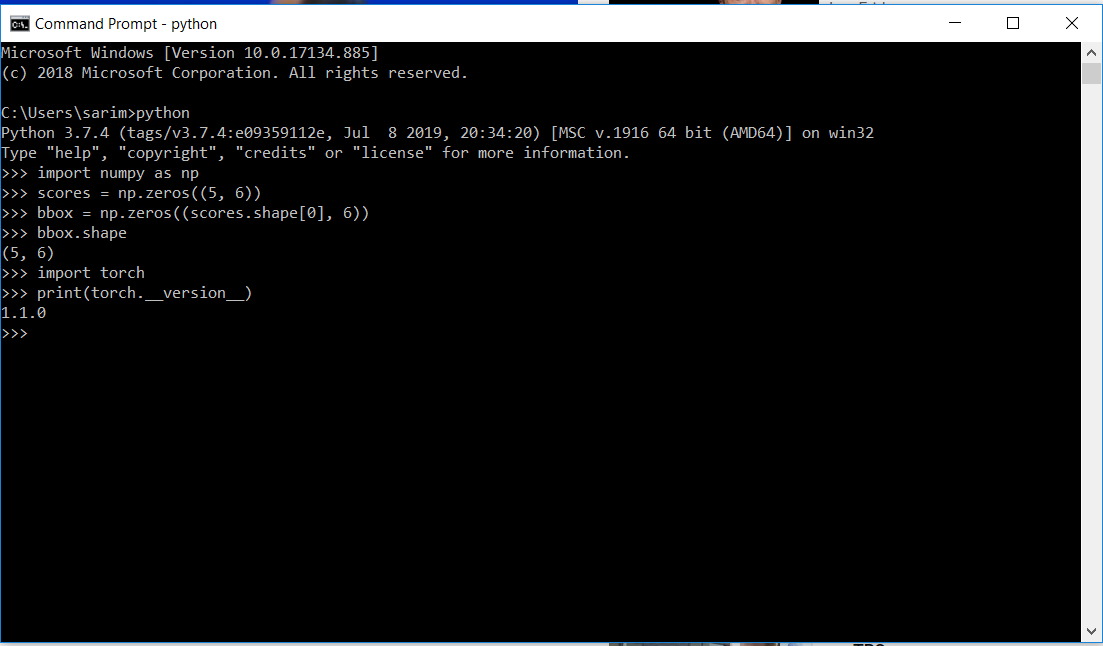

My PyTorch version is 1.1.0, running under Python 3.7 on Windows.
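A minimal sketch for narrowing this down, assuming the cause is a PyTorch build compiled without distributed support (PyTorch 1.1.0 on Windows, going by the paths above). In such builds `torch.distributed.is_available()` returns `False` and functions like `is_initialized()` are not defined on the module. `dist_is_initialized` and `safe_get_dist_info` are hypothetical helpers, not part of mmcv; they return the same `(rank, world_size)` pair that mmcv's `get_dist_info()` does, but fall back to `(0, 1)` instead of raising.

```python
import types


def dist_is_initialized(dist_module):
    """True only if distributed support is compiled in AND a process group exists.

    getattr() is used because builds without distributed support (assumed here to
    include PyTorch 1.1.0 on Windows) do not define is_initialized() at all.
    """
    is_available = getattr(dist_module, "is_available", lambda: False)
    if not is_available():
        return False
    is_initialized = getattr(dist_module, "is_initialized", None)
    return bool(is_initialized()) if is_initialized is not None else False


def safe_get_dist_info(dist_module):
    """Hypothetical stand-in for mmcv's get_dist_info(): (rank, world_size), default (0, 1)."""
    if dist_is_initialized(dist_module):
        return dist_module.get_rank(), dist_module.get_world_size()
    # Single-process fallback: rank 0 in a "world" of one process
    return 0, 1


if __name__ == "__main__":
    try:
        import torch.distributed as dist
    except ImportError:
        # No torch installed: behave like a build without distributed support
        dist = types.SimpleNamespace()
    print("distributed available:", getattr(dist, "is_available", lambda: False)())
    print("rank, world_size:", safe_get_dist_info(dist))
```

If the first line prints `False`, that would be consistent with the traceback above, where mmcv calls `dist.is_initialized()` unconditionally.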