Hi,

I was following the PyTorch tutorial on DDP and ran into a logging problem on an HPC cluster. The code is the same as the GitHub example: examples/distributed/ddp-tutorial-series/multigpu.py in the pytorch/examples repository.

The job was submitted to the HPC with sbatch using the following script:

    #!/bin/bash
    #SBATCH --job-name=testing    # Job name
    #SBATCH --output=output.log   # Output file name
    #SBATCH --error=error.log     # Error file name
    #SBATCH --partition=gpu       # Partition
    #SBATCH --gres=gpu:4          # GPU resources, requesting 4 GPUs
    #SBATCH --ntasks=1            # Number of tasks or processes
    #SBATCH --nodes=1             # Number of nodes

    # Load necessary modules or activate virtual environment
    module load cuda-toolkit/11.6.2
    module load python/3.8.6

    # Actual command to be executed
    python torch_testing.py

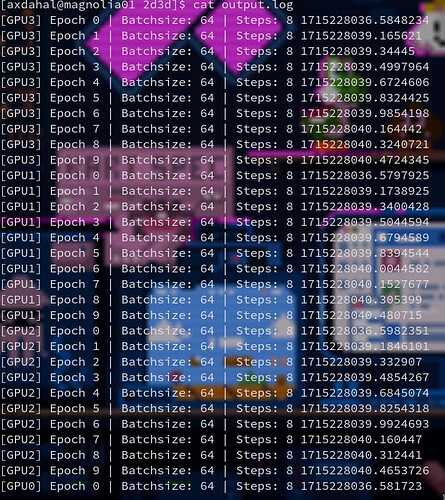

The problem shows up in output.log, where mp.spawn() appears to run all the epochs on one GPU before moving on to the next:

[GPU2] Epoch 0 | Batchsize: 64 | Steps: 8

[GPU2] Epoch 1 | Batchsize: 64 | Steps: 8

[GPU2] Epoch 2 | Batchsize: 64 | Steps: 8

[GPU2] Epoch 3 | Batchsize: 64 | Steps: 8

[GPU2] Epoch 4 | Batchsize: 64 | Steps: 8

[GPU2] Epoch 5 | Batchsize: 64 | Steps: 8

[GPU2] Epoch 6 | Batchsize: 64 | Steps: 8

[GPU2] Epoch 7 | Batchsize: 64 | Steps: 8

[GPU2] Epoch 8 | Batchsize: 64 | Steps: 8

[GPU2] Epoch 9 | Batchsize: 64 | Steps: 8

[GPU3] Epoch 0 | Batchsize: 64 | Steps: 8

[GPU3] Epoch 1 | Batchsize: 64 | Steps: 8

[GPU3] Epoch 2 | Batchsize: 64 | Steps: 8

[GPU3] Epoch 3 | Batchsize: 64 | Steps: 8

[GPU3] Epoch 4 | Batchsize: 64 | Steps: 8

[GPU3] Epoch 5 | Batchsize: 64 | Steps: 8

[GPU3] Epoch 6 | Batchsize: 64 | Steps: 8

[GPU3] Epoch 7 | Batchsize: 64 | Steps: 8

[GPU3] Epoch 8 | Batchsize: 64 | Steps: 8

[GPU3] Epoch 9 | Batchsize: 64 | Steps: 8

[GPU1] Epoch 0 | Batchsize: 64 | Steps: 8

[GPU1] Epoch 1 | Batchsize: 64 | Steps: 8

[GPU1] Epoch 2 | Batchsize: 64 | Steps: 8

[GPU1] Epoch 3 | Batchsize: 64 | Steps: 8

[GPU1] Epoch 4 | Batchsize: 64 | Steps: 8

[GPU1] Epoch 5 | Batchsize: 64 | Steps: 8

[GPU1] Epoch 6 | Batchsize: 64 | Steps: 8

[GPU1] Epoch 7 | Batchsize: 64 | Steps: 8

[GPU1] Epoch 8 | Batchsize: 64 | Steps: 8

[GPU1] Epoch 9 | Batchsize: 64 | Steps: 8

[GPU0] Epoch 0 | Batchsize: 64 | Steps: 8

Epoch 0 | Training checkpoint saved at checkpoint.pt

[GPU0] Epoch 1 | Batchsize: 64 | Steps: 8

[GPU0] Epoch 2 | Batchsize: 64 | Steps: 8

Epoch 2 | Training checkpoint saved at checkpoint.pt

[GPU0] Epoch 3 | Batchsize: 64 | Steps: 8

[GPU0] Epoch 4 | Batchsize: 64 | Steps: 8

Epoch 4 | Training checkpoint saved at checkpoint.pt

[GPU0] Epoch 5 | Batchsize: 64 | Steps: 8

[GPU0] Epoch 6 | Batchsize: 64 | Steps: 8

Epoch 6 | Training checkpoint saved at checkpoint.pt

[GPU0] Epoch 7 | Batchsize: 64 | Steps: 8

[GPU0] Epoch 8 | Batchsize: 64 | Steps: 8

Epoch 8 | Training checkpoint saved at checkpoint.pt

[GPU0] Epoch 9 | Batchsize: 64 | Steps: 8

I set the code from the GitHub example to save a checkpoint every 2 epochs and to run for a total of 10 epochs.

Any insight into why mp.spawn() behaves this way, or whether there is a way to fix this on the HPC, would be greatly appreciated.
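One possibility that may be worth ruling out is output buffering rather than mp.spawn() itself. When stdout is redirected to a file (as with --output=output.log), Python block-buffers it, and each spawned process has its own buffer that is typically written out only when it fills or the process exits. That alone could make the log look sequential even if the GPUs ran concurrently. The sketch below is not the tutorial's code; it is a standalone illustration, and the names CHILD and bytes_on_disk_after_print are hypothetical. It launches a child Python process with stdout pointed at a file and has the child report how many bytes have reached that file right after a print.

```python
import os
import subprocess
import sys
import tempfile

# Child program (hypothetical, for illustration only): print one line, then
# report on stderr how many bytes have reached the file behind stdout so far.
CHILD = """
import os, sys
print("epoch 0", flush={flush})
sys.stderr.write(str(os.fstat(sys.stdout.fileno()).st_size))
"""


def bytes_on_disk_after_print(flush: bool) -> int:
    """Run CHILD with stdout redirected to a file; return the size it observed."""
    # Drop PYTHONUNBUFFERED so the child uses Python's default buffering.
    env = {k: v for k, v in os.environ.items() if k != "PYTHONUNBUFFERED"}
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "output.log")
        with open(path, "w") as log:
            result = subprocess.run(
                [sys.executable, "-c", CHILD.format(flush=flush)],
                stdout=log,
                stderr=subprocess.PIPE,
                text=True,
                env=env,
                check=True,
            )
    # The size is the last thing the child wrote to stderr.
    return int(result.stderr.split()[-1])


if __name__ == "__main__":
    print("without flush:", bytes_on_disk_after_print(False))
    print("with flush:   ", bytes_on_disk_after_print(True))
```

If buffering does turn out to be the cause here, adding flush=True to the print calls in the training script, or launching it with python -u torch_testing.py, should let the lines from different GPUs interleave in output.log. That is an assumption about this setup; the log alone does not confirm it.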