I have an MSE loss computed between the ground-truth image and the generated image.

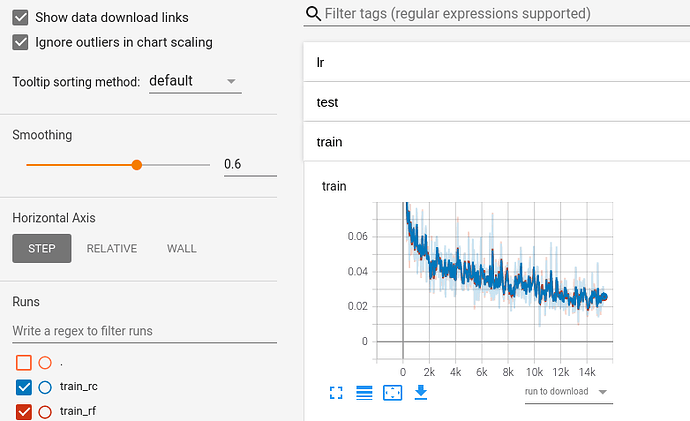

The loss is decreasing/converging, but very slowly (see the plot above). Any suggestions for tweaking the optimizer? I am currently using the Adam optimizer with lr=1e-5.
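For reference, a minimal sketch of the setup described above, assuming a PyTorch image-to-image model. The small `nn.Sequential` generator, the tensor shapes and the random data are hypothetical placeholders, since the actual model isn't shown. It computes the pixel-wise MSE between the generated and ground-truth images with `F.mse_loss` and takes one Adam step at lr=1e-5.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)  # reproducible init and dummy data

# Hypothetical stand-in for the image generator; the real model is not shown in the thread.
model = nn.Sequential(
    nn.Conv2d(3, 16, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(16, 3, kernel_size=3, padding=1),
)

# Adam with the learning rate from the question (PyTorch's default for Adam is 1e-3).
optimizer = torch.optim.Adam(model.parameters(), lr=1e-5)


def train_step(inputs: torch.Tensor, target: torch.Tensor) -> float:
    """Run one optimisation step and return the MSE loss as a Python float."""
    optimizer.zero_grad()
    generated = model(inputs)
    # Pixel-wise mean squared error between the generated and ground-truth images.
    loss = F.mse_loss(generated, target)
    loss.backward()
    optimizer.step()
    return loss.item()


inputs = torch.rand(4, 3, 32, 32)  # dummy batch of four 32x32 RGB images
target = torch.rand(4, 3, 32, 32)  # dummy ground-truth images
print(train_step(inputs, target))
```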

Your model is converging slowly because of your learning rate (1e-5 == 0.00001), so play around with it. I find the default (1e-3 for Adam in PyTorch) works fine for most cases.

Try 1e-2.

Alternatively, you can use a learning rate that changes over time, as discussed here.
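One way to "play around" with the learning rate is a quick sweep: train the same model from the same initialisation at several values and compare the final loss. A sketch, assuming PyTorch and a toy linear-regression problem (hypothetical random data, not the image task), so the numbers only illustrate the trend:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def final_loss(lr: float, steps: int = 200) -> float:
    """Train a tiny linear model with Adam at the given lr and return the last MSE loss."""
    torch.manual_seed(0)  # identical data and init for every lr, so runs are comparable
    x = torch.randn(256, 8)
    true_w = torch.randn(8, 1)
    y = x @ true_w  # noiseless linear targets
    model = nn.Linear(8, 1)
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    for _ in range(steps):
        optimizer.zero_grad()
        loss = F.mse_loss(model(x), y)
        loss.backward()
        optimizer.step()
    return loss.item()


results = {lr: final_loss(lr) for lr in (1e-5, 1e-4, 1e-3, 1e-2)}
for lr, loss in results.items():
    print(f"lr={lr:.0e}  final MSE={loss:.4f}")
```

On a problem this small, Adam moves each weight by roughly `lr` per step, so lr=1e-5 barely changes anything in 200 steps. On a real image model the largest values may diverge instead, as reported later in this thread, so a sweep is mainly a cheap way to find the largest stable value.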

I tried learning rates higher than 1e-5, but they lead to gradient explosion. If you look at the plot, the error decreases at a good rate for the first 2k iterations, but after that the rate of decrease slows down, and by 10k+ iterations it has almost stalled and is not decreasing at all.

Yeah, I will try adapting the learning rate. Is there a guide on how to adapt it?

Looking at the plot again, your model looks to be about 97-98% accurate. Is that correct?

I’m not aware of any guides that give a comprehensive overview, but you should find other discussion boards that explore this topic, such as the link in my previous reply.
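Since the loss curve plateaus rather than diverging, one option from PyTorch's scheduler module is `ReduceLROnPlateau`, which lowers the learning rate when a monitored value stops improving. A minimal sketch, assuming PyTorch; the placeholder model, the starting lr of 1e-4, `factor`, `patience` and the simulated loss values are all illustrative assumptions, chosen to show when the reductions fire:

```python
import torch
import torch.nn as nn

model = nn.Linear(10, 1)  # hypothetical placeholder for the image model
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)

# Halve the learning rate whenever the monitored loss fails to improve
# for more than `patience` consecutive calls to scheduler.step(...).
scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(
    optimizer, mode="min", factor=0.5, patience=2
)

# Simulated per-epoch losses: improving at first, then flat (a plateau).
epoch_losses = [1.0, 0.8, 0.6, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5]
for epoch, loss in enumerate(epoch_losses):
    # In real training, run the epoch (forward, backward, optimizer.step()) here,
    # then pass the epoch's loss to the scheduler.
    scheduler.step(loss)
    print(epoch, loss, optimizer.param_groups[0]["lr"])
```

With `patience=2`, the learning rate is halved on the third consecutive non-improving call, so the flat tail of this list halves it twice (1e-4 to 5e-5 to 2.5e-5).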

Hey @aswamy,

The replies from @knoriy explain your situation better and are what you should try first. If you need something extra, you could look into learning rate schedulers.

- Beginner-friendly article on it.
- PyTorch documentation (scroll to the “How to adjust learning rate” header).
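The pattern in that documentation section is to call `scheduler.step()` once per epoch, after `optimizer.step()`. A sketch of that pattern with `ExponentialLR`, assuming PyTorch; the placeholder model, dummy data, starting lr of 1e-3 and `gamma=0.9` are illustrative, and the starting value would need to be one that does not blow up on your data:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
model = nn.Linear(8, 1)  # hypothetical placeholder model
x, y = torch.randn(64, 8), torch.randn(64, 1)  # dummy data

optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
# Multiply the learning rate by gamma after every epoch: lr_t = 1e-3 * 0.9**t.
scheduler = torch.optim.lr_scheduler.ExponentialLR(optimizer, gamma=0.9)

for epoch in range(10):
    optimizer.zero_grad()
    loss = F.mse_loss(model(x), y)
    loss.backward()
    optimizer.step()  # update the weights first...
    scheduler.step()  # ...then advance the schedule, once per epoch
    print(epoch, round(loss.item(), 4), scheduler.get_last_lr()[0])
```

Starting higher and decaying lets early epochs make fast progress while later epochs take smaller steps, which targets the slowdown after the first few thousand iterations.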

Yeah, correct! Thanks!

Without knowing what your task is, I would say that would be considered close to the state of the art.

Once your model gets close to these figures, in my experience it finds it hard to learn new features to optimise without overfitting to your dataset. Some reading material:

You may also want to learn about non-global minimum traps.