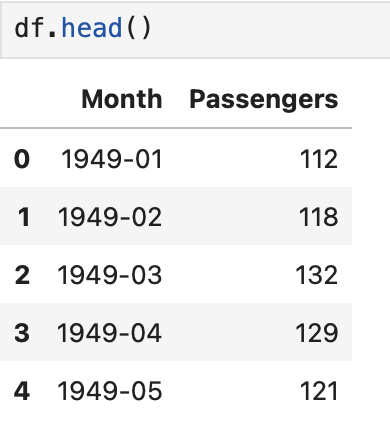

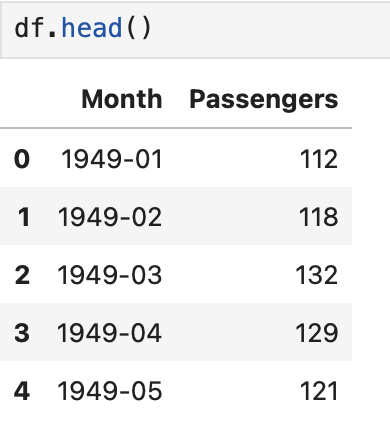

Hello, I have been working with PyTorch for a few days and I still have difficulty aligning the LSTM output with the linear layer input. The images above show the airline passengers case and the `df.head()` output.

Then the code:

```
import numpy as np
import torch
from sklearn.preprocessing import StandardScaler

# timeseries: 2-D array of shape (n_samples, 1) built from df (see df.head() above)
length = len(timeseries)
ratio = 0.70
length_train = int(length * ratio)

train = timeseries[0:length_train]
test = timeseries[length_train:]

# Fit the scaler on the training split only, then apply the same scaling everywhere
scaler = StandardScaler()
train = scaler.fit_transform(train)
test = scaler.transform(test)
timeseries = scaler.transform(timeseries)

seq_length = 4

def create_dataset(dataset, seq_length):
    """Transform a time series into a prediction dataset.

    Arguments:
    1. dataset: numpy array whose first dimension is the time step
    2. seq_length: size of the window used to make predictions
    """
    X = []
    y = []
    for i in range(len(dataset) - seq_length - 1):
        feature = dataset[i: i + seq_length]  # window of seq_length steps
        target = dataset[i + seq_length]      # the step right after the window
        X.append(feature)
        y.append(target)
    #print(X)
    #print(y)
    print('len(X): {}'.format(len(X)))
    print('len(y): {}'.format(len(y)))
    X = np.asarray(X)
    y = np.asarray(y)
    print('X array.shape: {}'.format(X.shape))
    print('y array.shape: {}'.format(y.shape))
    return X, y

X_ts, y_ts = create_dataset(timeseries, seq_length)
print('X_ts.shape: {}, y_ts.shape: {}'.format(X_ts.shape, y_ts.shape))
```

Output:

```
len(X): 139
len(y): 139
X array.shape: (139, 4, 1)
y array.shape: (139, 1)
X_ts.shape: (139, 4, 1), y_ts.shape: (139, 1)
```

```
X_train, y_train = create_dataset(train, seq_length)
print('X_train.shape: {}, y_train.shape: {}'.format(X_train.shape, y_train.shape))
```

Output:

```
len(X): 95
len(y): 95
X array.shape: (95, 4, 1)
y array.shape: (95, 1)
X_train.shape: (95, 4, 1), y_train.shape: (95, 1)
```

```
X_test, y_test = create_dataset(test, seq_length)

# torch.autograd.Variable is deprecated; plain float32 tensors are enough
X_ts = torch.tensor(X_ts, dtype=torch.float32)
y_ts = torch.tensor(y_ts, dtype=torch.float32)
X_train = torch.tensor(X_train, dtype=torch.float32)
y_train = torch.tensor(y_train, dtype=torch.float32)
X_test = torch.tensor(X_test, dtype=torch.float32)
y_test = torch.tensor(y_test, dtype=torch.float32)
```
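To check the windowing shapes independently of the real data, here is a minimal sketch. It reuses the loop logic of `create_dataset` (without the prints) on a hypothetical series of 144 evenly spaced values; the series length is an assumption, chosen because 144 - 4 - 1 gives the 139 windows printed above. Note that the `- 1` in the loop bound skips the last possible window: `range(len(dataset) - seq_length)` would yield 140 windows here.

```python
import numpy as np

def create_dataset(dataset, seq_length):
    """Slide a window of seq_length steps over dataset; the next step is the target."""
    X, y = [], []
    # Same loop bound as in the question, so the final possible window is skipped.
    for i in range(len(dataset) - seq_length - 1):
        X.append(dataset[i:i + seq_length])
        y.append(dataset[i + seq_length])
    return np.asarray(X), np.asarray(y)

# Hypothetical stand-in for the scaled series: 144 points in a single column.
series = np.arange(144, dtype=np.float32).reshape(-1, 1)
X, y = create_dataset(series, seq_length=4)

print(X.shape, y.shape)    # (139, 4, 1) (139, 1)
# Each target is the value immediately after its window.
print(X[0].ravel(), y[0])  # [0. 1. 2. 3.] [4.]
```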

The LSTM model looks like this:

```
import torch.nn as nn

input_size = 1
hidden_size_1 = 128
hidden_size_2 = 64
n_epochs = 200
counter = 0
best_loss = 100

class lstm_airflight(nn.Module):
    def __init__(self):
        super().__init__()
        self.lstm_1 = nn.LSTM(input_size=input_size, hidden_size=hidden_size_1, num_layers=1, batch_first=True)
        self.dropout_1 = nn.Dropout(0.2)
        self.lstm_2 = nn.LSTM(input_size=hidden_size_1, hidden_size=hidden_size_2, num_layers=1, batch_first=True)
        self.flat = nn.Flatten()

        # Linear layer expects dimensions to be batch, hidden_size
        in_features = hidden_size_2
        out_features = 32
        self.linear_1 = nn.Linear(in_features=in_features, out_features=out_features)
        self.dropout_2 = nn.Dropout(0.2)
        self.relu_1 = nn.ReLU()

        in_features = out_features
        out_features = 16
        self.linear_2 = nn.Linear(in_features=in_features, out_features=out_features)
        self.dropout_3 = nn.Dropout(0.2)
        self.relu_2 = nn.ReLU()

        in_features = out_features
        out_features = 1
        self.linear_3 = nn.Linear(in_features=in_features, out_features=out_features)

    def forward(self, x):
        x, notused = self.lstm_1(x)
        #print('lstm_1_output shape: {}, lstm_1_notused shape: {}'.format(x.shape, len(notused)))
        x = self.dropout_1(x)
        x, _ = self.lstm_2(x)
        #print('lstm_2_output shape: {}, lstm_2_notused shape: {}'.format(x.shape, len(_)))
        x = self.flat(x)
        x = self.linear_1(x)
        #print('linear_1_output shape: {}'.format(x.shape))
        x = self.dropout_2(x)
        x = self.relu_1(x)
        x = self.linear_2(x)
        #print('linear_2_output shape: {}'.format(x.shape))
        x = self.dropout_3(x)
        x = self.relu_2(x)
        #print('ReLU_2_output shape: {}'.format(x.shape))
        x = self.linear_3(x)
        #print('linear_3_output shape: {}'.format(x.shape))
        return x
```

```
model = lstm_airflight()
print(model)
```

Output of `print(model)`:

```
lstm_airflight(
  (lstm_1): LSTM(1, 128, batch_first=True)
  (dropout_1): Dropout(p=0.2, inplace=False)
  (lstm_2): LSTM(128, 64, batch_first=True)
  (flat): Flatten(start_dim=1, end_dim=-1)
  (linear_1): Linear(in_features=64, out_features=32, bias=True)
  (dropout_2): Dropout(p=0.2, inplace=False)
  (relu_1): ReLU()
  (linear_2): Linear(in_features=32, out_features=16, bias=True)
  (dropout_3): Dropout(p=0.2, inplace=False)
  (relu_2): ReLU()
  (linear_3): Linear(in_features=16, out_features=1, bias=True)
)
```
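The LSTM layer on its own shows how its output shape depends on the input shape. A minimal shape check, assuming a recent PyTorch version (where `nn.LSTM` accepts unbatched 2-D input) and random data in place of the real windows; `hidden_size=64` mirrors `lstm_2`, everything else is illustrative:

```python
import torch
from torch import nn

torch.manual_seed(0)
lstm = nn.LSTM(input_size=1, hidden_size=64, batch_first=True)
flat = nn.Flatten()  # default start_dim=1, as in the model above

# One window of shape (seq_length, input_size): treated as an unbatched sequence.
window = torch.randn(4, 1)
out_2d, _ = lstm(window)
print(out_2d.shape)          # torch.Size([4, 64])
print(flat(out_2d).shape)    # torch.Size([4, 64]): Flatten leaves a 2-D tensor unchanged

# The same window with an explicit batch dimension: (batch, seq_length, input_size).
out_3d, _ = lstm(window.unsqueeze(0))
print(out_3d.shape)          # torch.Size([1, 4, 64])
print(flat(out_3d).shape)    # torch.Size([1, 256]): 4 time steps x 64 features
print(out_3d[:, -1, :].shape)  # torch.Size([1, 64]): last time step only
```

With 2-D input, the 4 time steps occupy the first dimension, which the following `nn.Linear` layers treat as a batch, so they produce one output row per time step.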

Loss & optimizer:

```
from torch.optim import Adam

optimizer = Adam(model.parameters())
loss_fn = nn.MSELoss()
```

Training:

```
l_train = []
l_test = []
d_train = {}
d_test = {}
l_y_pred_tr = []
l_y_pred_t = []
#best_loss = 100
nb_loop = 0
path = '/Users/olivierdebeyssac/Python_LSTM/best_model.pt'

for epoch in range(n_epochs):
    model.train()
    for X, y in zip(X_train, y_train):
        nb_loop = nb_loop + 1
        y_pred = model(X)
        if nb_loop < 2:
            print('nb_loop: {}'.format(nb_loop))
            print('===========')
            print('nb_loop: {}'.format(nb_loop))
            print('X.shape: {}'.format(X.shape))
            print('y_pred.shape: {}, y: {}'.format(y_pred.shape, y.shape))
            print('===========')
        loss_train = loss_fn(y_pred, y)
        l_train.append(loss_train.detach().numpy())
        optimizer.zero_grad()
        loss_train.backward()
        optimizer.step()
    d_train[epoch] = np.mean(l_train)

    model.eval()
    for X, y in zip(X_test, y_test):
        with torch.no_grad():
            y_pred = model(X)
            loss_test = loss_fn(y_pred, y)
            l_test.append(loss_test.numpy())
    d_test[epoch] = np.mean(l_test)
```
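Iterating with `zip(X_train, y_train)` walks over the first dimension, so each `X` is a single `(4, 1)` window with no batch dimension. For comparison, a sketch of how `torch.utils.data.TensorDataset` and `DataLoader` yield windows that keep a batch dimension; the random tensors and `batch_size=16` are assumptions standing in for the real data:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical tensors with the same shapes as X_train and y_train above.
X_train = torch.randn(95, 4, 1)
y_train = torch.randn(95, 1)

loader = DataLoader(TensorDataset(X_train, y_train), batch_size=16, shuffle=True)

shapes = []
for X_batch, y_batch in loader:
    # Each batch keeps the leading batch dimension: (batch, seq_length, input_size).
    shapes.append((tuple(X_batch.shape), tuple(y_batch.shape)))

print(len(shapes))  # 6: five batches of 16 and a final batch of 15
print(shapes[0])    # ((16, 4, 1), (16, 1))
```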

Training output:

```
nb_loop: 1
nb_loop: 1
X.shape: torch.Size([4, 1])
y_pred.shape: torch.Size([4, 1]), y: torch.Size([1])
/Library/Frameworks/Python.framework/Versions/3.12/lib/python3.12/site-packages/torch/nn/modules/loss.py:535: UserWarning: Using a target size (torch.Size([1])) that is different to the input size (torch.Size([4, 1])). This will likely lead to incorrect results due to broadcasting. Please ensure they have the same size.
```

I can see that the MSE loss function receives `y_pred` with shape `[4, 1]` and `y` with shape `[1]`: a two-dimensional tensor versus a one-dimensional tensor. So `y_pred` holds 4 values while `y` holds only one.

I expected `y_pred` to be a single value (even if it is two-dimensional).
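The warning comes from broadcasting inside `nn.MSELoss`: the single target is compared with each of the 4 predictions and the squared errors are averaged. A small reproduction with made-up numbers (the values are hypothetical; only the shapes match the output above):

```python
import warnings

import torch
from torch import nn

loss_fn = nn.MSELoss()
y_pred = torch.tensor([[1.0], [2.0], [3.0], [4.0]])  # shape (4, 1), like the model output
y = torch.tensor([2.0])                              # shape (1,), like one target

with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    loss = loss_fn(y_pred, y)

# The target is broadcast against all 4 predictions:
# ((1-2)^2 + (2-2)^2 + (3-2)^2 + (4-2)^2) / 4 = 6 / 4 = 1.5
print(loss.item())      # 1.5
print(len(caught) > 0)  # True: the size-mismatch UserWarning was raised

# With matching shapes (one prediction for one target) nothing is broadcast.
loss_single = loss_fn(y_pred[-1], y)  # both have shape (1,)
print(loss_single.item())  # 4.0
```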

How can I solve this problem?

Many thanks for any advice and help!