When training with two GPUs, the run always crashes with `Segmentation fault (core dumped)`, and the training accuracy is much lower than with one GPU. When training with one GPU, there is no error and the accuracy is higher.

My multi-GPU code looks like this:

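```python
import torch.nn as nn
import torchvision.models as models
from torchvision.models import resnet50

original_model = models.resnet50(pretrained=True)   # ImageNet-pretrained weights
model = resnet50(pretrained=False)                   # same architecture, random init
model = nn.DataParallel(model, device_ids=[0, 1])   # wrap for GPUs 0 and 1
load_state_dict(model, original_model)               # my own helper (not shown): copies the pretrained weights into model
```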
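One thing worth ruling out, assuming the `load_state_dict(model, original_model)` helper copies weights by parameter name: `nn.DataParallel` stores the wrapped network as `.module`, so every key in the wrapper's `state_dict()` gains a `module.` prefix. A name-based copy from the unwrapped `original_model` would then match nothing, and the network would effectively train from random weights. In this sketch, `make_net()` is a hypothetical tiny stand-in for ResNet-50 (so it runs without downloading anything), and `load_then_wrap` / `load_into_wrapped` are illustrative names, not PyTorch APIs.

```python
import torch
import torch.nn as nn


def make_net() -> nn.Module:
    # Hypothetical small stand-in for resnet50 so the sketch runs without a download.
    return nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))


def load_then_wrap(pretrained: nn.Module, fresh: nn.Module) -> nn.DataParallel:
    # Copy weights while the key names still match ("0.weight", "0.bias", ...),
    # then wrap. strict=True (the default) raises on any missing/unexpected key.
    fresh.load_state_dict(pretrained.state_dict())
    return nn.DataParallel(fresh)


def load_into_wrapped(pretrained: nn.Module, wrapped: nn.DataParallel) -> None:
    # If the model is already wrapped, load into the inner module: the wrapper's
    # own state_dict keys carry an extra "module." prefix.
    wrapped.module.load_state_dict(pretrained.state_dict())


if __name__ == "__main__":
    original_model = make_net()
    model = nn.DataParallel(make_net())
    print(next(iter(original_model.state_dict())))  # 0.weight
    print(next(iter(model.state_dict())))           # module.0.weight

    # Loading into the wrapper directly with strict=False silently matches nothing.
    result = model.load_state_dict(original_model.state_dict(), strict=False)
    print(len(result.missing_keys), len(result.unexpected_keys))  # 4 4

    # Loading through .module copies every tensor.
    load_into_wrapped(original_model, model)
```

Loading before wrapping (or through `.module`) with the default `strict=True` makes a key mismatch fail loudly instead of silently leaving the random initialization in place.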

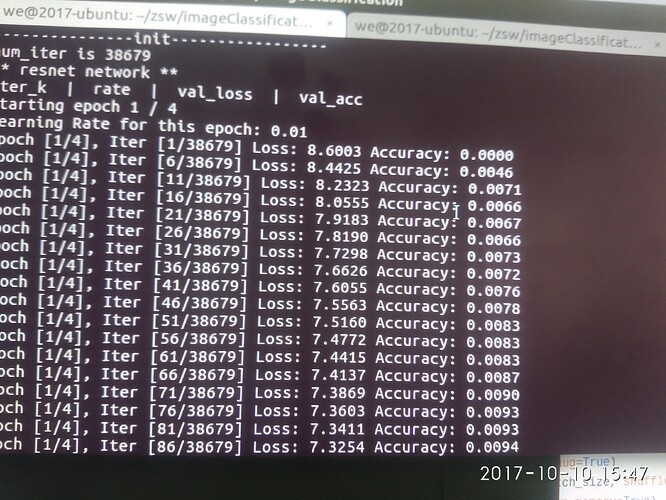

With two GPUs, the accuracy is:

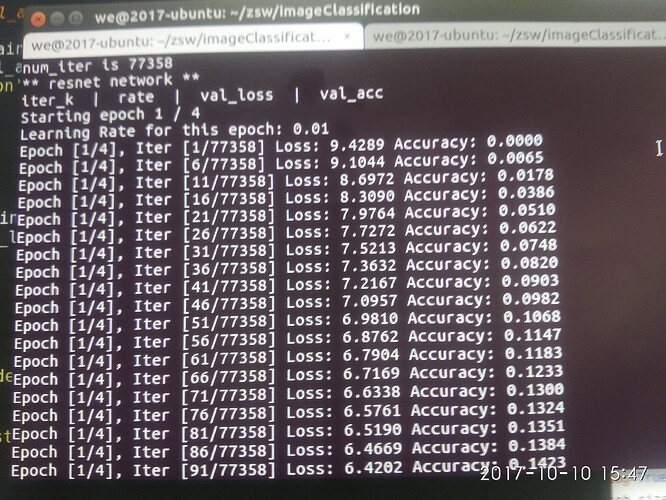

With one GPU, the accuracy is:

Then I tried another way to use multiple GPUs:

```python
import os

os.environ["CUDA_VISIBLE_DEVICES"] = "0,1"
```

But only one GPU is used.
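Setting `CUDA_VISIBLE_DEVICES` by itself only controls which GPUs the process can see; a plain model still runs on a single device unless it is wrapped, for example in `nn.DataParallel`. The variable also has to be set before CUDA is initialized in the process. A minimal sketch, where `wrap_for_visible_gpus` is a hypothetical helper name:

```python
import os

# Only controls which GPUs this process can see; it does not split work across them.
# Must be set before CUDA is initialized in this process.
os.environ["CUDA_VISIBLE_DEVICES"] = "0,1"

import torch
import torch.nn as nn


def wrap_for_visible_gpus(model: nn.Module) -> nn.Module:
    n_gpus = torch.cuda.device_count()  # counts only the visible devices
    if n_gpus > 1:
        # DataParallel splits each input batch across all visible GPUs.
        return nn.DataParallel(model).cuda()
    if n_gpus == 1:
        return model.cuda()
    return model  # CPU fallback when no GPU is visible


if __name__ == "__main__":
    net = wrap_for_visible_gpus(nn.Linear(4, 2))
    print(type(net).__name__, torch.cuda.device_count())
```

PyTorch's documentation also recommends `DistributedDataParallel` over `DataParallel` for multi-GPU training, even on a single machine.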

I then trained on one GPU without the pretrained weights and got accuracy similar to the two-GPU run. So it seems that in the two-GPU setup the pretrained weights (transfer learning) are not taking effect.
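A direct way to test that suspicion is to compare the model's weights against `original_model` right after the loading step. `fraction_loaded` below is a hypothetical helper, not a PyTorch API; it strips the `module.` prefix that `nn.DataParallel` adds and reports what fraction of the reference tensors appear unchanged in the model.

```python
import torch
import torch.nn as nn


def fraction_loaded(reference: nn.Module, model: nn.Module) -> float:
    """Fraction of reference tensors found, unchanged, in model's state_dict."""
    ref = reference.state_dict()
    # Strip the "module." prefix that nn.DataParallel adds to every key.
    cur = {
        (k[len("module."):] if k.startswith("module.") else k): v
        for k, v in model.state_dict().items()
    }
    matched = sum(
        1
        for k, v in ref.items()
        if k in cur and cur[k].shape == v.shape and torch.equal(cur[k].cpu(), v.cpu())
    )
    return matched / len(ref)


if __name__ == "__main__":
    pretrained = nn.Linear(4, 2)
    model = nn.DataParallel(nn.Linear(4, 2))
    print(fraction_loaded(pretrained, model))  # random init: should not match
    model.module.load_state_dict(pretrained.state_dict())
    print(fraction_loaded(pretrained, model))  # 1.0
```

A value well below 1.0 right after loading would confirm that the pretrained weights never reached the wrapped model.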

I am new to PyTorch and would appreciate any advice.

Thanks!