Hi,

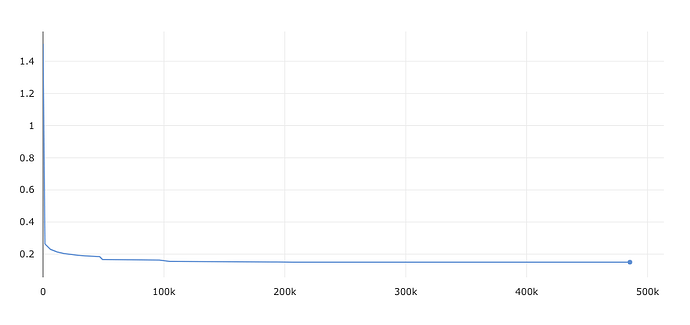

I am training a multi-class multi-label model with torch.nn.BCEWithLogitsLoss() on 8M data points for 10 epochs. I have 54 classes and a single image can have multiple labels. During training the loss decreases nice and decreasing:

However, when I look at my trained model's outputs for the last epoch, I see that the model is outputting only negative values. For example, for one sample with the following label:

target =

tensor([[0., 0., 1., 0., 0., 0., 0., 1., 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.,
         0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.,
         0., 0., 0., 1., 0., 0., 0., 0., 0., 0., 1., 0., 1., 0., 0., 0., 0., 0.]])

I get the following output from the model:

output =

tensor([[-1.2380, -2.3283, -2.3025, -2.1275, -2.1020, -2.3684, -3.4669, -3.4503,
         -2.1905, -1.8565, -3.4215, -3.5318, -3.5715, -4.3836, -4.5215, -6.2270,
         -3.8660, -3.7280, -4.6043, -4.7601, -9.5219, -9.4969, -9.4392, -8.0596,
         -6.0773, -5.7972, -4.2495, -4.4533, -4.2641, -4.1068, -4.9987, -4.9321,
         -7.9726, -7.4475, -4.8016, -5.6634, -6.3762, -6.0103, -6.7561, -3.3259,
         -3.8778, -6.7682, -6.5663, -4.0945, -3.0747, -5.5408, -5.6429, -5.9659,
         -5.8574, -7.6435, -7.8895, -6.6514, -6.5506, -5.0583]],
       device='cuda:0')

So if I apply a sigmoid to these outputs, every probability comes out below 0.5, and no class is predicted as positive, not even the ones labeled 1 in the target.
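
To make this concrete, here is a minimal sketch that applies a sigmoid to the logits above and checks which classes would be predicted. It uses only the Python standard library rather than torch so it runs anywhere. The 0.5 threshold and the variable names are assumptions for illustration and are not taken from the training code.

```python
import math

# Logits copied from the model output above (54 values, one per class).
logits = [
    -1.2380, -2.3283, -2.3025, -2.1275, -2.1020, -2.3684, -3.4669, -3.4503,
    -2.1905, -1.8565, -3.4215, -3.5318, -3.5715, -4.3836, -4.5215, -6.2270,
    -3.8660, -3.7280, -4.6043, -4.7601, -9.5219, -9.4969, -9.4392, -8.0596,
    -6.0773, -5.7972, -4.2495, -4.4533, -4.2641, -4.1068, -4.9987, -4.9321,
    -7.9726, -7.4475, -4.8016, -5.6634, -6.3762, -6.0103, -6.7561, -3.3259,
    -3.8778, -6.7682, -6.5663, -4.0945, -3.0747, -5.5408, -5.6429, -5.9659,
    -5.8574, -7.6435, -7.8895, -6.6514, -6.5506, -5.0583,
]

# Indices of the classes labeled 1 in the target above.
positive_classes = [2, 7, 17, 39, 46, 48]


def sigmoid(x: float) -> float:
    """Logistic function: maps a logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


probs = [sigmoid(x) for x in logits]

# Assumed decision threshold; a negative logit always maps below 0.5.
threshold = 0.5
predicted = [i for i, p in enumerate(probs) if p > threshold]

print(f"highest probability over all classes: {max(probs):.4f}")
print(f"classes predicted positive: {predicted}")
for i in positive_classes:
    print(f"true class {i}: logit = {logits[i]:.4f}, p = {probs[i]:.4f}")
```

Since every logit is negative, the list of predicted classes is empty, and the largest probability (for class 0, which is not even one of the true labels) is only about 0.22.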