I want to perform the multi-label regression. The idea is to just detect the presence of class objects in an input image. Each 224x224 sized image contains multiple objects from a total of six different classes. The label is a normalized count for each class i.e. class_i_instances_per_image / sum(all_instances_per_image) = 3/12 = 0.25 where i ∈ [0, 5]

target = tensor([[0.0588, 0.8235, 0.0882, 0.0000, 0.0000, 0.0294]], device='cuda:0', dtype=torch.float64)

Output from the last FC layer:

tensor([[-0.0649, 0.0636, -0.0299, -0.0651, -0.8145, -0.3030]], device='cuda:0', grad_fn=<AddmmBackward>)

Prediction from the F.softmax() :

output = tensor([[0.1841, 0.2093, 0.1906, 0.1840, 0.0870, 0.1451]], device='cuda:0', grad_fn=<SoftmaxBackward>)

Computation of loss using the above tensors results into:

criterion = torch.nn.CrossEntropyLoss().cuda()

loss = criterion(torch.nn.functional.softmax(output), target)

RuntimeError: Expected object of scalar type Long but got scalar type Double for argument #2 ‘target’ in call to _thnn_nll_loss_forward

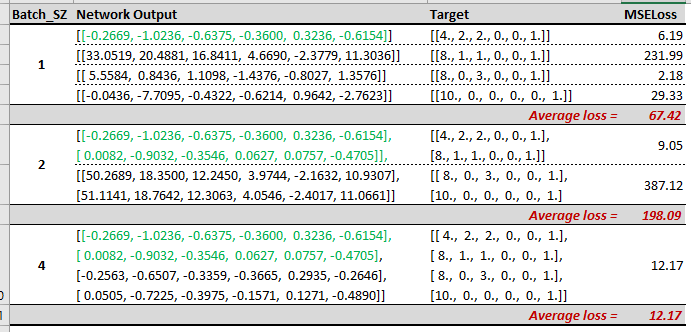

The suitable loss functions for regression problems are mean square error (MSE) or mean absolute error (MAE) . But using F.mse_loss() takes much time and returns inf values.

I will highly appreciate your comments/suggestions to make use of these soft-labels.