Hello,

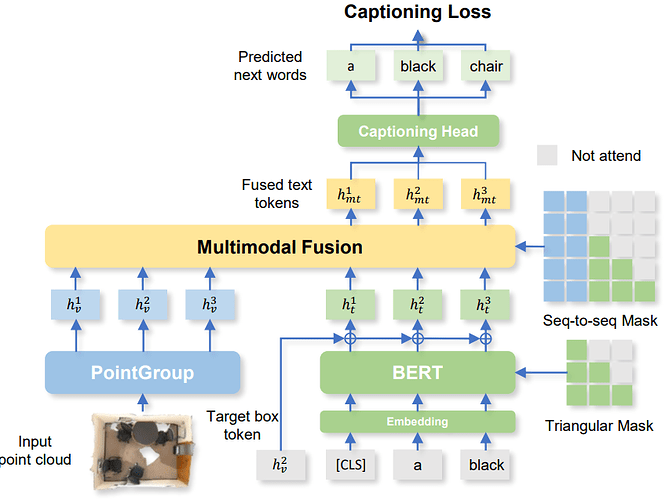

I would like to implement the “Multimodal Fusion” block from the following approach:

It is about image captioning for a 3D scene, and training is performed with next-token prediction.

As you can see in the figure, the Multimodal Fusion module takes as input visual embeddings (blue) and textual embeddings (green). The textual embeddings are then updated by the Multimodal Fusion module, which takes the visual embeddings into account when computing attention.
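For reference, here is a minimal sketch of one common way such a block is built: cross-attention, where the textual embeddings act as queries and the visual embeddings supply keys and values. This is an assumption, since the figure alone does not say whether the paper uses cross-attention or concatenation followed by joint self-attention. The class name `CrossAttentionFusion`, the single attention head, and the randomly initialised projection matrices are all hypothetical, chosen only to show the data flow in plain NumPy.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def layer_norm(x, eps=1e-5):
    # Normalise each token vector (no learned scale/shift in this sketch).
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

class CrossAttentionFusion:
    """Hypothetical single-head fusion block: text tokens query visual tokens."""

    def __init__(self, d_model, seed=0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(d_model)
        # Query projection is applied to text; key/value projections to vision.
        self.w_q = rng.normal(0.0, scale, (d_model, d_model))
        self.w_k = rng.normal(0.0, scale, (d_model, d_model))
        self.w_v = rng.normal(0.0, scale, (d_model, d_model))
        self.w_o = rng.normal(0.0, scale, (d_model, d_model))
        self.d_model = d_model

    def __call__(self, text, visual):
        # text: (T, d) textual embeddings; visual: (V, d) visual embeddings.
        q = text @ self.w_q                       # (T, d)
        k = visual @ self.w_k                     # (V, d)
        v = visual @ self.w_v                     # (V, d)
        scores = q @ k.T / np.sqrt(self.d_model)  # (T, V)
        weights = softmax(scores, axis=-1)        # each text token's distribution over visual tokens
        attended = weights @ v @ self.w_o         # (T, d)
        # Residual connection: textual embeddings are updated, not replaced.
        return layer_norm(text + attended), weights

fusion = CrossAttentionFusion(d_model=16)
text = np.random.default_rng(1).normal(size=(5, 16))     # 5 caption tokens
visual = np.random.default_rng(2).normal(size=(12, 16))  # 12 visual tokens
out, w = fusion(text, visual)
print(out.shape, w.shape)  # (5, 16) (5, 12)
```

None of the weight shapes depend on `T` or `V`, so the same block accepts any number of text or visual tokens; only the `(T, V)` attention matrix changes size.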

I’m very new to multimodal attention, so my question is: how do I implement this module, taking into account that the number of input tokens may vary?
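A common way to handle a varying number of tokens in a batch is to pad every sequence to the longest one and pass boolean masks to the attention, so padded positions receive zero weight. Because training uses next-token prediction, the text self-attention also needs a causal mask. The sketch below assumes that layout; the helper names `pad_batch` and `masked_attention` are hypothetical, and the learned projections are omitted so that only the masking logic is visible.

```python
import numpy as np

def pad_batch(seqs, d_model):
    """Stack variable-length (n_i, d) arrays into (B, N_max, d) plus a validity mask."""
    n_max = max(s.shape[0] for s in seqs)
    batch = np.zeros((len(seqs), n_max, d_model))
    valid = np.zeros((len(seqs), n_max), dtype=bool)
    for i, s in enumerate(seqs):
        batch[i, : s.shape[0]] = s
        valid[i, : s.shape[0]] = True
    return batch, valid

def masked_attention(q, k, v, allowed):
    """q: (B, T, d); k, v: (B, S, d); allowed: bool, broadcastable to (B, T, S)."""
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1])  # (B, T, S)
    scores = np.where(allowed, scores, -np.inf)                # block padded / future keys
    scores = scores - scores.max(axis=-1, keepdims=True)       # assumes >= 1 allowed key per row
    w = np.exp(scores)                                         # exp(-inf) == 0
    w = w / w.sum(axis=-1, keepdims=True)
    return w @ v, w

rng = np.random.default_rng(0)
d = 8
# Two samples with different numbers of visual tokens and caption tokens.
visual, v_valid = pad_batch([rng.normal(size=(10, d)), rng.normal(size=(6, d))], d)
text, t_valid = pad_batch([rng.normal(size=(4, d)), rng.normal(size=(7, d))], d)

T = text.shape[1]
causal = np.tril(np.ones((T, T), dtype=bool))     # token t may only see tokens <= t
self_allowed = causal[None] & t_valid[:, None, :]  # (B, T, T): causal + ignore padded text
cross_allowed = v_valid[:, None, :]                # (B, 1, V): ignore padded visual tokens

text_upd, _ = masked_attention(text, text, text, self_allowed)
fused, w_cross = masked_attention(text_upd, visual, visual, cross_allowed)
print(fused.shape)              # (2, 7, 8)
print(w_cross[1, :, 6:].max())  # 0.0, padded visual tokens get no weight
```

Outputs at padded text positions are still computed but should be excluded from the loss. In PyTorch, `torch.nn.MultiheadAttention` exposes the same idea through its `key_padding_mask` and `attn_mask` arguments (there, `True` means "masked out", the opposite of `allowed` above), and `torch.nn.TransformerDecoderLayer` combines causal self-attention over the text (`tgt_mask`) with cross-attention to a memory sequence (`memory_key_padding_mask`).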

Does the code exist? If so, where can I find it?

Thank you so much in advance for your help!