Actually, it seems I have managed to resolve the issue.

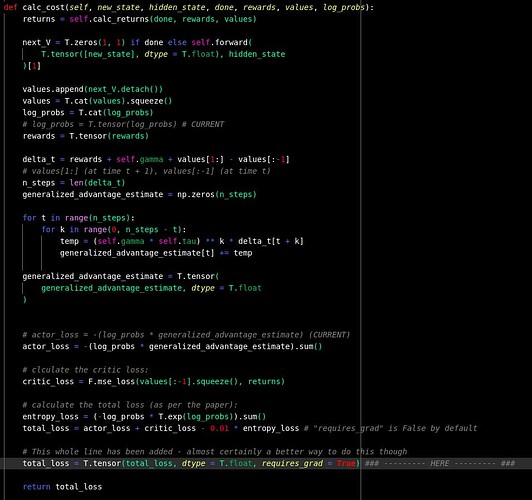

I don’t know why I didn’t try this sooner, since the error said: “No grad_fn for non-leaf saved tensor”. I went into the function that computes the loss and added `requires_grad=True` to the loss tensor itself, before calling `loss.backward()`. This seems to have fixed my issue; see below for the fix, at the “HERE” comment:

Many thanks to anyone who took the time to read my original query.

I welcome any suggestions for improvements.
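For anyone who lands here with a similar error, here is a minimal sketch of the pattern. It uses a hypothetical toy model and a hypothetical `compute_loss` function, not my actual code. It assumes the loss has been cut off from the autograd graph (here via `.detach()`, purely for illustration), so it does not reproduce my exact error message. It shows that setting `requires_grad` on the loss lets `loss.backward()` run. It also shows that, in that case, no gradient reaches the model parameters, so it may be worth checking `model.weight.grad` (or similar) after applying this kind of fix.

```python
import torch
import torch.nn as nn

# Hypothetical toy model and data, purely for illustration.
model = nn.Linear(3, 1)
x = torch.randn(4, 3)
y = torch.randn(4, 1)

def compute_loss(pred, target):
    # Hypothetical loss that leaves the autograd graph via .detach(),
    # so the result has no grad_fn.
    loss = ((pred.detach() - target) ** 2).mean()
    # The fix described above: mark the loss tensor as requiring grad,
    # so that loss.backward() no longer raises an error.
    loss.requires_grad_(True)
    return loss

loss = compute_loss(model(x), y)
loss.backward()  # runs without error

# Because the graph was cut, no gradient flows back to the model.
print(model.weight.grad)  # None
```

If the parameters show `None` gradients like this, the optimizer step will not change them, and the real fix is to find where the graph is being broken.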

Thanks,

Merlin