Hello,

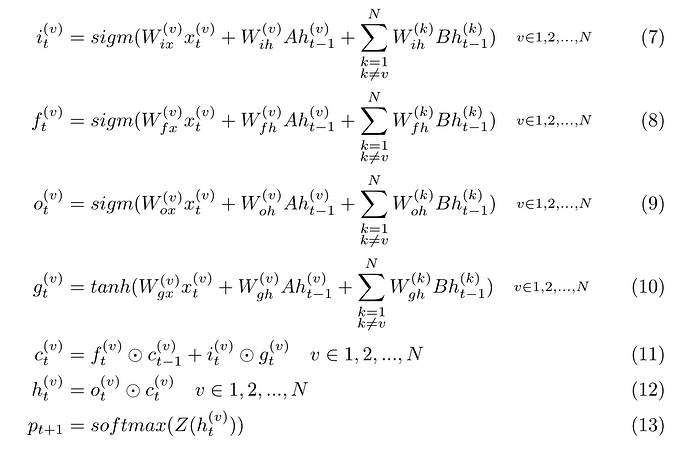

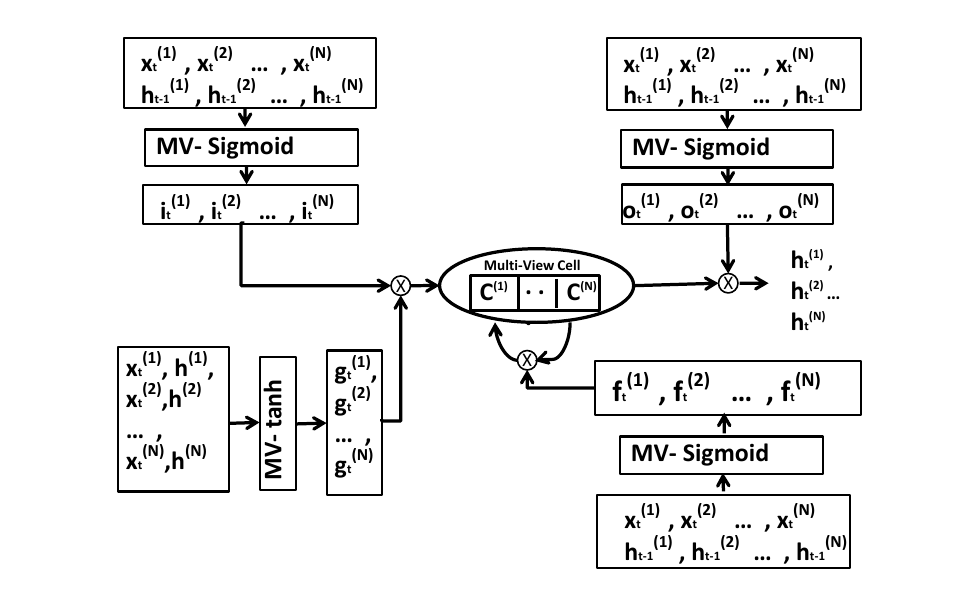

I want to implement the multi-view LSTM (MV-LSTM) from the paper "Extending Long Short-Term Memory for Multi-View Structured Learning." Images of the MV-LSTM architecture and equations have been attached. This architecture uses the hidden outputs of all the views to update the gates of each view. For example, in an emotion recognition system that uses lip movement, face images, and speech data, the lip-movement and face-image views are also taken into account when updating the LSTM cell whose input is speech. My main issue in implementing this is the back-propagation part in PyTorch. Can I simply backpropagate using PyTorch's autograd, or do I have to implement truncated backpropagation? If I have to implement truncated backpropagation, can I do it with an autograd function?
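To make the question concrete, here is a rough sketch of the kind of setup I have in mind. It is not the paper's exact model: the cell below simply feeds the previous hidden states of all views into every view's gates, and the names `MultiViewLSTMCell` and `run_chunk`, the sizes, and the loss are placeholders I made up for illustration. The truncation is done by detaching the states between chunks, which as far as I understand is the usual way to get truncated backpropagation with autograd.

```python
import torch
import torch.nn as nn


class MultiViewLSTMCell(nn.Module):
    """Simplified multi-view cell (hypothetical, not the paper's exact
    equations): each view's gates see that view's input plus the previous
    hidden states of ALL views."""

    def __init__(self, input_sizes, hidden_size):
        super().__init__()
        num_views = len(input_sizes)
        # One linear map per view producing the 4 gate pre-activations.
        self.gates = nn.ModuleList(
            [nn.Linear(n + num_views * hidden_size, 4 * hidden_size)
             for n in input_sizes]
        )

    def forward(self, xs, hs, cs):
        h_all = torch.cat(hs, dim=1)  # hidden states of every view
        new_hs, new_cs = [], []
        for gate, x, c in zip(self.gates, xs, cs):
            i, f, o, g = gate(torch.cat([x, h_all], dim=1)).chunk(4, dim=1)
            c_new = torch.sigmoid(f) * c + torch.sigmoid(i) * torch.tanh(g)
            new_hs.append(torch.sigmoid(o) * torch.tanh(c_new))
            new_cs.append(c_new)
        return new_hs, new_cs


def run_chunk(cell, seqs, hs, cs):
    """Unroll the cell over one chunk; seqs[v] has shape (T, batch, in_v)."""
    for t in range(seqs[0].shape[0]):
        hs, cs = cell([s[t] for s in seqs], hs, cs)
    return hs, cs


torch.manual_seed(0)
# Placeholder sizes: three views (e.g. lips, face, speech), 12 time steps.
input_sizes, hidden, batch, T, chunk = [3, 5, 4], 8, 2, 12, 4
cell = MultiViewLSTMCell(input_sizes, hidden)
opt = torch.optim.SGD(cell.parameters(), lr=0.1)
seqs = [torch.randn(T, batch, n) for n in input_sizes]
hs = [torch.zeros(batch, hidden) for _ in input_sizes]
cs = [torch.zeros(batch, hidden) for _ in input_sizes]

for start in range(0, T, chunk):
    # Truncation: detach the states so gradients stop at the chunk boundary.
    hs = [h.detach() for h in hs]
    cs = [c.detach() for c in cs]
    hs, cs = run_chunk(cell, [s[start:start + chunk] for s in seqs], hs, cs)
    loss = torch.cat(hs, dim=1).pow(2).mean()  # placeholder loss
    opt.zero_grad()
    loss.backward()  # autograd differentiates through the unrolled chunk
    opt.step()
```

Without the two `detach()` lines, a single `backward()` over the whole unrolled sequence would be full backpropagation through time. What I am unsure about is whether this pattern is enough for the MV-LSTM, or whether the cross-view connections need anything beyond it.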