Hi,

I’m trying to implement the multi-label loss function described in the following CVPR 2017 paper:

https://arxiv.org/abs/1705.09759

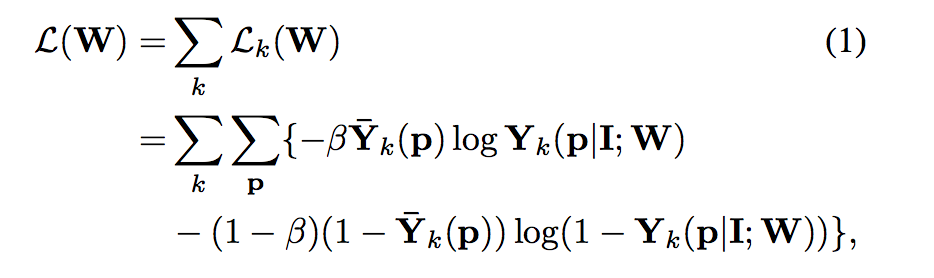

Which is reproduced below:

In this loss function, the Ŷ terms are ground-truth tensors of size `[numchannels, width, height]`, where each channel contains a binary image. The paper aims to find category-specific contours in images. Edge pixels are extremely rare compared with non-edge pixels, so the authors introduce a β term to balance the loss. p indexes the pixels in the image.
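In case the image does not render, here is the loss written out in the notation of this post. It is reconstructed from the description above and from the code below rather than copied from the paper, so it is worth checking against the paper itself. P_k(p) is a symbol introduced here for the predicted (sigmoid) probability of an edge in channel k at pixel p:

$$
L = \sum_{k} \sum_{p} \Big[ -\beta\, \hat{Y}_k(p) \log P_k(p) \;-\; (1-\beta)\,\big(1 - \hat{Y}_k(p)\big) \log\big(1 - P_k(p)\big) \Big]
$$

Edge pixels (Ŷ = 1) contribute only the first term, weighted by β, and non-edge pixels (Ŷ = 0) contribute only the second, weighted by 1 - β.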

I implemented the following class for the loss function but am unsure whether or not it is correct.

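```python
import torch
import torch.nn as nn


class MultiLLFuntion(nn.Module):

    def __init__(self, beta):
        super().__init__()  # required so nn.Module sets up its internal state
        self.beta = beta

    def forward(self, predictions, targets):
        """Multilabel loss
        Args:
            predictions: a tensor containing pixel-wise predictions (sigmoid outputs)
                shape: [batch_size, num_classes, width, height]
            targets: a tensor containing binary labels
                shape: [batch_size, num_classes, width, height]
        """
        # Clamp so that log() never sees exactly 0 or 1 (avoids -inf / NaN)
        eps = 1e-7
        predictions = predictions.clamp(eps, 1 - eps)
        log1 = torch.log(predictions)
        log2 = torch.log(1 - predictions)
        # -beta * Y * log(P): edge pixels
        term1 = torch.mul(torch.mul(targets, -self.beta), log1)
        # (1 - beta) * (1 - Y) * log(1 - P): non-edge pixels
        term2 = torch.mul(torch.mul(1 - targets, 1 - self.beta), log2)
        sum_of_terms = term1 - term2
        loss = torch.sum(sum_of_terms)
        return loss
```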
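The class above takes β as a fixed constructor argument. In class-balanced edge losses of this kind, β is often computed per image as the fraction of non-edge pixels. The helper below is a hypothetical sketch of that, under the assumption that a pixel counts as an edge if it is an edge for any class; `edge_beta` is a name introduced here, not part of PyTorch or the paper's code.

```python
import torch


def edge_beta(targets: torch.Tensor) -> torch.Tensor:
    """Hypothetical helper: beta = fraction of non-edge pixels in each image.

    targets: binary tensor of shape [batch_size, num_classes, width, height].
    Assumption: a pixel is an edge pixel if any class marks it as an edge.
    Returns shape [batch_size, 1, 1, 1], which broadcasts against the
    per-pixel loss terms.
    """
    # Collapse the class dimension: 1.0 where at least one class has an edge
    edge_any = (targets.sum(dim=1, keepdim=True) > 0).float()
    # Fraction of edge pixels per image; beta is the complement
    edge_fraction = edge_any.mean(dim=(1, 2, 3), keepdim=True)
    return 1.0 - edge_fraction


# Example: a 4x4 image with edges along the first row only
t = torch.zeros(1, 2, 4, 4)
t[0, 0, 0, :] = 1
t[0, 1, 0, 0] = 1  # overlapping edge in a second class, counted once
print(edge_beta(t).item())
```

Here 4 of the 16 pixels are edges, so β = 0.75. Because the loss only multiplies by β and 1 - β, a tensor of this shape can stand in for the scalar if it is passed to `forward` instead of the constructor.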

In this code, I assume that `predictions` is a tensor containing the probability of an edge at each pixel: at the end of the network, I apply a sigmoid to the output of the last layer.
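Applying a sigmoid and then `torch.log` can produce `-inf` or NaN when the sigmoid saturates at 0 or 1. A common alternative, sketched here as an assumption rather than as the paper's method, is to have the network output raw logits and let `torch.nn.functional.binary_cross_entropy_with_logits` combine the sigmoid and the log in a numerically stable way. The per-pixel `weight` argument carries β on edge pixels and 1 - β elsewhere. The class name `WeightedEdgeLossWithLogits` is hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class WeightedEdgeLossWithLogits(nn.Module):
    """Hypothetical logits-based variant of the class-balanced loss.

    Computes the sum over all elements of
        -beta * Y * log(sigmoid(x)) - (1 - beta) * (1 - Y) * log(1 - sigmoid(x))
    so the network should NOT apply a sigmoid to its last layer.
    """

    def __init__(self, beta):
        super().__init__()
        self.beta = beta

    def forward(self, logits, targets):
        targets = targets.float()
        # Per-pixel weight: beta where Y = 1, (1 - beta) where Y = 0
        weight = self.beta * targets + (1 - self.beta) * (1 - targets)
        return F.binary_cross_entropy_with_logits(
            logits, targets, weight=weight, reduction="sum"
        )
```

At inference time, `torch.sigmoid(logits)` still gives the per-pixel edge probabilities.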

I'm new to PyTorch, so I'm not sure how to check whether this implementation is correct. If it isn't, could someone help me fix it?
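One way to check the implementation is to compare it with PyTorch's built-in `torch.nn.functional.binary_cross_entropy`. With binary targets, weighting each pixel by β where Y = 1 and by 1 - β where Y = 0 yields exactly the per-pixel terms of the loss above. In this sketch, `manual_loss` is a hypothetical function that restates the arithmetic of the question's `forward`, and `reference_loss` uses the built-in:

```python
import torch
import torch.nn.functional as F


def manual_loss(predictions, targets, beta):
    # Same arithmetic as forward() in the question
    term1 = -beta * targets * torch.log(predictions)
    term2 = (1 - beta) * (1 - targets) * torch.log(1 - predictions)
    return torch.sum(term1 - term2)


def reference_loss(predictions, targets, beta):
    # Built-in BCE with a per-pixel weight: beta on edges, 1 - beta elsewhere
    weight = beta * targets + (1 - beta) * (1 - targets)
    return F.binary_cross_entropy(predictions, targets, weight=weight, reduction="sum")


torch.manual_seed(0)
# Random sigmoid outputs and sparse binary targets: [batch, classes, width, height]
predictions = torch.sigmoid(torch.randn(2, 3, 8, 8))
targets = (torch.rand(2, 3, 8, 8) > 0.9).float()
beta = 0.9

print(torch.allclose(manual_loss(predictions, targets, beta),
                     reference_loss(predictions, targets, beta), rtol=1e-4))
```

If the two agree, this prints `True`. The same pattern (random inputs, compare against a trusted reference with `torch.allclose`) works for checking the gradients too, by calling `.backward()` on both losses with `requires_grad=True` inputs.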