Hello,

I have a network trained for binary semantic segmentation, and now I am trying to train it to segment 12 classes. The issue is that my code always ends up interpreting my target mask as all zeros. The target mask is read correctly and only gets messed up in this section of code:

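    from albumentations import Compose, HorizontalFlip, Normalize
    from albumentations.pytorch import ToTensor  # the albumentations ToTensor, not torchvision's

    def get_transform(phase, mean, std):
        list_trans = []
        if phase == 'train':
            # random horizontal flip, applied only during training
            list_trans.extend([HorizontalFlip(p=0.5)])
        # normalizing the data & then converting to tensors
        list_trans.extend([Normalize(mean=mean, std=std, p=1), ToTensor()])
        list_trans = Compose(list_trans)
        return list_trans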

I realized that my binary segmentation masks had a pixel depth of 1, whereas my 12-class masks have a pixel depth of 8, which may be what is getting messed up by the following:

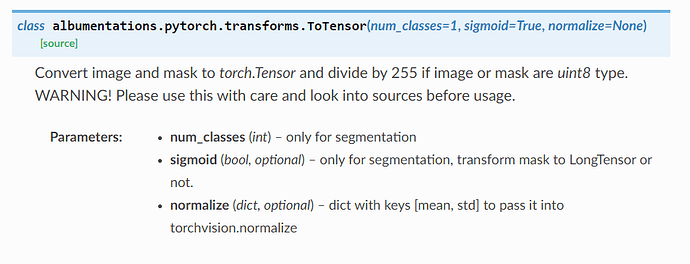

The version of ToTensor I'm using is the one from albumentations.

So is the issue that, because my masks are now uint8, they're being divided by 255 as mentioned in the documentation? I am not totally sure what the issue is, and if it is what I suspect, I'm not sure how to fix it. Lots of people do multi-class semantic segmentation, so there has to be an explanation!
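
To make my suspicion concrete, here is a minimal pure-Python sketch of the arithmetic. It is not the albumentations code itself. It only assumes that the mask values get divided by 255 and are later cast back to integer labels, and `class_ids` is a made-up stand-in for the values in my 12-class mask:

```python
# Hypothetical mask values: class indices 0..11 stored as 8-bit integers.
class_ids = list(range(12))

# Assumption: the mask is divided by 255, the same way a uint8 image would be.
scaled = [v / 255 for v in class_ids]

# Casting the scaled values back to integer labels truncates toward zero.
labels = [int(v) for v in scaled]

print(max(scaled))  # largest scaled value, still far below 1
print(labels)       # every class index has collapsed to 0
```

If that assumption holds, every class index ends up below 1 after the division, which would explain a target that looks like all zeros.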

Any help appreciated.

Kyle