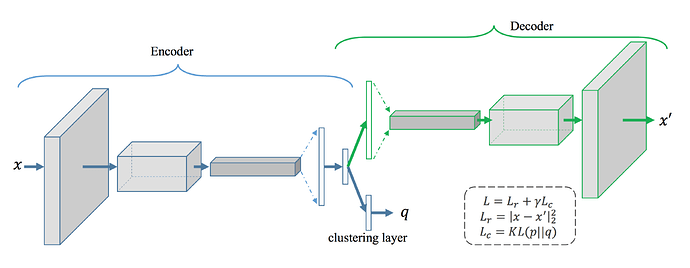

Hello, I'm working on a convolutional autoencoder in which I optimize one cost on the output layer and another cost on the bottleneck layer. Please see the picture (I took it from a published paper). Note that I'm not minimizing a clustering loss on the bottleneck layer; my cost is a different one. I know how to backpropagate the reconstruction loss, but I'm not sure how to backpropagate the gradient of the cost that I'm optimizing on the output of the bottleneck layer. Can anyone please help? Thank you.

Could you explain a bit how you are calculating the “gradient of the cost” and how the gradients should be calculated?

Based on the description it seems you would like to calculate the 2nd derivative?

Hi @ptrblck, my cost functions are standard MSE losses. I'm not calculating a second derivative. This is what I'm doing:

- From the forward pass, capture the outputs of the bottleneck layer and the output layer.

  ```python
  # Forward pass
  output_en, output_de = self.forward(trInput)
  ```

- Then calculate the reconstruction loss using MSE on the activation of the output layer.

  ```python
  # Calculate reconstruction loss
  loss = criterion(output_de, trOutput)
  ```

- Now calculate the cost from the output of the bottleneck layer.

  ```python
  secondCost = self.secondCost(output_en, L)
  ```

- Add the second cost to the loss.

  ```python
  loss = loss + secondCost
  ```

- Call the backward pass and update the parameters.

  ```python
  # Backward and optimize
  optimizer.zero_grad()
  loss.backward()
  optimizer.step()
  ```
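For reference, the steps above can be assembled into a self-contained training step. This is a minimal sketch, not the model from the paper: the `ConvAutoencoder` class, its layer sizes, the random 28x28 inputs, and the bottleneck target `L` are hypothetical stand-ins, and the second cost is assumed to be an MSE against `L` (consistent with both costs being MSE).

```python
import torch
import torch.nn as nn

class ConvAutoencoder(nn.Module):
    """Hypothetical tiny conv autoencoder returning (bottleneck, reconstruction)."""
    def __init__(self):
        super().__init__()
        self.encoder = nn.Sequential(
            nn.Conv2d(1, 8, kernel_size=3, stride=2, padding=1),  # 28x28 -> 14x14
            nn.ReLU(),
            nn.Conv2d(8, 4, kernel_size=3, stride=2, padding=1),  # 14x14 -> 7x7 bottleneck
        )
        self.decoder = nn.Sequential(
            nn.ConvTranspose2d(4, 8, kernel_size=4, stride=2, padding=1),  # 7x7 -> 14x14
            nn.ReLU(),
            nn.ConvTranspose2d(8, 1, kernel_size=4, stride=2, padding=1),  # 14x14 -> 28x28
        )

    def forward(self, x):
        output_en = self.encoder(x)          # bottleneck activation
        output_de = self.decoder(output_en)  # reconstruction
        return output_en, output_de

torch.manual_seed(0)
model = ConvAutoencoder()
criterion = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

trInput = torch.randn(16, 1, 28, 28)
trOutput = trInput                   # plain autoencoder: reconstruct the input
L = torch.randn(16, 4, 7, 7)         # hypothetical target for the bottleneck cost

output_en, output_de = model(trInput)
loss = criterion(output_de, trOutput) + criterion(output_en, L)  # reconstruction + bottleneck cost

optimizer.zero_grad()
loss.backward()   # one backward pass populates gradients for every parameter in the graph
optimizer.step()
```

A single `backward()` on the summed loss is enough; there is no need to backpropagate the two costs separately.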

Do you think this is the correct way? I'm unsure whether the second cost will update the parameters of the encoder.

@ptrblck To be more specific: for the second loss I only want to update the parameters of the encoder, and for the reconstruction loss I want to update the parameters of the whole network (encoder + decoder). I'm not sure whether my current implementation achieves this.

Thank you.

Your implementation is correct and the parameters of the encoder would accumulate the gradients from both losses, while the parameters of the decoder would only get the gradients from the reconstruction loss.
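One way to check this numerically is to backpropagate each loss on its own, then their sum, and compare the gradients. This is a sketch with hypothetical linear stand-ins for the encoder and decoder and a hypothetical bottleneck target `L`. The encoder gradient from the summed loss should equal the sum of the two separate gradients, and the decoder should receive no gradient from the bottleneck cost.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
# Hypothetical linear encoder/decoder standing in for the conv layers
encoder = nn.Linear(8, 3)
decoder = nn.Linear(3, 8)
criterion = nn.MSELoss()

x = torch.randn(5, 8)
L = torch.randn(5, 3)  # hypothetical bottleneck target

def losses():
    # Fresh forward pass each time, since backward() frees the graph
    output_en = encoder(x)
    output_de = decoder(output_en)
    return criterion(output_de, x), criterion(output_en, L)

def grads(module):
    # Snapshot of each parameter's .grad (None if no gradient reached it)
    return [None if p.grad is None else p.grad.clone() for p in module.parameters()]

def reset():
    encoder.zero_grad(set_to_none=True)
    decoder.zero_grad(set_to_none=True)

recon, _ = losses()              # 1) reconstruction loss only
recon.backward()
enc_recon, dec_recon = grads(encoder), grads(decoder)

reset()
_, second = losses()             # 2) bottleneck cost only
second.backward()
enc_second, dec_second = grads(encoder), grads(decoder)

reset()
recon, second = losses()         # 3) sum of both, as in the question
(recon + second).backward()
enc_total, dec_total = grads(encoder), grads(decoder)

enc_is_sum = all(torch.allclose(t, r + s, atol=1e-6)
                 for t, r, s in zip(enc_total, enc_recon, enc_second))
dec_is_recon_only = all(torch.allclose(t, r, atol=1e-6)
                        for t, r in zip(dec_total, dec_recon))
print(enc_is_sum, dec_is_recon_only, dec_second)  # True True [None, None]
```

`zero_grad(set_to_none=True)` is used so that a parameter a loss never touches keeps `.grad` as `None`, which makes the "decoder gets nothing from the second cost" case easy to see.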

Thank you for confirming this!

Hi @ptrblck, I have one more question. I want to optimize a different cost using the activation of the bottleneck layer. I will calculate this cost using the log of some quantity derived from the bottleneck layer. This is what I'm thinking:

- From the forward pass, capture the outputs of the bottleneck layer and the output layer.

  ```python
  output_en, output_de = self.forward(trInput)
  ```

- First, calculate the reconstruction loss as shown below.

  ```python
  loss = criterion(output_de, trOutput)
  ```

- Then calculate the new cost using the bottleneck output.

  ```python
  # M: some quantity I'm calculating from output_en
  loss2 = -torch.log(M)
  ```

- Add the second cost to the loss.

  ```python
  loss = loss + loss2
  ```

- Backward and optimize.

  ```python
  optimizer.zero_grad()
  loss.backward()
  optimizer.step()
  ```

The reconstruction loss is the standard MSE, so I know that PyTorch can calculate its derivative. But the second loss is a custom one, and I'm not sure whether PyTorch can calculate its derivative. Do I need to write a new criterion for the second cost? If so, how do I do that?

Thank you,

Tomojit

As long as you are using PyTorch operations, Autograd will be able to create the computation graph and calculate the gradients of the loss with respect to all parameters in this graph.
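As a sketch of this, the snippet below builds a `-log(M)` cost purely from PyTorch operations and compares the Autograd gradient with a hand-derived one. The choice of `M` (the mean sigmoid activation of the bottleneck) is a hypothetical example, since the actual quantity isn't given in the thread; any `M` built from differentiable PyTorch ops works the same way, provided it stays strictly positive so the log is defined. No custom criterion class is needed; a plain Python function is enough.

```python
import torch

torch.manual_seed(0)
# Stand-in for the bottleneck output; in the real model this comes from the encoder
output_en = torch.randn(4, 3, dtype=torch.float64, requires_grad=True)

def bottleneck_cost(z):
    # Hypothetical M: mean sigmoid activation (strictly positive, so log is defined)
    M = torch.sigmoid(z).mean()
    return -torch.log(M)

loss2 = bottleneck_cost(output_en)
loss2.backward()

# Hand-derived gradient: d(-log M)/dz_i = -(1/M) * s_i * (1 - s_i) / N
s = torch.sigmoid(output_en.detach())
M = s.mean()
manual = -(s * (1 - s)) / (M * s.numel())

print(torch.allclose(output_en.grad, manual))                    # True
print(torch.autograd.gradcheck(bottleneck_cost, (output_en,)))   # True
```

`torch.autograd.gradcheck` compares Autograd's gradient against finite differences and expects double-precision inputs, which is why the stand-in tensor is `float64`.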

You can verify this by checking the `.grad` attributes of all encoder parameters before the first backward call (they should be `None`) and afterwards (they should contain valid values).
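A minimal version of that check, using hypothetical small `encoder`/`decoder` modules and a hypothetical positive quantity `M` taken from the bottleneck:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
# Hypothetical small model: encoder and decoder kept as separate submodules
encoder = nn.Sequential(nn.Linear(10, 4), nn.Sigmoid())
decoder = nn.Linear(4, 10)
criterion = nn.MSELoss()

x = torch.randn(8, 10)

# Before any backward call, no gradients exist yet
before = [p.grad is None for p in encoder.parameters()]

output_en = encoder(x)
output_de = decoder(output_en)
M = output_en.mean()  # hypothetical positive quantity (sigmoid outputs are in (0, 1))
loss = criterion(output_de, x) - torch.log(M)
loss.backward()

# Afterwards, every encoder parameter should hold a finite gradient
after = [p.grad is not None and torch.isfinite(p.grad).all().item()
         for p in encoder.parameters()]
print(before, after)  # [True, True] [True, True]
```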