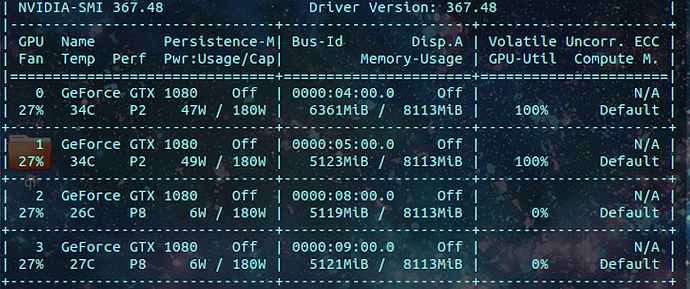

Recently I tried to train models in parallel on multiple GPUs (4 GPUs), but the code always hangs, and the GPU status looks like the screenshot above.

More specifically, it works well with only 2 GPUs, but with more than 2 GPUs it doesn't work at all. I'm not sure what's going on. Could someone give me some advice on this?