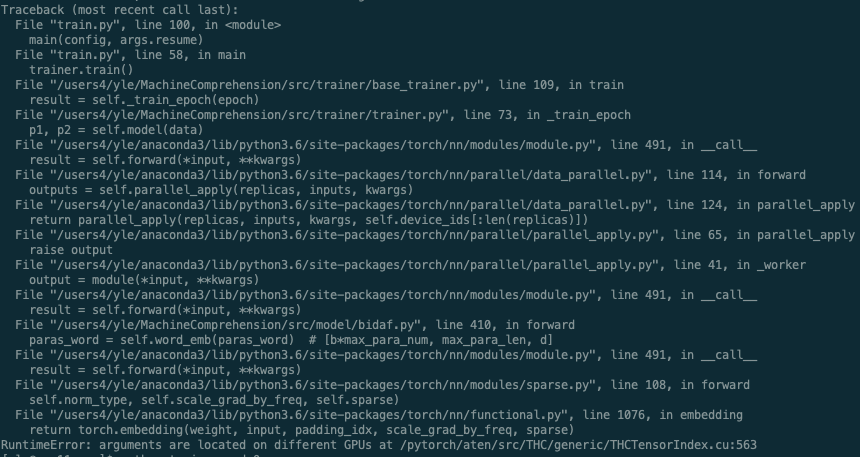

The following is a traceback. My current guess is that the error comes from having two models, a generator and a discriminator, each wrapped in DataParallel separately, which leads to the following issue at D(G(z)).

What’s the way to fix this?

    File "/home/jerin/code/fairseq/fairseq/models/lstm.py", line 207, in forward
      x = self.embed_tokens(src_tokens)
    File "/home/jerin/.local/lib/python3.5/site-packages/torch/nn/modules/module.py", line 477, in __call__
      result = self.forward(*input, **kwargs)
    File "/home/jerin/.local/lib/python3.5/site-packages/torch/nn/modules/sparse.py", line 110, in forward
      self.norm_type, self.scale_grad_by_freq, self.sparse)
    File "/home/jerin/.local/lib/python3.5/site-packages/torch/nn/functional.py", line 1110, in embedding
      return torch.embedding(weight, input, padding_idx, scale_grad_by_freq, sparse)
    RuntimeError: arguments are located on different GPUs at /pytorch/aten/src/THC/generic/THCTensorIndex.cu:513

Exception ignored in: <bound method tqdm.__del__ of | epoch 000: 0%| | 0/3750 [00:08<?, ?it/s]>
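
To make the setup concrete, here is a minimal sketch of one thing I am considering, assuming my guess about the two separate DataParallel wrappers is right: put D(G(z)) inside a single module and wrap that once, so each replica runs its generator and discriminator on the same device. The classes `Generator`, `Discriminator`, and `GeneratorThenDiscriminator` are hypothetical stand-ins, not the fairseq models, and I have not verified that this resolves the traceback above.

```python
import torch
import torch.nn as nn


class Generator(nn.Module):
    """Hypothetical stand-in for the real generator (not the fairseq LSTM)."""

    def __init__(self, vocab_size=100, embed_dim=16):
        super().__init__()
        self.embed_tokens = nn.Embedding(vocab_size, embed_dim)
        self.out = nn.Linear(embed_dim, vocab_size)

    def forward(self, src_tokens):
        x = self.embed_tokens(src_tokens)
        # argmax is not differentiable; it only keeps this sketch short.
        return self.out(x).argmax(dim=-1)


class Discriminator(nn.Module):
    """Hypothetical stand-in for the real discriminator."""

    def __init__(self, vocab_size=100, embed_dim=16):
        super().__init__()
        self.embed_tokens = nn.Embedding(vocab_size, embed_dim)
        self.score = nn.Linear(embed_dim, 1)

    def forward(self, tokens):
        x = self.embed_tokens(tokens).mean(dim=1)
        return self.score(x).squeeze(-1)


class GeneratorThenDiscriminator(nn.Module):
    """Computes D(G(z)) inside one forward pass, so a single DataParallel
    wrapper replicates both sub-modules onto the same device together."""

    def __init__(self, generator, discriminator):
        super().__init__()
        self.generator = generator
        self.discriminator = discriminator

    def forward(self, src_tokens):
        return self.discriminator(self.generator(src_tokens))


# Falls back to CPU when no GPU is present; DataParallel then simply
# calls the wrapped module directly.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
combined = GeneratorThenDiscriminator(Generator(), Discriminator()).to(device)
model = nn.DataParallel(combined)

src_tokens = torch.randint(0, 100, (8, 5), device=device)
scores = model(src_tokens)  # one score per sequence in the batch
print(scores.shape)
```

The idea is that the intermediate output of G never gets gathered to one GPU and re-scattered before reaching D. Whether that matches what actually happens in my training loop is still an assumption.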