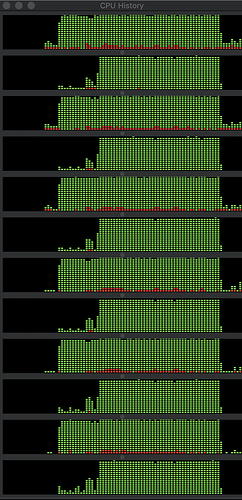

I’m trying to run different neural networks in parallel on different CPU cores, but I’m finding that this gives no speedup compared to running them sequentially.

Below is my code, which reproduces the issue exactly. Running it shows that 2 processes take roughly twice as long as 1 process, even though each process does the same amount of work and both runs should take about the same time.

    import time
    import torch.multiprocessing as mp
    import gym
    import numpy as np
    import copy
    import torch.nn as nn
    import torch


    class NN(nn.Module):
        """Small fully connected network; fc3 and fc4 dominate the compute."""

        def __init__(self, output_dim):
            nn.Module.__init__(self)
            self.fc1 = nn.Linear(4, 50)
            self.fc2 = nn.Linear(50, 500)
            self.fc3 = nn.Linear(500, 5000)
            self.fc4 = nn.Linear(5000, output_dim)
            self.relu = nn.ReLU()

        def forward(self, x):
            x = self.relu(self.fc1(x))
            x = self.relu(self.fc2(x))
            x = self.relu(self.fc3(x))
            x = self.fc4(x)
            return x


    def Worker(ix):
        # Each worker builds its own environment and model, then runs
        # 2000 forward passes on freshly reset observations.
        print("Starting training for worker ", ix)
        env = gym.make('CartPole-v0')
        model = NN(2)
        for _ in range(2000):
            model(torch.Tensor(env.reset()))
        print("Finishing training for worker ", ix)


    def overall_process(num_workers):
        # Start one process per worker and wait for all of them to finish.
        workers = []
        for ix in range(num_workers):
            worker = mp.Process(target=Worker, args=(ix, ))
            workers.append(worker)
        for worker in workers:
            worker.start()
        for worker in workers:
            worker.join()
        print("Finished Training")
        print(" ")


    # The guard keeps child processes from re-running the timing code
    # on platforms that start processes with "spawn".
    if __name__ == "__main__":
        start = time.time()
        overall_process(1)
        print("Time taken: ", time.time() - start)
        print(" ")

        start = time.time()
        overall_process(2)
        print("Time taken: ", time.time() - start)

Does anyone know why this might be happening and how to fix it?

I thought this might be because PyTorch automatically parallelises CPU operations across threads in the background, so I tried adding the two lines below, but they don’t always resolve the issue:

    torch.set_num_threads(1)
    torch.set_num_interop_threads(1)
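
One thing that may be worth ruling out is where the thread limit is applied. The sketch below is not a confirmed fix; it only shows one way to set the limit inside each worker process, before any forward pass, and to report back what `torch.get_num_threads()` returns there. The names `build_model`, `worker`, and `run_workers` are hypothetical. Two things differ from the code above and are assumptions made to keep the example self-contained: a fixed zero observation stands in for `env.reset()` so gym is not needed, and `torch.no_grad()` is used because no gradients are required.

```python
import time

import torch
import torch.multiprocessing as mp
import torch.nn as nn


def build_model():
    # Same layer sizes as the NN class in the question.
    return nn.Sequential(
        nn.Linear(4, 50), nn.ReLU(),
        nn.Linear(50, 500), nn.ReLU(),
        nn.Linear(500, 5000), nn.ReLU(),
        nn.Linear(5000, 2),
    )


def worker(ix, num_iters, result_queue):
    # Limit intra-op threads in *this* process, before any forward pass.
    torch.set_num_threads(1)
    model = build_model()
    x = torch.zeros(4)  # assumption: stand-in for a CartPole observation
    with torch.no_grad():
        for _ in range(num_iters):
            model(x)
    # Report the thread count this process actually ended up with.
    result_queue.put((ix, torch.get_num_threads()))


def run_workers(num_workers, num_iters=2000):
    # Returns (elapsed seconds, sorted list of (worker index, thread count)).
    result_queue = mp.Queue()
    procs = [
        mp.Process(target=worker, args=(ix, num_iters, result_queue))
        for ix in range(num_workers)
    ]
    start = time.time()
    for p in procs:
        p.start()
    # Drain the queue before joining so no child blocks on a full pipe.
    results = [result_queue.get() for _ in procs]
    for p in procs:
        p.join()
    return time.time() - start, sorted(results)


if __name__ == "__main__":
    for n in (1, 2):
        elapsed, threads = run_workers(n)
        print(f"{n} worker(s): {elapsed:.2f}s, (worker, threads) = {threads}")
```

If every worker reports a single thread and the 2-worker run still takes about twice as long, the slowdown probably comes from somewhere other than PyTorch's intra-op thread pool, for example the number of physical cores actually available to the processes.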